AI Agent Routing: Tutorial & Examples

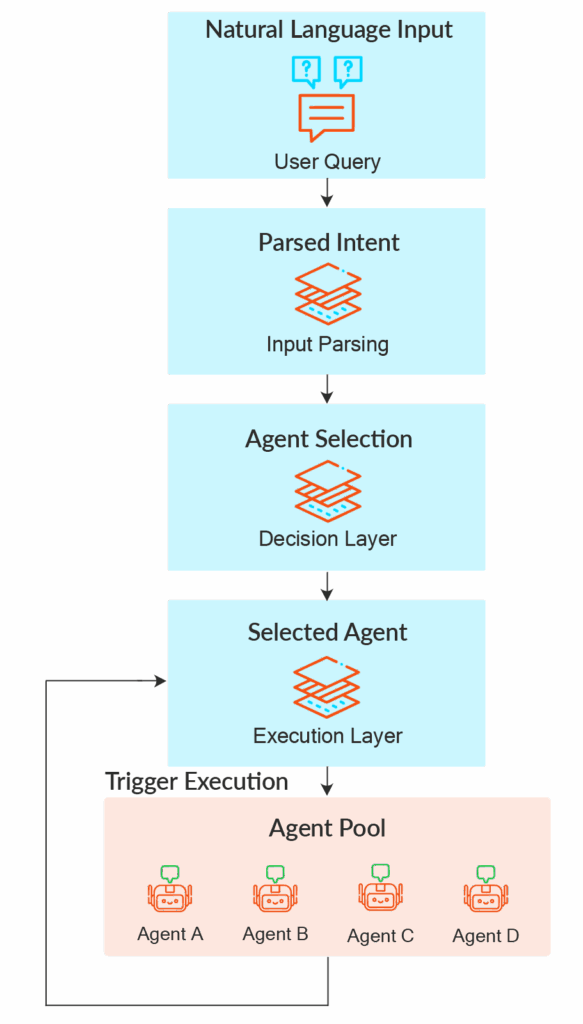

AI agent routing determines which agent, function, or system is best suited to handle an incoming request. It acts as a decision layer that directs each user query to the relevant part of the system. Routing decisions can be made statically, dynamically, or with a hybrid of the two.

AI agent routing must be considered during the agent architecture and system design phase, as it is crucial to the AI system’s scalability, extensibility, speed, and cost. This article explores the various design patterns and best practices for implementing AI agent routing.

Summary of key AI agent routing concepts

The table below provides a brief overview of each type of routing mechanism and its description.

| Routing mechanism | Description |
|---|---|
| Rule-based routing | Routes the request based on pre-defined or hard-coded rules. |
| Semantic routing | Routes the request based on the semantic meaning of the query rather than its exact wording. |
| Intent-based routing | Routes the request based on what the user wants to accomplish, rather than just the words used. |
| LLM-based routing | Uses a large language model to analyze the requests and send them to an AI agent or call a tool accordingly. |
| Hierarchical agent routing | Uses a high-level agent to determine which worker agent should handle the task. The agents can be provided with tools to accomplish the tasks (function calling). |
| Auction-based routing | Multiple agents “bid” on how confident they are in handling the request, and the request is routed to the highest bidder. |

Understanding AI agent routers

The AI agent router is the first destination of a user query. It typically consists of three components.

Decision Layer

The decision layer is the routing system’s “brain”. It analyzes the user request to determine which AI agent, tool, or system is best suited to handle the request based on specified criteria.

Input Parsing

Depending on how the decision-making layer is designed, you may need to standardize the input. This component is responsible for parsing and processing user queries to meet the formatting requirements of the decision-making layer. This largely depends on the use case. In some cases, the parsed user query becomes input to the decision component.

Execution Layer

This component orchestrates the actual handoff of the user query to the selected agent, tool, or system. The execution layer collects and potentially processes the results before returning them to the user.
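To make the three components concrete, here is a minimal sketch of how they might fit together. All names (`ParsedQuery`, `parse_input`, `decide`, `execute`, `HANDLERS`) are hypothetical and chosen for illustration, and the keyword check in `decide` is only a placeholder for whichever routing strategy you pick.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ParsedQuery:
    """Normalized form of the raw user query (hypothetical structure)."""
    text: str


def parse_input(raw: str) -> ParsedQuery:
    # Input parsing: trim whitespace and lowercase so the decision layer
    # always sees a consistent format.
    return ParsedQuery(text=raw.strip().lower())


def decide(query: ParsedQuery) -> str:
    # Decision layer: return the name of the handler that should take the query.
    # A placeholder keyword check stands in for any real routing strategy.
    return "billing" if "invoice" in query.text else "general"


# Hypothetical handlers standing in for agents, tools, or downstream systems.
HANDLERS: Dict[str, Callable[[ParsedQuery], str]] = {
    "billing": lambda q: f"[billing] handling: {q.text}",
    "general": lambda q: f"[general] handling: {q.text}",
}


def execute(route: str, query: ParsedQuery) -> str:
    # Execution layer: hand the query to the selected handler and return its result.
    return HANDLERS[route](query)


def handle(raw: str) -> str:
    query = parse_input(raw)
    return execute(decide(query), query)
```

Keeping the three steps as separate functions means the decision logic can be swapped out later without touching parsing or execution.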

Types of AI agent routing

You can implement the decision layer of the agent routing system in several ways depending on the use case.

Rule-based Routing

Rule-based routing, sometimes called logical routing, uses pre-defined or hard-coded rules and conditions for decision making. This routing type is suitable for simpler use cases where the queries fall into well-defined categories with little or no room for divergence. It ensures the system behavior is predictable. The simplest way to implement rule-based routing is to use an if-else statement.

Semantic Routing

Semantic routing makes decisions based on the meaning of the user’s query rather than its exact wording. It identifies queries that are phrased differently but express similar meanings.

Depending on the system’s complexity, there are several ways of implementing semantic routing.

- Use small or large language models to interpret and reason over the meaning of the user query.

- Use pre-trained intent classification models or third-party NLP tools like spaCy, which uses pre-trained word vectors.

- Use libraries like semantic_router, which rely on pre-trained embedding models such as those offered by OpenAI. Many similar tools are available.

A typical real-world use case for semantic routing is customer support chatbots. Users can ask various queries, such as “I lost my package” or “my order hasn’t arrived yet.” Although worded differently, these queries share the same intent, so both would be routed to the shipping issue agent rather than the billing or returns agent.
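The idea can be sketched without any external service. The example below is an illustration only: it uses word counts as a toy stand-in for a real embedding model, and the route names, example utterances, and the 0.3 threshold are all assumptions made for this sketch.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Counter:
    # Toy "embedding": a bag of word counts. A real system would call an
    # embedding model here instead.
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical routes, each described by a few example utterances.
ROUTES: Dict[str, List[str]] = {
    "shipping": ["my package is lost", "my order has not arrived", "where is my delivery"],
    "billing": ["i was charged twice", "question about my invoice", "refund my payment"],
}


def semantic_route(query: str, threshold: float = 0.3) -> str:
    q = embed(query)
    best_route, best_score = "fallback", 0.0
    for route, examples in ROUTES.items():
        # Score a route by its most similar example utterance.
        score = max(cosine(q, embed(e)) for e in examples)
        if score > best_score:
            best_route, best_score = route, score
    # Below the threshold, no route is similar enough to trust.
    return best_route if best_score >= threshold else "fallback"
```

With this sketch, both “I lost my package” and “my order hasn't arrived yet” land on the `shipping` route, while an unrelated query falls through to `fallback`. Word overlap is a crude proxy for meaning; real embeddings also match paraphrases that share no words.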

Intent-based Routing

Intent-based routing works by determining user intent from the query and mapping the query to a predefined function from a list of functions. Since intent-based routers work through pre-defined functionality, this design is not as intelligent as some other types of routing and may not do well with ambiguous queries.

Intent-based routing can be implemented in multiple ways. It can be as simple as keyword-based matching or as advanced as language models, third-party tools, or an intent classifier.

A restaurant chatbot is a good use case for intent-based routing. Users can input queries such as “I want to book a table” or “I want to cancel a dinner booking”. In this scenario, intent-based routing works well because the possible user goals (booking, cancelling, asking about hours, etc.) are known in advance.
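A minimal sketch of the keyword-matching variant for the restaurant scenario might look like the following. The intent names, keyword lists, and handler functions are hypothetical; a production system would more likely use a trained intent classifier.

```python
from typing import Callable, List, Tuple


def book_table(query: str) -> str:
    return "Starting the booking flow"


def cancel_booking(query: str) -> str:
    return "Starting the cancellation flow"


def opening_hours(query: str) -> str:
    return "Looking up opening hours"


def ask_to_rephrase(query: str) -> str:
    return "Sorry, could you rephrase that?"


# Order matters: "cancel" is checked before "book" because the phrase
# "cancel a dinner booking" contains the substring "book".
INTENTS: List[Tuple[str, List[str], Callable[[str], str]]] = [
    ("cancel", ["cancel"], cancel_booking),
    ("book", ["book", "reserve"], book_table),
    ("hours", ["open", "hours", "close"], opening_hours),
]


def detect_intent(query: str) -> str:
    text = query.lower()
    for name, keywords, _ in INTENTS:
        if any(k in text for k in keywords):
            return name
    return "unknown"


def route_by_intent(query: str) -> str:
    intent = detect_intent(query)
    for name, _, handler in INTENTS:
        if name == intent:
            return handler(query)
    # No known intent matched: ask the user to clarify instead of guessing.
    return ask_to_rephrase(query)
```

The ordering trick shows why keyword matching struggles with ambiguity: each new intent can collide with an existing keyword.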

LLM-based Routing

LLMs can make routing decisions based on contextual understanding and interpret user requests as needed. They tend to handle complex and ambiguous queries better than keyword rules or fixed intent lists. Since the LLM is at the core of the decision, you can also ask it to explain the reasoning that led it to choose a particular path.

However, the obvious disadvantages compared to the previous routing types are higher cost and latency per query. LLM-based routing may be over-engineering for a more straightforward use case. In addition, LLMs are prone to hallucinations, which can lead to incorrect routing.

A complex use case where LLM routing would be essential is a multi-agent enterprise AI assistant, where a single chat window handles technical support, legal analysis, HR queries, customer insights, and financial modeling. Users can ask complex, multi-intent questions such as “Compare our performance of Q1 and Q4 in the last year and draft a financial analysis document based on the difference.”

The LLM router would interpret the composite, multi-intent inputs and route each to the appropriate agent. A use case like this is where other routing methods would fall short. Traditional rule-based and intent classification systems would struggle to handle this level of contextual understanding and task decomposition.
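The sketch below illustrates one way such a router could be structured. It makes several assumptions: the agent names, the prompt wording, and the JSON response format are invented for this example, and the model is passed in as a plain callable so the logic can be shown with a stub (`fake_llm`) instead of a real API call. The validation step guards against the hallucination risk mentioned earlier.

```python
import json
from typing import Callable, Dict, List

# Hypothetical agent names for the enterprise assistant scenario.
ALLOWED_AGENTS = {"technical_support", "legal", "hr", "customer_insights", "finance"}

PROMPT_TEMPLATE = (
    "You are a router. Split the user request into sub-tasks and assign each "
    "to one of: {agents}. Respond with JSON only, in the form "
    '[{{"agent": "<name>", "task": "<sub-task>"}}].\n\nRequest: {query}'
)


def llm_route(query: str, llm: Callable[[str], str]) -> List[Dict[str, str]]:
    prompt = PROMPT_TEMPLATE.format(agents=", ".join(sorted(ALLOWED_AGENTS)), query=query)
    raw = llm(prompt)
    try:
        plan = json.loads(raw)
    except json.JSONDecodeError:
        plan = None
    # Reject malformed output or agent names the model made up.
    valid = isinstance(plan, list) and len(plan) > 0 and all(
        isinstance(step, dict)
        and step.get("agent") in ALLOWED_AGENTS
        and isinstance(step.get("task"), str)
        for step in plan
    )
    return plan if valid else [{"agent": "fallback", "task": query}]


def fake_llm(prompt: str) -> str:
    # Stub standing in for a real model call, used only to exercise the router.
    return json.dumps([
        {"agent": "finance", "task": "Compare Q1 and Q4 performance"},
        {"agent": "finance", "task": "Draft a financial analysis document"},
    ])
```

Calling `llm_route(question, fake_llm)` returns the two-step plan from the stub. In practice you would replace the stub with a function that sends the prompt to your model and returns its text.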

Hierarchical Routing

Hierarchical routing is for systems with a “high-level” agent and a group of “worker” agents, each with its own role and specialization. The high-level agent determines which agent is the best fit to handle the query. Hierarchical routing is a valuable strategy in enterprise systems where a group of agents works towards a specific goal, and when there are clearly defined hierarchies.

Hierarchical routing has some overlap with LLM-based routing, especially when the master agent itself is an LLM. In these cases, the LLM plays the supervisory role by reasoning over the context, delegating tasks to the right agents, interpreting user queries, etc.

However, hierarchical routing does not necessarily require an LLM – you can implement it with less advanced machine learning models or rule-based routing. The key distinction between hierarchical routing and LLM-based routing is the structure – hierarchical routing emphasizes tiered decision making. In contrast, LLM-based routing focuses more on semantic reasoning without requiring hierarchy.

The advantage of hierarchical routing is the flexibility for scaling and defining a group of specialized AI agents and their “supervisor” (master agent), who ideally fall into the same professional domain. You can define layers of such a hierarchy; however, faulty decisions can propagate and negatively impact the final outcome.
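As a rough illustration of tiered decision making, the following sketch builds a two-level hierarchy with rule-based supervisors. The `Supervisor` class, the department names, and the keyword checks are hypothetical; any supervisor's `choose` function could equally be an LLM call.

```python
from typing import Callable, Dict, Union

Worker = Callable[[str], str]


class Supervisor:
    """Picks one child (a worker or another supervisor) for each query."""

    def __init__(
        self,
        name: str,
        choose: Callable[[str], str],
        children: Dict[str, Union["Supervisor", Worker]],
    ) -> None:
        self.name = name
        self.choose = choose
        self.children = children

    def handle(self, query: str) -> str:
        child = self.children[self.choose(query)]
        # Delegate down the hierarchy until a worker is reached.
        if isinstance(child, Supervisor):
            return child.handle(query)
        return child(query)


# Second tier: a finance supervisor with two specialized workers.
finance = Supervisor(
    "finance",
    choose=lambda q: "forecast" if "forecast" in q.lower() else "report",
    children={
        "forecast": lambda q: f"[finance/forecast] {q}",
        "report": lambda q: f"[finance/report] {q}",
    },
)

# Top tier: the high-level agent chooses between HR and finance.
root = Supervisor(
    "root",
    choose=lambda q: "hr" if "vacation" in q.lower() else "finance",
    children={"hr": lambda q: f"[hr] {q}", "finance": finance},
)
```

Note that a wrong choice at the top tier cannot be corrected further down, which is the error-propagation risk described above.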

Auction-based Routing

In auction-based routing, multiple agents each “bid” a score that reflects their confidence in handling the given query, and the highest bidder receives the request. It’s a “may the best agent win” approach. Auction-based routing is a good choice when the use case spans overlapping areas of expertise. An example is a group of legal domain expert agents, each specializing in a particular branch of law. When a legal query comes in, each lawyer agent bids a score representing its confidence, and the highest bidder handles the query on the assumption that its specialization is the best match. The bidding process adds latency and can add cost, because every candidate agent must evaluate the query before one is chosen.
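The bidding mechanism can be sketched as follows. This is an illustration under simplifying assumptions: each agent's “confidence” is just the fraction of its keywords found in the query, and the agent names, keyword lists, and the `min_bid` threshold are invented for the example. Real agents would more likely produce a confidence score from a model.

```python
from typing import Callable, Dict, List, Tuple


def keyword_bidder(keywords: List[str]) -> Callable[[str], float]:
    # Returns a bidding function whose confidence is the share of the
    # agent's keywords that appear in the query (a toy heuristic).
    def bid(query: str) -> float:
        text = query.lower()
        return sum(1 for k in keywords if k in text) / len(keywords)
    return bid


# Hypothetical legal specialist agents, represented only by their bidders.
BIDDERS: Dict[str, Callable[[str], float]] = {
    "tax_law": keyword_bidder(["tax", "deduction", "audit"]),
    "employment_law": keyword_bidder(["contract", "termination", "employee"]),
    "ip_law": keyword_bidder(["patent", "trademark", "copyright"]),
}


def run_auction(query: str, min_bid: float = 0.3) -> Tuple[str, float]:
    # Every agent bids; this is where the extra latency and cost come from.
    bids = {name: bid(query) for name, bid in BIDDERS.items()}
    winner = max(bids, key=lambda name: bids[name])
    # If even the best bid is weak, no agent is a good fit.
    if bids[winner] < min_bid:
        return "fallback", bids[winner]
    return winner, bids[winner]
```

The `min_bid` floor keeps a query that nobody is confident about from being handed to whichever agent happened to bid slightly above zero.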

Building an AI agent routing system

Here is a hands-on coding example of the most straightforward routing strategy: rule-based routing. For that, you can use LangGraph, a popular AI agent framework.

We are implementing an online learning center agent where students sign up to get help with their homework. After signing up, users can submit their questions, and the system will automatically route them to the right tutor. Let’s consider three tutors for this exercise.

- An AI mathematics tutor who helps with math homework, physics problems, and any problem that includes formulas, calculations, and numbers.

- An AI language tutor specializing in English and French grammar, writing, vocabulary, and more.

- A general AI tutor that answers all other questions like history, geography, biology, etc., and anything not related to the above two specializations. It means that a question is sent to a general tutor when it doesn’t fit into the domain of expertise of math and language tutors.

First, let’s install the necessary dependencies. For this example, you only need to install LangGraph and the OpenAI client.

    pip install -U langgraph
    pip install "openai>=1.0.0"

Import the required libraries and initialize the OpenAI client by providing your API key directly. However, loading the API key from your environment is generally recommended for better security and flexibility.

    from openai import OpenAI
    from langgraph.graph import StateGraph, END

    # Placeholder key for illustration only; prefer reading it from the environment.
    client = OpenAI(api_key="<INSERT_YOUR_OPENAI_API_KEY_HERE>")

Define a state object. The state object tells LangGraph the shape of the data that you will be passing between nodes.

    from typing import TypedDict

    class LearningState(TypedDict):
        input: str   # the student's question
        output: str  # the tutor's answer
        next: str    # the route chosen by the router node

Now, let’s define the tutor functions. For the sake of simplicity, the math and language tutors return a fixed prefix concatenated with the question asked. You will use an OpenAI call to simulate the general tutor.

    def math_tutor(state: LearningState) -> LearningState:
        # Simulated tutor: echoes the question with a fixed prefix.
        question = state["input"]
        response = f"[Math Tutor] Let's solve this together: '{question}'"
        return {"input": question, "output": response}

    def language_tutor(state: LearningState) -> LearningState:
        # Simulated tutor: echoes the question with a fixed prefix.
        question = state["input"]
        response = f"[Language Tutor] Great question about language: '{question}'"
        return {"input": question, "output": response}

    def general_tutor(state: LearningState) -> LearningState:
        # Forwards the question to an OpenAI chat model.
        question = state["input"]
        response = client.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=[{"role": "user", "content": question}],
            temperature=0
        )
        answer = response.choices[0].message.content
        return {"input": question, "output": f"[General Tutor] {answer}"}

Here’s the key to rule-based routing – the routing function. This function has an if-else statement with hard-coded conditions determining the agent to route the question to.

    def route_question(state: LearningState) -> LearningState:
        # Lowercase once so the keyword checks are case-insensitive.
        question = state["input"].lower()

        math_keywords = ["math", "solve", "+", "-", "*", "/", "equation", "physics", "calculate", "integral", "derivative"]
        language_keywords = ["english", "french", "translate", "grammar", "sentence", "spelling", "language"]

        # Substring matching; the first rule that matches wins.
        if any(word in question for word in math_keywords):
            next_node = "math"
        elif any(word in question for word in language_keywords):
            next_node = "language"
        else:
            next_node = "general"
        return {"input": state["input"], "output": "", "next": next_node}

Now that the functions are defined, you can build the graph by adding the necessary nodes and edges, along with a conditional edge where a function determines which node to go to after the router.

    graph = StateGraph(LearningState)

    # Register the three tutors and the router as nodes.
    graph.add_node("math", math_tutor)
    graph.add_node("language", language_tutor)
    graph.add_node("general", general_tutor)
    graph.add_node("router", route_question)

    # After the router runs, read its decision and jump to the matching tutor.
    graph.add_conditional_edges(
        "router",
        lambda state: state["next"],
        {
            "math": "math",
            "language": "language",
            "general": "general"
        }
    )

    # Every request enters at the router and ends after one tutor responds.
    graph.set_entry_point("router")
    graph.add_edge("math", END)
    graph.add_edge("language", END)
    graph.add_edge("general", END)

    app = graph.compile()

You have now defined the graph and compiled it into a runnable. You can run it with the compiled graph’s invoke method. Below is a list of examples to test how well the rule-based routing performs.

    examples = [
        "Can you solve 3x + 7 = 16?",
        "Translate 'Good morning' to French",
        "Who invented the microscope?",
        "Check grammar: 'He go to school'",
        "What is the integral of x squared?",
    ]

    for question in examples:
        print(f"Student: {question}")
        result = app.invoke({"input": question})
        print("Tutor:", result["output"])
        print("-" * 50)

The output should resemble the following; the general tutor’s answer comes from the model and may vary between runs. The output shows that each question is routed to the appropriate agent.

    Student: Can you solve 3x + 7 = 16?
    Tutor: [Math Tutor] Let's solve this together: 'Can you solve 3x + 7 = 16?'
    --------------------------------------------------
    Student: Translate 'Good morning' to French
    Tutor: [Language Tutor] Great question about language: 'Translate 'Good morning' to French'
    --------------------------------------------------
    Student: Who invented the microscope?
    Tutor: [General Tutor] The microscope was invented by Dutch spectacle maker Zacharias Janssen and his father Hans in the late 16th century.
    --------------------------------------------------
    Student: Check grammar: 'He go to school'
    Tutor: [Language Tutor] Great question about language: 'Check grammar: 'He go to school''
    --------------------------------------------------
    Student: What is the integral of x squared?
    Tutor: [Math Tutor] Let's solve this together: 'What is the integral of x squared?'
    --------------------------------------------------

No-code and low-code AI agent routing alternative

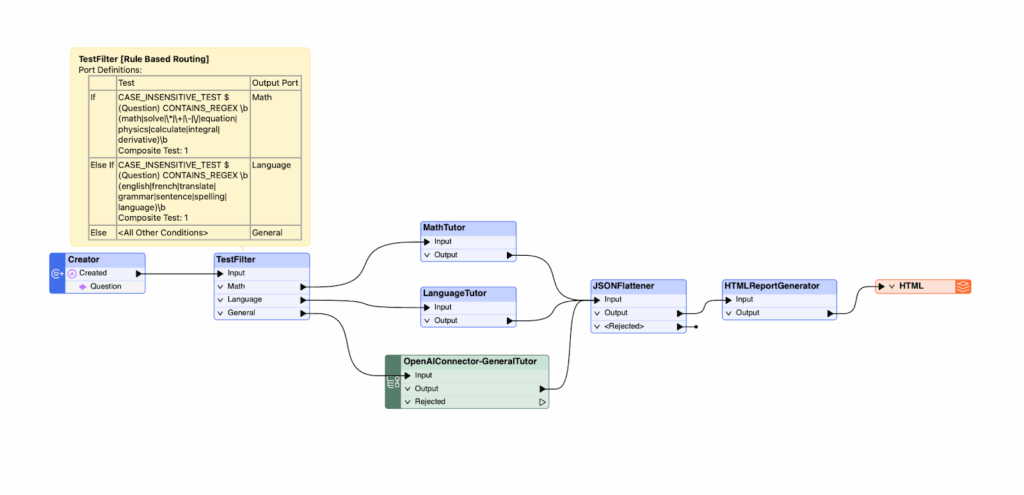

Implementing rule-based agent routing using LangGraph took only a few dozen lines of code. Safe Software’s FME, a low-code/no-code platform, offers a more streamlined approach that achieves the same outcome with just a few clicks. Its drag-and-drop interface accelerates the development process and provides a high-level, visual overview of the workflow components.

For example, to build the routing workflow implemented above in LangGraph, you only need to drag and drop a few blocks in the user interface and configure the prompts. Here is how it comes together in the FME Workbench application.

The implementation in FME Workbench involves connecting several transformers. The first transformer, named ‘Creator’, takes the user input and feeds it into a text filter. The ‘TextFilter’ transformer applies the routing rules and sends the query to ‘MathTutor’, ‘LanguageTutor’, or a ‘GeneralTutor’ backed by OpenAI. Implementing the general tutor is as simple as selecting the OpenAI transformer from the list of FME transformers and configuring the required prompt and API key. The outputs are then combined and written out as a JSON report. All of this takes only a few drag-and-drop operations within FME Workbench.

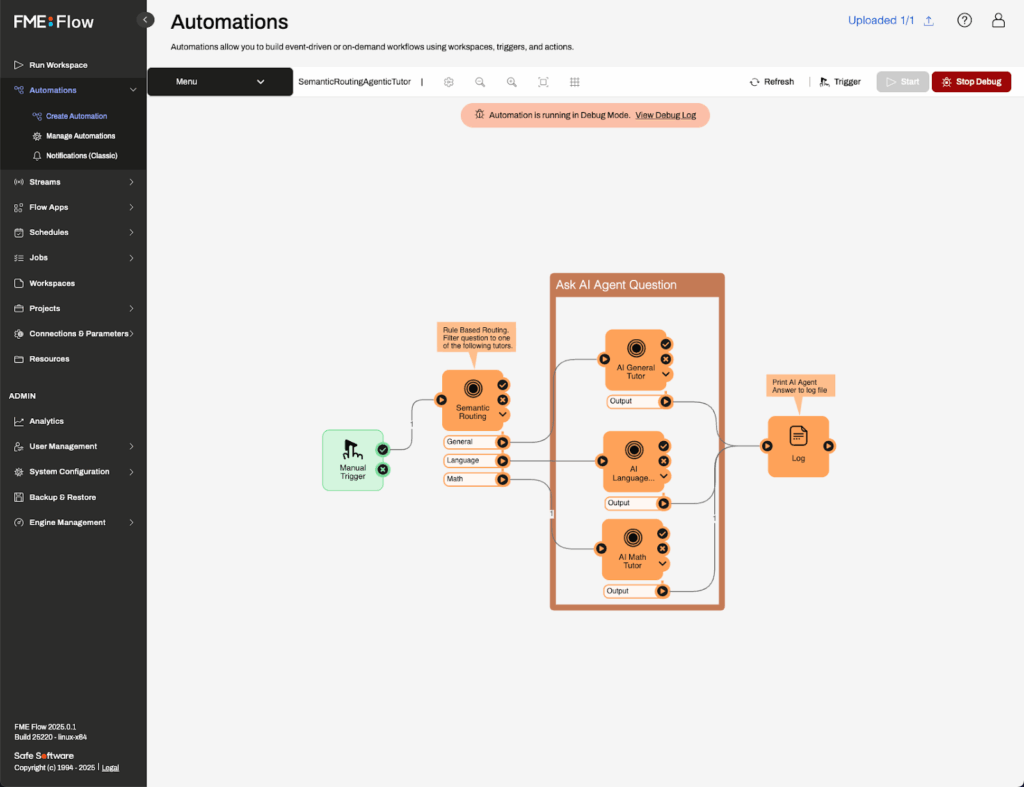

Once the design is done in FME Workbench, the application can be deployed to the cloud-based FME Flow environment, where it can be run. Deployment is done by uploading the files created in FME Workbench to FME Flow. Once deployed, users can use the FME Flow interface to run and monitor the agentic application.

Best practices for implementing agent routing

You can follow several best practices to ensure the effectiveness of your AI agent routing implementation.

Use unambiguous routing criteria with guardrails

Ambiguous routing criteria can lead to errors that are hard to debug. Define clear, preferably distinct conditions for each routing path to decrease ambiguity and, ideally, achieve a one-to-one mapping of an incoming user request and paths. Implement a default or fall-back path to handle ambiguous queries that do not precisely fall into defined routes. Also, document the reasoning behind each routing decision for explainability and future troubleshooting.

Measure the accuracy of routing separately

Define metrics specifically for the routing logic. Metrics calculated on the end-to-end system can be misleading, because a wrong final answer may come from either a routing mistake or a weak agent. Since routing is a key component, measure its performance independently, with a clear metric such as the share of queries sent to the expected route. A good approach is to design a diverse test set that covers both common and edge cases and use it to validate the routing logic.
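One simple way to do this is to score the router against a small labeled test set, independent of the agents behind it. The sketch below is illustrative: `toy_router`, the test queries, and their expected labels are assumptions made up for this example.

```python
from collections import Counter, defaultdict
from typing import Callable, Dict, List, Tuple


def evaluate_router(
    router: Callable[[str], str],
    test_set: List[Tuple[str, str]],
) -> Tuple[float, Dict[str, Counter]]:
    """Return overall accuracy and a confusion table (expected -> predicted counts)."""
    confusion: Dict[str, Counter] = defaultdict(Counter)
    correct = 0
    for query, expected in test_set:
        predicted = router(query)
        confusion[expected][predicted] += 1
        correct += int(predicted == expected)
    return correct / len(test_set), confusion


def toy_router(query: str) -> str:
    # Hypothetical keyword router used only to demonstrate the evaluation.
    text = query.lower()
    if "solve" in text or "integral" in text:
        return "math"
    if "grammar" in text or "translate" in text:
        return "language"
    return "general"


# Labeled queries, including an edge case the keyword rules miss.
TEST_SET = [
    ("Can you solve 3x + 7 = 16?", "math"),
    ("Translate 'Good morning' to French", "language"),
    ("Who invented the microscope?", "general"),
    ("What is 12 times 4?", "math"),
]

accuracy, confusion = evaluate_router(toy_router, TEST_SET)
print(f"Routing accuracy: {accuracy:.2f}")
```

The confusion table shows where misroutes go, not just how many there are. Here the arithmetic question phrased without a keyword ends up with the general tutor.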

Consider the cost of routing

An agent with a router-based architecture sends every input it receives through the additional routing layer. When the router itself is an LLM, this means at least two LLM calls per request, compared to one in a single-agent system. It is essential to weigh the computational expense of a sophisticated routing design against the benefits of a simpler one. Consider factors such as response time, resource utilization, and model expenditure when choosing a routing architecture.

Have a fallback route in case there are no feasible routes

Deploying agents to production in a consumer-facing mode requires several precautions to ensure that users do not receive an erroneous response because of a routing failure. Developers should always implement a reliable fall-back or default path to handle requests when specialized agents are unavailable or not the right fit for the query. Ensure the fall-back path takes all necessary precautions and produces a helpful, informative response.
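A fall-back path can be as small as a wrapper around the routing call. The sketch below is one possible shape, with hypothetical names (`route_with_fallback`, `AGENTS`, `fallback_agent`); it covers both an unknown route and an agent that fails at runtime.

```python
from typing import Callable, Dict

Agent = Callable[[str], str]


def fallback_agent(query: str) -> str:
    # Hypothetical default response; keep it helpful rather than a bare error.
    return "I could not find a specialist for that. Could you add more detail?"


def broken_agent(query: str) -> str:
    # Simulates a specialized agent that is currently unavailable.
    raise RuntimeError("agent unavailable")


AGENTS: Dict[str, Agent] = {
    "math": lambda q: f"[math] {q}",
    "broken": broken_agent,
}


def route_with_fallback(
    query: str,
    router: Callable[[str], str],
    agents: Dict[str, Agent],
    fallback: Agent,
) -> str:
    try:
        agent = agents.get(router(query))
        if agent is None:
            # The router produced a route that no agent is registered for.
            return fallback(query)
        return agent(query)
    except Exception:
        # Broad catch on purpose: any routing or agent failure should
        # degrade to the fallback instead of surfacing to the user.
        return fallback(query)
```

In a real deployment you would also log the failure before falling back, so that misroutes and outages remain visible.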

Explore adding feedback loops to improve routing

End users are the best judges of an agent’s performance. Capturing their experience and feeding it back into the routing logic helps improve the agent continuously. Introduce a feedback mechanism with well-defined success metrics that lets the user evaluate the provided answer and, by extension, the routing decision. The mechanism can be as simple as an ‘agree’ or ‘disagree’ button shown with every response from the agent. The collected feedback can later be used to refine the routing strategy.
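As an illustration, the agree/disagree votes could be tallied per route to flag routes that may need attention. The `FeedbackLog` class and its thresholds (`min_votes`, `max_rate`) are hypothetical choices for this sketch, not a prescribed design.

```python
from collections import defaultdict
from typing import Dict, List, Optional


class FeedbackLog:
    """Collects agree/disagree votes per route (hypothetical helper)."""

    def __init__(self) -> None:
        self._votes: Dict[str, Dict[str, int]] = defaultdict(
            lambda: {"agree": 0, "disagree": 0}
        )

    def record(self, route: str, agreed: bool) -> None:
        self._votes[route]["agree" if agreed else "disagree"] += 1

    def agreement_rate(self, route: str) -> Optional[float]:
        votes = self._votes.get(route)
        if not votes:
            return None  # no feedback recorded for this route yet
        total = votes["agree"] + votes["disagree"]
        return votes["agree"] / total if total else None

    def routes_needing_review(self, min_votes: int = 5, max_rate: float = 0.6) -> List[str]:
        # Flag routes with enough votes and a low share of 'agree' responses.
        flagged = []
        for route, votes in self._votes.items():
            total = votes["agree"] + votes["disagree"]
            if total >= min_votes and votes["agree"] / total < max_rate:
                flagged.append(route)
        return sorted(flagged)
```

A low agreement rate does not prove the router is at fault, since the agent itself may be weak, so flagged routes are a starting point for investigation rather than a verdict.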

Last thoughts

This article covered various types of AI agent routing and their advantages and disadvantages. You can build highly intelligent agentic systems with code frameworks like LangGraph. Open-source technologies provide flexibility but require a deep understanding of the frameworks and programming. No-code platforms like FME reduce the need for deep engineering expertise. FME helps implement agent routing with a few clicks and configurations, enabling organizations to harness the power of LLMs with their data.
