Bringing Large Geospatial Datasets to the Web

Last week Dale wrote about the problems surrounding large geospatial datasets. That got me thinking about them from another angle: delivering them to the web browser.

The web has evolved at a ferocious rate over the past few years, and the applications people are now building are phenomenal. The shift to the web within the geospatial world has also been dramatic, with web mapping frameworks such as the ArcGIS Server JavaScript API, Bing, Google Maps and OpenLayers putting spatial capabilities at the fingertips of ordinary web developers. Yet as a GIS professional, I still don’t live in my web browser the way I do in other areas of my work. I think one of the main issues holding GIS applications back from moving fully to the web is the size and complexity of the datasets we work with.

Google was the first mainstream company to bring large geospatial datasets to the web when it launched Google Maps back in 2005. Google Maps delivers the data seamlessly by serving up many small tiles, which the client (web browser) then reassembles into one big image. Most other mapping frameworks have since adopted similar methods.
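To make the tiling idea concrete, here is a minimal sketch of how a client might work out which tiles cover its current view. It assumes the widely used Web Mercator "XYZ" tile scheme; the function names `lonlat_to_tile` and `tiles_for_bbox` are my own for illustration and are not part of any particular framework.

```python
import math


def lonlat_to_tile(lon, lat, zoom):
    """Return the (x, y) index of the tile containing a lon/lat point.

    Assumes the Web Mercator XYZ scheme: 2**zoom tiles per side,
    with (0, 0) at the top-left (north-west) corner.
    """
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so that lon=180 or extreme latitudes stay inside the grid.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tiles_for_bbox(west, south, east, north, zoom):
    """List the (zoom, x, y) tiles needed to cover a bounding box.

    This sketch does not handle boxes that cross the antimeridian.
    """
    x_min, y_min = lonlat_to_tile(west, north, zoom)
    x_max, y_max = lonlat_to_tile(east, south, zoom)
    return [
        (zoom, x, y)
        for x in range(x_min, x_max + 1)
        for y in range(y_min, y_max + 1)
    ]
```

The client only ever requests the handful of tiles returned by something like `tiles_for_bbox`, which is why the approach stays fast no matter how large the underlying dataset is.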

Serving static map tiles is very fast, but it just doesn’t cut it for GIS professionals, who often want to see and manipulate vector data (points, polylines and polygons). Displaying vector data as overlays on your mapping framework of choice is easy: several commercial and open source solutions let you create a mapping service and publish your data in a web-friendly format such as KML or GeoRSS. The problem arises once this data reaches the web browser.

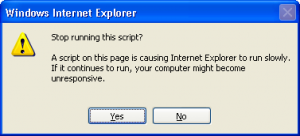

The limitation actually lies in the way the web browser stores and manipulates the data. When we build complex web applications, we rely on JavaScript (you can use Flash, Java, etc. too, but that’s a whole other discussion) to add the data to the map and manipulate it. JavaScript has very limited access to processing power, and we always have to design for the weakest link, which is usually an old version of Internet Explorer (comparison of JavaScript processing times).

So we have established that working with large datasets in the web browser is difficult. However, there are several things we can do on both the client and the server side to mitigate the issue:

- Only return data that the user needs to see.

This is the single most important point. When requesting data, the client should send its current bounding box, and the service should be configured to read that box and return only the data within it or in its immediate proximity. You usually need to attach the client request to the mouse move event on the map in some way. Adding the zoom level to the request is also usually required (or you can configure this on the server): if the client sends a bounding box covering the whole of North America, you don’t want to return every point within that area. Instead you may want to show just one point per state that contains any data, and style it differently.

Another way to limit the data returned is to allow the client to pass filter parameters, such as ID or feature class, which help narrow down the selection further.
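As a rough server-side sketch of this idea, the function below filters an in-memory list of points by bounding box and optional feature class, and switches to one summary point per state when the client is zoomed out. Everything here is an assumption for illustration: the record layout (`lon`, `lat`, `state`, `feature_class`), the `MIN_DETAIL_ZOOM` threshold and the function names are hypothetical, and a real service would push the filter down into a spatial database query rather than loop in Python.

```python
from collections import defaultdict

# Hypothetical threshold: below this zoom level we return summaries only.
MIN_DETAIL_ZOOM = 6


def in_bbox(point, bbox):
    """True if the point lies inside bbox = (west, south, east, north)."""
    west, south, east, north = bbox
    return west <= point["lon"] <= east and south <= point["lat"] <= north


def query_points(points, bbox, zoom, feature_class=None):
    """Return only what the client needs for its current view."""
    hits = [
        p for p in points
        if in_bbox(p, bbox)
        and (feature_class is None or p["feature_class"] == feature_class)
    ]
    if zoom >= MIN_DETAIL_ZOOM:
        return hits

    # Zoomed out: collapse the hits to one summary point per state,
    # placed at the mean position and flagged so the client can style it.
    groups = defaultdict(list)
    for p in hits:
        groups[p["state"]].append(p)
    return [
        {
            "state": state,
            "lon": sum(p["lon"] for p in group) / len(group),
            "lat": sum(p["lat"] for p in group) / len(group),
            "count": len(group),
            "aggregated": True,
        }
        for state, group in sorted(groups.items())
    ]
```

The client would call something like this each time the map view changes, passing its current bounding box and zoom level, and replace the overlay with whatever comes back.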

- Only return the exact attributes you need.

Whatever you return to the client, the browser is going to have to store in memory. Therefore, the less you send back the better. Make sure you strip out all unrequired attributes; do your users really need to see the OBJ_ID column?

- Simplify the geometry.

If you are working with polylines or polygons, you may want to think about simplifying your geometry. For example, if the road network for an entire state is stored in the database as derived from survey points, you likely don’t need that level of detail to overlay the roads on a map. A generalization technique such as line simplification can reduce the number of points considerably.
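The last two points, trimming attributes and simplifying geometry, can be sketched together. The code below assumes GeoJSON-like feature dictionaries and uses the Douglas-Peucker algorithm as one possible generalization technique; that choice is mine, not something the techniques above depend on, and the function names are hypothetical.

```python
import math


def trim_attributes(feature, keep):
    """Return a copy of the feature holding only the whitelisted properties."""
    props = {k: v for k, v in feature["properties"].items() if k in keep}
    return {**feature, "properties": props}


def _point_segment_distance(p, a, b):
    """Distance from point p to the line segment a-b (planar coordinates)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp to its end points.
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def simplify(coords, tolerance):
    """Douglas-Peucker simplification of a list of (x, y) tuples.

    Vertices that lie within `tolerance` of the simplified line are
    dropped. The tolerance is in the same units as the coordinates.
    """
    if len(coords) < 3:
        return list(coords)
    first, last = coords[0], coords[-1]
    index, max_dist = 0, 0.0
    for i in range(1, len(coords) - 1):
        d = _point_segment_distance(coords[i], first, last)
        if d > max_dist:
            index, max_dist = i, d
    if max_dist <= tolerance:
        return [first, last]
    # Keep the farthest vertex and simplify each half recursively.
    left = simplify(coords[: index + 1], tolerance)
    right = simplify(coords[index:], tolerance)
    return left[:-1] + right
```

For example, `trim_attributes(road, {"name"})` drops the OBJ_ID column before the feature leaves the server, and `simplify(road["geometry"]["coordinates"], tolerance)` thins out the vertices. A sensible tolerance depends on the zoom level, so it is worth experimenting with rather than hard-coding.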

In my personal opinion the web is still a long way from being able to handle extremely large datasets (though companies like WeoGeo are doing some innovative things). Using the techniques outlined above, however, you can allow your users to work with large datasets by ensuring they only work with a small subset of the data at any one time. In my next installment we will look at emerging technologies in HTML5 which will help us bring large datasets to the web and mobile devices.

These are the main techniques I use when bringing large datasets to the web. Does anyone else have any other methods?