Key takeaways:

- Data quality describes whether data is accurate, complete, and trustworthy; data governance defines the policies and processes for managing it. Good data quality is the foundation of effective data governance.

- Automation is the only practical way to scale data quality and governance, and FME makes it possible to embed these controls directly into data pipelines.

- When quality and governance are built into data processes, organizations move from reactive cleanup to proactive, accountable data management.

Data validation and governance are essential for any organization that wants to trust its analytics, scale automation, adopt AI, and meet regulatory expectations. In our recent webinar, experts from Safe Software and IMGS explored how FME-powered automation makes data quality and governance achievable at scale. This blog recaps the key ideas. For a deep dive into the topic, including technical tips, watch the webinar recording: How Automation Powers Data Quality and Governance at Scale.

Data Quality vs. Data Governance

Data quality and data governance address different aspects of working with data. Data quality is concerned with how accurate, complete, consistent, valid, and timely your data is. In short, it determines whether the data can be trusted for analysis and decision-making. Data governance focuses on how data is managed across the organization, including the policies, processes, roles, and standards that ensure data is used securely and consistently.

In other words, data quality forms the foundation, while governance is the structure built on top. Without reliable data, even the most carefully designed governance framework will struggle to deliver value.

Why Automation Matters

Most organizations deal with fragmented data environments. Data flows in from many systems, quality checks are often manual or inconsistently applied, and problems are discovered only after the data has already been used. As data volumes and complexity increase, these approaches simply do not scale.

Automation changes this dynamic. By embedding quality and governance checks directly into data workflows, organizations can apply the same rules every time, catch issues early, and generate consistent evidence for auditing and compliance. Instead of relying on one-off fixes or tribal knowledge, data teams gain repeatable, measurable processes that hold up as the organization grows.

The Automation Platform: FME

FME provides an end-to-end platform for building, running, and operationalizing automated data workflows:

- Author data integration and validation logic in FME Form, for example checks on geometry, attributes, and schema.

- Run those workflows automatically in FME Flow, using schedules and triggers, with monitoring and notifications.

The FME Platform enables organizations to reliably move, transform, validate, and govern data at scale. It can connect to databases, files, APIs, cloud platforms, and emerging data types, allowing organizations to standardize quality and governance practices across their entire data landscape.

Improving Data Quality with Automation

Integrating Disconnected Data

A common source of poor data quality is fragmentation. Multiple datasets may represent the same real-world entities, each containing partial or conflicting information. Automated integration workflows can bring these sources together, resolve duplicates, standardize schemas, and create a more reliable single source of truth. Because these processes are automated, they can run consistently as new data arrives rather than relying on periodic cleanup efforts.
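
To make the idea concrete, here is a minimal sketch in plain Python (not FME itself) of merging two sources that describe the same entities. The match key, the field names, and the "first non-empty value wins" rule are all assumptions chosen for illustration; a real workflow would use whatever matching and precedence rules fit the data.

```python
def normalize_key(name: str) -> str:
    """Build a simple match key: lowercase and collapse whitespace."""
    return " ".join(name.lower().split())


def merge_sources(*sources):
    """Merge records sharing a match key; the first non-empty value wins.

    Conflicting non-empty values are collected instead of silently dropped.
    """
    merged = {}
    conflicts = []
    for source in sources:
        for record in source:
            key = normalize_key(record["name"])
            target = merged.setdefault(key, {})
            for field, value in record.items():
                if value in (None, ""):
                    continue  # partial record: nothing to contribute here
                if field not in target:
                    target[field] = value
                elif field != "name" and target[field] != value:
                    conflicts.append((key, field, target[field], value))
    return list(merged.values()), conflicts


# Hypothetical sample data: two systems holding partial asset records.
assets_a = [{"name": "Pump Station 1", "owner": "Water Dept", "installed": ""}]
assets_b = [
    {"name": "pump  station 1", "owner": "", "installed": "2014"},
    {"name": "Valve 7", "owner": "Water Dept", "installed": "2019"},
]

records, conflicts = merge_sources(assets_a, assets_b)
```

Returning the conflicts alongside the merged records keeps disagreements between systems visible, so they can be reviewed rather than hidden by the merge.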

Measuring and Reporting Data Quality

Data quality often feels subjective until it is measured. Automation makes it possible to calculate concrete metrics such as field completeness, validity rates, duplicate counts, and geometry errors. These metrics can then be published as data health reports, spreadsheets, or dashboard inputs, making quality visible across the organization.

Once quality is quantified, teams can track improvement over time, prioritize remediation work, and communicate clearly about the fitness of a dataset for specific use cases.
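
As an illustration of what measuring quality can look like, the following plain-Python sketch computes field completeness, a validity rate, and a duplicate count for a small table. It is not FME code; the function name, field names, and the validity rule are hypothetical, and geometry checks are left out.

```python
def quality_metrics(records, required_fields, validators, key_fields):
    """Compute simple data quality metrics for a list of dict records."""
    total = len(records)
    if total == 0:
        return {"completeness": {}, "validity": {}, "duplicate_count": 0}

    def present(record, field):
        return record.get(field) not in (None, "")

    # Completeness: share of records with a non-empty value per field.
    completeness = {
        field: sum(1 for r in records if present(r, field)) / total
        for field in required_fields
    }

    # Validity: share of non-empty values that pass the field's rule.
    validity = {}
    for field, is_valid in validators.items():
        values = [r[field] for r in records if present(r, field)]
        validity[field] = (
            sum(1 for v in values if is_valid(v)) / len(values) if values else None
        )

    # Duplicates: records whose key repeats one already seen.
    seen = set()
    duplicate_count = 0
    for r in records:
        key = tuple(r.get(f) for f in key_fields)
        if key in seen:
            duplicate_count += 1
        else:
            seen.add(key)

    return {
        "completeness": completeness,
        "validity": validity,
        "duplicate_count": duplicate_count,
    }


# Hypothetical sample data.
rows = [
    {"id": "A1", "status": "active", "diameter_mm": "150"},
    {"id": "A2", "status": "", "diameter_mm": "-20"},
    {"id": "A2", "status": "retired", "diameter_mm": "200"},
    {"id": "A3", "status": "active", "diameter_mm": ""},
]

report = quality_metrics(
    rows,
    required_fields=["status", "diameter_mm"],
    validators={"diameter_mm": lambda v: v.isdigit() and int(v) > 0},
    key_fields=["id"],
)
```

The resulting dictionary can be written to a spreadsheet or fed to a dashboard, which is the kind of shared, numeric output the section describes.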

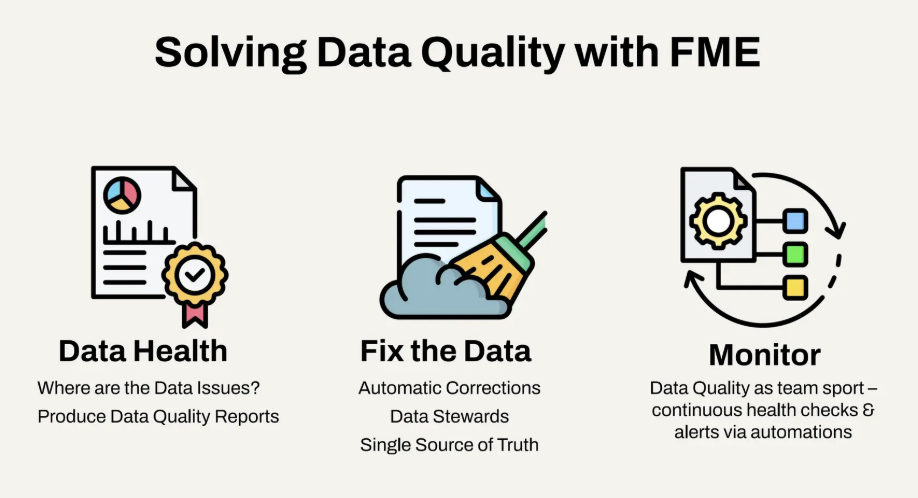

Validating and Handling Issues

FME includes a wide range of validation and cleansing capabilities that allow organizations to enforce business rules, detect invalid data, and identify inconsistencies. In some scenarios, issues can be corrected automatically. In others, automation is used to flag problems and route them back to data stewards so they can be fixed at the source. This approach avoids silently masking issues downstream while still preventing bad data from spreading.
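
The split between automatic correction and routing back to stewards can be sketched as follows. This is plain Python under assumed rules (a hypothetical `status` field with an allowed list, and a required `id`), not a description of how FME implements validation.

```python
def validate_and_route(records, allowed_status):
    """Apply safe fixes automatically; flag everything else for review."""
    clean, flagged = [], []
    for record in records:
        fixed = dict(record)
        # Safe automatic correction: trim whitespace and normalise case.
        fixed["status"] = str(fixed.get("status", "")).strip().lower()

        issues = []
        if not fixed.get("id"):
            issues.append("missing id")
        if fixed["status"] not in allowed_status:
            issues.append(f"status '{fixed['status']}' not in allowed list")

        if issues:
            # Keep the original record so stewards see the source values.
            flagged.append({"record": record, "issues": issues})
        else:
            clean.append(fixed)
    return clean, flagged


# Hypothetical sample data.
incoming = [
    {"id": "A1", "status": " Active "},
    {"id": "A2", "status": "unknown"},
    {"id": "", "status": "retired"},
]

clean, flagged = validate_and_route(incoming, allowed_status={"active", "retired"})
```

Only formatting problems are corrected in place. Anything that requires a judgment call is held back with a reason attached, so it is neither passed downstream nor silently changed.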

Continuous Monitoring

Because data is constantly changing, quality checks cannot be a one-time exercise. Automated workflows can run on ingestion, on a schedule, or in response to upstream changes, ensuring that regressions are caught early. Alerts and reports keep teams informed without requiring constant manual oversight.
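
One simple way to catch regressions is to compare the metrics from the current run with those from the previous run. The sketch below is plain Python with a hypothetical tolerance and metric names; how runs are scheduled and how alerts are delivered is outside its scope.

```python
def find_regressions(previous, current, tolerance=0.02):
    """Return metrics whose score dropped by more than `tolerance`.

    Both arguments map a metric name to a score between 0 and 1.
    """
    return {
        name: (previous[name], score)
        for name, score in current.items()
        if name in previous and previous[name] - score > tolerance
    }


# Hypothetical scores from two consecutive runs.
last_run = {"completeness": 0.98, "validity": 0.95}
this_run = {"completeness": 0.90, "validity": 0.95}

regressions = find_regressions(last_run, this_run)
```

A non-empty result is the signal to send an alert; an empty one means the run can complete quietly.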

Data Quality Is a Shared Responsibility

Data quality is a team effort. It involves the people who create data, those who integrate and manage it, and those who analyze and consume it. Automation supports this collaboration by producing shared, objective outputs, such as reports and dashboards, that everyone can understand and act on.

From Quality to Governance

Once data quality processes are automated and measurable, governance becomes far more achievable. Many governance challenges stem from a lack of visibility: unclear lineage, inconsistent standards, or uncertainty about who owns which data. Automation helps address these issues by embedding governance controls directly into data pipelines rather than relying solely on documentation.

Automating Metadata and Data Understanding

Metadata is essential for governance, but it is often neglected because maintaining it manually is time-consuming. With FME, organizations can automatically scan datasets to extract schema information, generate data dictionaries, and analyze field distributions. Because this metadata is derived directly from live data, it stays current and reflects reality rather than becoming stale documentation.

The resulting outputs, such as spreadsheets or shared reports, make it easier for analysts, engineers, and governance teams to understand what data exists, how it is structured, and how it should be used.
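
To show what deriving metadata from live data can mean in practice, here is a small plain-Python sketch that builds a data dictionary from records: an inferred type, an empty count, and the most common values per field. The type inference rules and field names are simplifying assumptions, and this is not FME's own mechanism.

```python
from collections import Counter


def infer_type(value):
    """Classify a raw value as empty, integer, float, or string."""
    if value in (None, ""):
        return "empty"
    for label, cast in (("integer", int), ("float", float)):
        try:
            cast(value)
            return label
        except (ValueError, TypeError):
            continue
    return "string"


def build_data_dictionary(records):
    """Summarise each field: dominant type, empties, distinct and top values."""
    fields = {}
    for record in records:
        for name, value in record.items():
            info = fields.setdefault(name, {"types": Counter(), "values": Counter()})
            kind = infer_type(value)
            info["types"][kind] += 1
            if kind != "empty":
                info["values"][str(value)] += 1

    dictionary = []
    for name, info in fields.items():
        non_empty = {t: c for t, c in info["types"].items() if t != "empty"}
        dictionary.append({
            "field": name,
            "inferred_type": max(non_empty, key=non_empty.get) if non_empty else "empty",
            "empty_count": info["types"]["empty"],
            "distinct_values": len(info["values"]),
            "top_values": info["values"].most_common(3),
        })
    return dictionary


# Hypothetical sample data.
rows = [
    {"id": "1", "material": "PVC", "length_m": "12.5"},
    {"id": "2", "material": "PVC", "length_m": ""},
    {"id": "3", "material": "steel", "length_m": "8.0"},
]

data_dictionary = build_data_dictionary(rows)
```

Because the summary is recomputed from the data on every run, it cannot drift away from what the dataset actually contains.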

Supporting Compliance and Accountability

Strong data governance is ultimately about accountability. Automated workflows make it possible to demonstrate that checks were performed, standards were enforced, and non-compliant data was handled appropriately. This is especially important when working with regulated data, personal information, or external data suppliers, where organizations must be able to show how data is controlled and protected.
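
Evidence that checks were performed can be as simple as a structured record written on every run. The following plain-Python sketch shows one possible shape for such a record; the field names and the use of a content hash are assumptions for illustration, not a prescribed audit format.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_entry(dataset_name, records, check_results):
    """Build an audit record tying check outcomes to the exact data checked."""
    # Hash a canonical serialisation so the entry identifies the data version.
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return {
        "dataset": dataset_name,
        "checked_at": datetime.now(timezone.utc).isoformat(),
        "record_count": len(records),
        "content_sha256": hashlib.sha256(payload).hexdigest(),
        "checks": check_results,
        "passed": all(check["passed"] for check in check_results),
    }


# Hypothetical dataset and check outcomes.
entry = audit_entry(
    "parcels",
    [{"id": 1, "status": "active"}],
    [
        {"rule": "id present", "passed": True},
        {"rule": "status in allowed list", "passed": False},
    ],
)
```

Appending entries like this to a log gives auditors a timestamped trail of which rules ran against which version of the data and what the outcome was.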

Conclusion

Automation is essential for data quality and governance at scale. By automating processes with FME, organizations can build trust in their data, reduce risk, and unlock more value from their analytics and AI initiatives.
