AI Agent Tools: Tutorial & Example

Large language models (LLMs) have evolved far beyond traditional tasks like document summarization and email drafting. Today, they power AI agents capable of generating complex SQL queries, suggesting production-ready code changes, performing web searches, interpreting bill images for automated reimbursements, and integrating with external applications to process data within agentic workflows.

AI agents are becoming standard tools across both technical and nontechnical organizations. What began with tools like ChatGPT has grown into a broader ecosystem that includes the Model Context Protocol (MCP), GitHub Copilot, Claude Code, Cursor, and others. Agents now handle tasks ranging from data integration to code editing, and domain-specific agents exist for SQL, Excel, and many other applications.

In this article, we review what AI agents are, how tool calling works within agent frameworks, and how these capabilities can be applied across different use cases. We also implement a simple LangGraph-based agent in Python that uses tools such as web search and image generation. We then give a quick overview of FME by Safe Software, a no-code platform for technical professionals with little or no prior coding experience that lets users build agentic workflows from hundreds of prebuilt tools and integrations. Next, we rebuild the image generation part of the LangGraph example in FME to show how easy it is in a no-code environment. We conclude by sharing our closing thoughts and outlining some best practices for integrating tool-calling capabilities into your own agents.

Summary of key concepts related to AI agent tools

| Concept | Description |
|---|---|
| What are AI agents? | AI agents are LLM-based autonomous systems that can dynamically plan, reason, and use appropriate tools from the environment to accomplish tasks. |
| What are AI agent tools? | AI agent tools enable AI agents to interact with various environments, databases, or external services, either through data inputs or APIs. They allow agents to gather and use additional information during ongoing tasks, resulting in more accurate and context-aware outcomes. |
| Leveraging tool calling and function calling capabilities for different use cases | The tool-calling capabilities of AI agents play a crucial role in improving the accuracy and creativity of agents working toward a specific goal. For example, SQL database chains let agents write SQL queries, run them to fetch data, and use the results in other processes. Similarly, code interpreters help agents perform mathematical calculations accurately, since language models are generally not reliable at calculation on their own. |
| Implementing a Python-based LangGraph agent with tool-calling capabilities | There are numerous frameworks for building AI agents from scratch in Python; popular ones include LangGraph, CrewAI, AutoGen, LangChain, and Semantic Kernel. Each has its own advantages and disadvantages. |
| Leveraging FME by Safe Software | Aggregating data from diverse sources with varying schemas and data types into a standard, structured format can be a complex and time-consuming task, especially for domain-specific applications. You can build these agentic workflows in Python, but FME by Safe Software provides a no-code solution that can help you get started quickly. |
| Best practices for building tool/function-calling AI agents | Using tool calling and function calling in custom domain-specific workflows requires proper setup. Each tool should have a clear definition, with guidance on when to use it and when not to. It is also essential to manage the agent's context carefully so that irrelevant information is not passed in, since it can lead to hallucinations or a drop in accuracy. |

Understanding AI agents and their components

AI agents are LLM-based autonomous systems that dynamically plan, reason, and use appropriate tools to accomplish tasks. Unlike traditional workflows requiring manual intervention at each step, agents are goal-oriented and create their own execution paths. This autonomy means their routes for solving identical problems may vary based on context and available information. For example, when you ask an agent to build a website for a GitHub repository, you provide the end goal and necessary tools without implementing prompt chains or manual LLM orchestration. The agent automatically plans, decides what steps to perform, and accomplishes the task through dynamic reasoning.

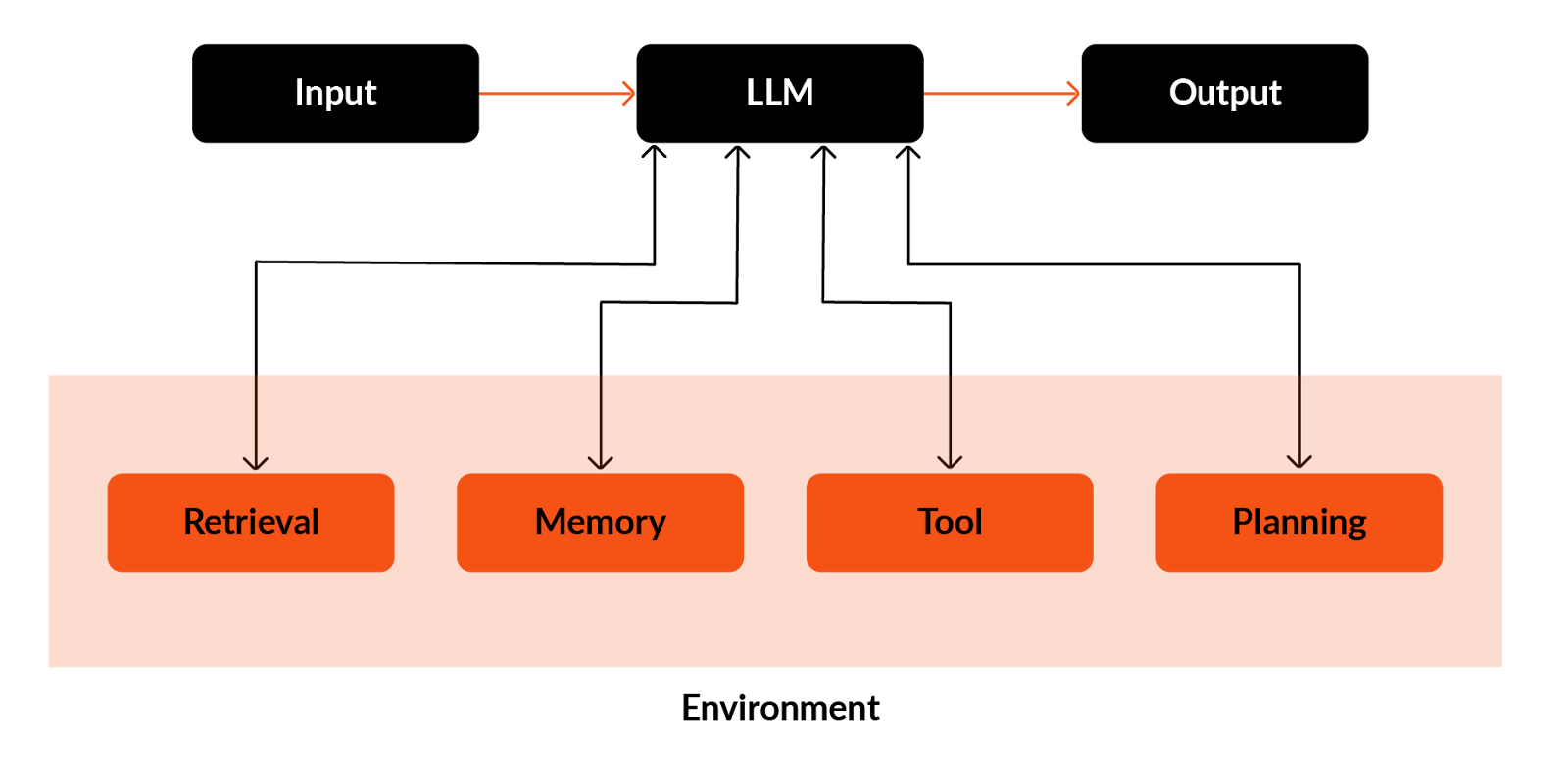

AI agent architecture

AI agent architecture extends beyond simple LLM calls, incorporating memory to retain conversation context, tool access for external actions, dynamic planning capabilities, and retrieval systems for knowledge base validation. These components work together to create self-reflective and adaptive systems:

- Retrieval: Agents access knowledge bases through semantic or vector search when sources are stored in vector databases. This improves factual accuracy by supplying current, domain-specific information that the model's training data, which is general and fixed at a cutoff date, may lack.

- Memory: Unlike standard LLM calls that lack access to prior messages, agents maintain context across interactions. This memory enables tracking previous developments and decisions, reducing hallucinations and improving response coherence throughout multi-step processes.

- Tool calling: This capability mirrors human problem-solving approaches, such as performing web searches, accessing SQL databases, generating images, or using Python for code execution. Tools are defined during agent initialization with clear descriptions of functionality and appropriate use cases.

- Planning: Planning works with memory to continuously update strategies based on observed outcomes. Agents can adjust their approach mid-process by analyzing errors, changing execution routes, and incorporating new information from web searches or documentation.

The importance of context engineering

The components described above enable agents to adapt and respond dynamically as they work toward their goals. However, they come with a significant trade-off: Each component adds information to the agent’s context window, creating potential challenges for memory management.

As context length grows, agents may struggle to process all available information effectively. Instead of reviewing the complete context, an agent might focus on only a portion, perhaps 60%, before making decisions or starting its planning process. This selective attention can lead to missed details or inconsistent responses.

Effective context engineering is critical to addressing these challenges. Developers must structure information clearly, providing only the necessary details for each specific task while storing additional context in metadata or knowledge bases. This approach maintains a manageable active context while ensuring that agents can access comprehensive information when needed.
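One simple way to keep the active context manageable is to trim conversation history to a budget while always keeping the system prompt. The sketch below assumes messages are dicts with role and content keys and uses a word count as a rough stand-in for a real tokenizer; the function names are hypothetical and not part of any framework.

```python
def estimate_tokens(text: str) -> int:
    """Very rough proxy for token count: one token per whitespace-separated word."""
    return len(text.split())


def trim_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep the system prompt plus the most recent messages that fit within `budget`."""
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]

    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: list[dict] = []
    # Walk backwards so the newest turns are kept first.
    for message in reversed(others):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break  # stop here so the retained history stays contiguous
        kept.append(message)
        used += cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize the attached quarterly report for me please."},
    {"role": "assistant", "content": "Revenue grew while costs stayed flat."},
    {"role": "user", "content": "What about churn?"},
]
print([m["content"] for m in trim_history(history, budget=15)])
```

Older turns that are dropped this way would typically be summarized or moved into a knowledge base, so the agent can still retrieve them when needed.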

What are AI agent tools, and how do they work with LLMs?

AI agent tools are systems that enable agents to interact with external environments, databases, or services through data inputs or API connections. The tools let agents gather and process additional information during task execution, providing them with more accurate and contextually relevant outcomes.

Understanding how tool calling operates within AI agents requires examining the tool definition and invocation process. Tools and function calls are defined during agent creation with descriptions that specify their purpose and the triggering conditions. Agents must receive explicit instructions about when and how to use each available tool.
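To make the idea of a tool definition concrete, here is a minimal sketch of what a decorator such as @tool derives from a function: a name, a description taken from the docstring, and a JSON Schema for the arguments, in roughly the shape OpenAI's Responses API uses for function tools. The helper function_to_tool_schema is hypothetical; real frameworks handle many more types and docstring formats.

```python
import inspect
from typing import get_type_hints

# Small mapping from Python annotations to JSON Schema types (a subset, for illustration).
_JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}


def function_to_tool_schema(func) -> dict:
    """Build an OpenAI-style function tool definition from a Python function (hypothetical helper)."""
    hints = get_type_hints(func)
    hints.pop("return", None)
    params = inspect.signature(func).parameters

    # Unannotated or unknown types fall back to "string" in this sketch.
    properties = {name: {"type": _JSON_TYPES.get(hints.get(name), "string")} for name in params}
    # Parameters without default values are marked as required.
    required = [name for name, p in params.items() if p.default is inspect.Parameter.empty]
    # The first docstring line becomes the description the model reads.
    doc = inspect.getdoc(func) or ""
    return {
        "type": "function",
        "name": func.__name__,
        "description": doc.splitlines()[0] if doc else "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def add(a: int, b: int) -> int:
    """Use this function when you have to add two integers.

    Args:
        a: First integer
        b: Second integer
    """
    return a + b


schema = function_to_tool_schema(add)
print(schema["name"], schema["parameters"]["required"])  # add ['a', 'b']
```

The description is the only thing the model sees about when to call the tool, which is why the docstring wording matters as much as the code.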

Tools can be categorized into two main types: prebuilt tools and custom tools. Let's look at both types using LangGraph, a popular AI agent framework.

Creating custom tools in LangGraph involves adding the @tool decorator to existing functions, marking them as agent-accessible capabilities. Consider a simple addition tool in LangGraph that processes two integers. The function includes a docstring that instructs the agent to use this tool when encountering two integer inputs requiring addition. The docstring serves as guidance for the agent, explaining what each tool accomplishes and when it should be activated.

```python
from langchain_core.tools import tool


@tool
def add(a: int, b: int) -> int:
    """Use this function when you have to add two integers.

    Args:
        a: First integer
        b: Second integer
    """
    return a + b
```

The second approach is to use prebuilt tools, such as web search, image generation, file search, or a code interpreter for running Python code and calculations. These are already widely used and can be integrated into your AI agent by adding them to its tools list. The example below wraps OpenAI's prebuilt web search tool so that the agent can call it; we cover these tools in more detail later in this article.

```python
from typing import Annotated

from langchain_core.tools import tool
from openai import OpenAI

openai_client = OpenAI()  # reads OPENAI_API_KEY from the environment


@tool
def web_search(query: Annotated[str, "Search query"]) -> str:
    """Search the web for current information. Use this for recent events or real-time data."""
    try:
        response = openai_client.responses.create(
            model="gpt-4o",
            tools=[{"type": "web_search_preview", "search_context_size": "medium"}],
            input=f"Search for: {query}",
        )
        # Scan output items from last to first and return the first text content found
        if hasattr(response, "output") and response.output:
            for item in reversed(response.output):
                if hasattr(item, "content") and item.content:
                    for content in item.content:
                        if hasattr(content, "text"):
                            return content.text
        return f"Search completed for: {query}"
    except Exception as e:
        return f"Error: {e}"
```

After the tools have been defined, they must be enabled so that the AI agent can use them when needed. This is usually done when the agent is initialized.

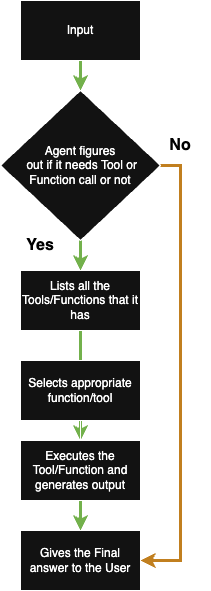

In the backend, when input is received, the agent first interprets the intent and extracts key information. It then reviews its available tools and, using the description the developer provided for each one, decides whether any tool is needed for the next step. If so, it calls the tool, adds the result to its context, and uses it to generate the output or plan the next step.

For example, consider a loan approval agent that must decide whether to approve a loan for a couple based on their geographical location, financial situation, and past inquiries. To calculate the household income, the husband's and wife's salaries must be added together, so the agent calls its add tool to compute the couple's total compensation. This illustrates how an agent uses its tool-calling ability to perform subtasks.
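The dispatch step in the loan scenario can be sketched without any LLM call. Here the tool call is hand-written to stand in for what a model might return; its id, name, and JSON-string arguments fields loosely mirror common chat API formats but are assumptions, and execute_tool_call is a hypothetical helper.

```python
import json


def add(a: int, b: int) -> int:
    """Use this function when you have to add two integers."""
    return a + b


# Registry of tools the agent may call, keyed by the name the model sees.
TOOLS = {"add": add}


def execute_tool_call(tool_call: dict) -> dict:
    """Run one model-requested tool call and package the result for the next model turn."""
    func = TOOLS.get(tool_call["name"])
    if func is None:
        return {"call_id": tool_call["id"], "output": f"Unknown tool: {tool_call['name']}"}
    # Models send arguments as a JSON string; decode them into keyword arguments.
    args = json.loads(tool_call["arguments"])
    return {"call_id": tool_call["id"], "output": str(func(**args))}


# Hypothetical call the model might emit: combine two salaries into household income.
call = {"id": "call_1", "name": "add", "arguments": '{"a": 85000, "b": 72000}'}
print(execute_tool_call(call))  # {'call_id': 'call_1', 'output': '157000'}
```

In a full agent, the returned dict would be appended to the conversation so the model can read the result and decide its next step.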

Exploring popular OpenAI prebuilt tools

OpenAI’s ready-made tools unlock new agent capabilities without the need for any bespoke integration work: You simply specify each tool’s type and settings when you initialize your agent. The sections that follow provide a detailed examination of these tools, complete with Python examples that demonstrate how to utilize them through the OpenAI API.

Note that while these are among the most popular tools across organizations, you're free to swap in alternatives from LangChain, Anthropic, LangGraph, or CrewAI to suit your specific needs.

Web search

Use this to fetch current information from the Internet and include citations in your responses. The agent sends the query, the tool runs a live search, and the results become part of the agent’s context.

```python
from openai import OpenAI

client = OpenAI()

response = client.responses.create(
    model="gpt-5",
    tools=[{"type": "web_search"}],
    input="What was a positive news story from today?",
)

print(response.output_text)
```

Code interpreter

Run Python code in a sandbox to perform calculations, data analysis, or generate plots. The agent creates code, executes it, and returns both results and any visual output references.

```python
from openai import OpenAI

client = OpenAI()

instructions = """
You are a personal math tutor. When asked a math question,
write and run code using the python tool to answer the question.
"""

resp = client.responses.create(
    model="gpt-4.1",
    tools=[
        {
            "type": "code_interpreter",
            "container": {"type": "auto"},
        }
    ],
    instructions=instructions,
    input="I need to solve the equation 3x + 11 = 14. Can you help me?",
)

print(resp.output)
```

File search

This tool lets the agent query your documents via the Responses API by performing semantic and keyword searches over uploaded files. The tool retrieves relevant document passages from your vector store, letting the agent reference proprietary content.

To use the tool, first create a vector store and upload your PDF, DOCX, TXT, or code files to it. The system automatically chunks each document, generates embeddings, and stores them in a searchable vector database. When the agent runs a file_search call with your vector_store_id, it retrieves and reranks the most relevant passages, enabling retrieval-augmented generation (RAG) on your proprietary content.
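The chunking step can be illustrated with a simple fixed-size splitter. This is not OpenAI's implementation: it counts words instead of tokens, and chunk_words is a hypothetical helper. It only shows why adjacent chunks overlap, so that a sentence cut at a boundary still appears with some surrounding context.

```python
def chunk_words(text: str, chunk_size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into chunks of `chunk_size` words, repeating `overlap` words between chunks."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # each chunk starts `step` words after the previous one
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reached the end of the text
    return chunks


doc = "one two three four five six seven eight nine ten eleven twelve"
for chunk in chunk_words(doc, chunk_size=5, overlap=2):
    print(chunk)
```

With chunk_size=5 and overlap=2, each chunk starts three words after the previous one, so the last two words of one chunk reappear at the start of the next.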

```python
from openai import OpenAI

client = OpenAI()

response = client.responses.create(
    model="gpt-4.1",
    input="What is deep research by OpenAI?",
    tools=[{
        "type": "file_search",
        "vector_store_ids": ["<vector_store_id>"],
    }],
)

print(response)
```

Image generation

The image generation tool allows you to generate images using a text prompt and optional image inputs. It leverages the GPT image model and automatically optimizes text inputs for improved performance. The agent passes your prompt to the image model and returns a base64-encoded PNG.

```python
import base64

from openai import OpenAI

client = OpenAI()

response = client.responses.create(
    model="gpt-5",
    input="Generate an image of grey tabby cat hugging an otter with an orange scarf",
    tools=[{"type": "image_generation"}],
)

# Save the image to a file
image_data = [
    output.result
    for output in response.output
    if output.type == "image_generation_call"
]

if image_data:
    image_base64 = image_data[0]
    with open("otter.png", "wb") as f:
        f.write(base64.b64decode(image_base64))
```

Computer-using agents

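Computer-using agents (CUAs) take a goal as a prompt and operate a computer interface to accomplish it. The model examines screenshots of the screen and returns actions such as clicks, scrolls, and keystrokes; your code executes those actions in a browser or virtual machine and sends back a new screenshot, and the loop repeats until the task, such as opening a system app or navigating a website, is complete.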

```python
from openai import OpenAI

client = OpenAI()

response = client.responses.create(
    model="computer-use-preview",
    tools=[{
        "type": "computer_use_preview",
        "display_width": 1024,
        "display_height": 768,
        "environment": "browser",  # other possible values: "mac", "windows", "ubuntu"
    }],
    input=[
        {
            "role": "user",
            "content": [
                {
                    "type": "input_text",
                    "text": "Check the latest OpenAI news on bing.com.",
                },
                # Optionally include a screenshot of the current screen:
                # {
                #     "type": "input_image",
                #     "image_url": f"data:image/png;base64,{screenshot_base64}",
                # },
            ],
        }
    ],
    reasoning={
        "summary": "concise",
    },
    truncation="auto",
)

print(response.output)
```

Remote MCP

The Model Context Protocol (MCP) is an open-source standard developed by Anthropic and released in November 2024. It provides a unified JSON-RPC interface through which AI agents connect to external systems, including databases, APIs, and file stores, via standardized “servers.” Because tools and resources are defined once on the server, agents can dynamically discover, authenticate with, and invoke functions across platforms such as Shopify, GitHub, and Databricks, eliminating the need for custom integrations and enabling mix-and-match workflows. OpenAI's Responses API can connect to remote MCP servers, such as those offered by Shopify and Cloudflare.
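Under the hood, MCP clients and servers exchange JSON-RPC 2.0 messages. The sketch below only builds two request payloads, using the tools/list and tools/call methods from the MCP specification; the add_to_cart tool name and its arguments are hypothetical, and a real session would first complete an initialize handshake and send these messages over a transport such as stdio or HTTP.

```python
import itertools
import json
from typing import Optional

# JSON-RPC request ids only need to be unique within a session.
_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request like the ones MCP clients send to servers."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it exposes...
list_request = jsonrpc_request("tools/list")
# ...then call one of them (hypothetical tool name and arguments).
call_request = jsonrpc_request(
    "tools/call",
    {"name": "add_to_cart", "arguments": {"product": "Blemish Toner Pads", "quantity": 1}},
)
print(list_request)  # {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(call_request)
```

Because every server speaks this same message format, a client written once can discover and call tools on any MCP server.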

```python
from openai import OpenAI

client = OpenAI()

response = client.responses.create(
    model="gpt-4o",
    tools=[{
        "type": "mcp",
        "server_label": "shopify",
        "server_url": "https://pitchskin.com/api/mcp",
    }],
    input="Add the Blemish Toner Pads to my cart",
)

print(response.output_text)
```

Creating custom functions and using them as tools

You can also create custom functions and use them as tools that can be added to the agent workflow as needed. This is done using OpenAI’s function-calling ability. Here’s an example of a purchase order creator function.

```python
functions = [
    {
        "name": "create_purchase_order",
        "description": (
            "Create a purchase order for approved items, supplier, quantities and delivery date."
        ),
        "parameters": {
            "type": "object",
            "properties": {
                "supplier": {
                    "type": "string",
                    "description": "Name of the supplier",
                },
                "items": {
                    "type": "array",
                    "items": {
                        "type": "object",
                        "properties": {
                            "item_id": {"type": "string"},
                            "description": {"type": "string"},
                            "quantity": {"type": "integer"},
                            "unit_price": {"type": "number"},
                        },
                        "required": ["item_id", "description", "quantity", "unit_price"],
                    },
                    "description": "List of items to order",
                },
                "delivery_date": {
                    "type": "string",
                    "description": "Expected delivery date in YYYY-MM-DD format",
                },
                "currency": {
                    "type": "string",
                    "description": "Currency code, e.g., USD, EUR",
                },
            },
            "required": ["supplier", "items", "delivery_date", "currency"],
            "additionalProperties": False,
        },
    }
]
```

Tutorial: A simple LangGraph-based tool-calling agent in Python

This guide walks you through building a LangGraph-based AI agent that leverages OpenAI’s native web search and image generation tools. This tutorial creates a simple agent that can chat and browse the internet, retrieving results for factual queries. It can also generate images.

Note: To use image generation models in OpenAI, you first need to verify your organization.

Step 1: Prepare the environment

To prepare the environment, install the langgraph package along with langchain, langchain-openai (which provides ChatOpenAI), the openai SDK, python-dotenv for loading environment variables, and the requests library. You can do this with pip in the terminal.

```bash
pip install langgraph langchain langchain-openai openai python-dotenv requests
```

Then set your OpenAI API key as an environment variable in your terminal (macOS/Linux shell syntax shown), or put it in a .env file, which load_dotenv reads in the next step.

```bash
export OPENAI_API_KEY="your_key_here"
```

Step 2: Import all necessary functions from the required packages

Next, import the classes and functions we need from the installed packages, along with a few standard-library modules.

```python
import os
from datetime import datetime
from typing import Annotated

import requests
from dotenv import load_dotenv
from langchain_core.messages import HumanMessage
from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.prebuilt import create_react_agent
from openai import OpenAI

load_dotenv()  # load OPENAI_API_KEY from a .env file if one exists
openai_client = OpenAI()
```

Step 3: Define the web search tool and the image generation tool

We will now define the web search and image generation tools using OpenAI's Python SDK. We use the GPT-4o model for web search and the DALL-E 3 model for image generation.

```python
@tool
def web_search(query: Annotated[str, "Search query"]) -> str:
    """Search the web for current information. Use this for recent events or real-time data."""
    try:
        response = openai_client.responses.create(
            model="gpt-4o",
            tools=[{"type": "web_search_preview", "search_context_size": "medium"}],
            input=f"Search for: {query}",
        )
        # Scan output items from last to first and return the first text content found
        if hasattr(response, "output") and response.output:
            for item in reversed(response.output):
                if hasattr(item, "content") and item.content:
                    for content in item.content:
                        if hasattr(content, "text"):
                            return content.text
        return f"Search completed for: {query}"
    except Exception as e:
        return f"Error: {e}"


@tool
def generate_image(
    prompt: Annotated[str, "Image description"],
    size: Annotated[str, "Size: 1024x1024, 1792x1024, or 1024x1792"] = "1024x1024",
) -> str:
    """Generate images using DALL-E. Use this when asked to create or generate images."""
    try:
        response = openai_client.images.generate(
            model="dall-e-3",
            prompt=prompt,
            size=size,
            quality="standard",
            n=1,
        )
        image_url = response.data[0].url
        revised_prompt = getattr(response.data[0], "revised_prompt", prompt)

        # Download the image and save it locally under a timestamped filename
        img_response = requests.get(image_url, timeout=30)
        if img_response.status_code == 200:
            timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")
            os.makedirs("generated_images", exist_ok=True)
            filepath = f"generated_images/image_{timestamp}.png"
            with open(filepath, "wb") as f:
                f.write(img_response.content)
            return f"Image saved: {filepath}\nURL: {image_url}\nRevised: {revised_prompt}"
        return f"Image generated: {image_url}\nRevised: {revised_prompt}"
    except Exception as e:
        return f"Error: {e}"
```

Step 4: Define the agent using LangGraph's prebuilt ReAct agent and provide the tools it needs

We will now define a simple ReAct agent that takes our tools and uses them based on the user's query. It uses the GPT-4o-mini model to interpret the input query and route it to the appropriate tool. Keep in mind that this is a prebuilt LangGraph agent that already supports tool calling; we also call the model's bind_tools method to attach our custom tools explicitly. Because the task is simple, a short system prompt instructs the agent to use the web search and image generation tools whenever the user's query calls for them. For more complex tasks, it is crucial to write a clear, step-by-step system prompt with explicit output format instructions so that the agent generates accurate output.

```python
def create_agent():
    """Create LangGraph agent with web search and image generation."""
    model = ChatOpenAI(model="gpt-4o-mini", temperature=0)
    tools = [web_search, generate_image]

    system_prompt = (
        "You are a helpful AI assistant with web search and image generation tools.\n"
        "ALWAYS use web_search for current information and recent events.\n"
        "ALWAYS use generate_image when asked to create images.\n"
        "Use tools proactively - don't rely solely on training data."
    )

    # MemorySaver keeps each thread's conversation history in memory
    agent = create_react_agent(
        model.bind_tools(tools, tool_choice="auto"),
        tools,
        prompt=system_prompt,
        checkpointer=MemorySaver(),
    )
    return agent
```

Step 5: Invoke the agent

Next, define a chat helper that sets a thread ID and invokes the agent with the user's message. The MemorySaver checkpointer uses the thread ID to keep each conversation's history separate.

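```python
def chat(agent, message: str, thread_id: str = "default"):
    """Send message to agent and get response."""
    # The thread_id selects which conversation history the checkpointer loads
    config = {"configurable": {"thread_id": thread_id}}
    result = agent.invoke({"messages": [HumanMessage(content=message)]}, config)
    # The last message in the returned state is the agent's final answer
    return result["messages"][-1].content
```

Step 6: Test with an input query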

Finally, pass in the queries you want the agent to handle; it decides which tools to use for each one.

```python
if __name__ == "__main__":
    agent = create_agent()
    print("Agent initialized!\n")

    # Example 1: Web search
    print("=" * 60)
    print("Example 1: Web Search")
    print("=" * 60)
    response = chat(agent, "What are the latest AI developments in November 2025?", "session_1")
    print(f"Response: {response}\n")

    # Example 2: Image generation
    print("=" * 60)
    print("Example 2: Image Generation")
    print("=" * 60)
    response = chat(agent, "Generate an image of a person blowing bubbles", "session_2")
    print(f"Response: {response}\n")
```

Outputs

Here are the outputs from the tutorial steps above.

============================================================

Example 1: Web Search

============================================================

Response: As of November 2025, several significant developments in artificial intelligence (AI) have emerged:

### International Collaborations and Policies

- **U.S. Technology Prosperity Deals**: The U.S. has formed "technology prosperity deals" with Japan and South Korea to enhance cooperation in AI, quantum computing, and other advanced technologies. These agreements aim to strengthen innovation ecosystems and coordinate exports, focusing on research security and resilient supply chains.

- **European Union's AI Act**: The EU's Artificial Intelligence Act, which became effective in August 2024, continues to shape AI regulation within the EU, providing a legal framework for AI with provisions gradually becoming operational.

### Advancements in AI Models and Tools

- **OpenAI's GPT-5 Release**: OpenAI launched GPT-5, featuring a dynamic router that optimizes responses based on the complexity of tasks, achieving high performance across various domains.

- **Google DeepMind's CodeMender**: This new AI tool detects and repairs software vulnerabilities proactively, utilizing advanced techniques like fuzzing and static analysis.

- **Z.ai's GLM 4.6**: The Chinese AI company released this model, which offers enhanced performance through advanced quantization techniques.

### AI Infrastructure and Investment

- **AI Infrastructure Expansion**: Global spending on AI infrastructure is projected to reach $3–$4 trillion by 2030, with major firms expected to invest significantly in 2025.

- **Anthropic's Growth**: The AI firm is projected to see a substantial increase in revenue, with a valuation surge following a major funding round.

### Emerging AI Technologies

- **Model Context Protocol (MCP) Servers**: These servers are becoming foundational for scalable, context-aware AI systems, enabling real-time decision-making across various environments.

- **DeepMind's AlphaEvolve**: This evolutionary coding agent has made algorithmic discoveries and developed new heuristics for optimizing data center scheduling.

These developments highlight the rapid progress in AI, encompassing international collaboration, regulatory frameworks, model advancements, infrastructure investment, and emerging technologies.


============================================================

Example 2: Image Generation

============================================================

Response: Here is the image of a person wearing a mask in an urban environment:

The image shows a young woman standing outdoors on a city street, smiling warmly at the camera.

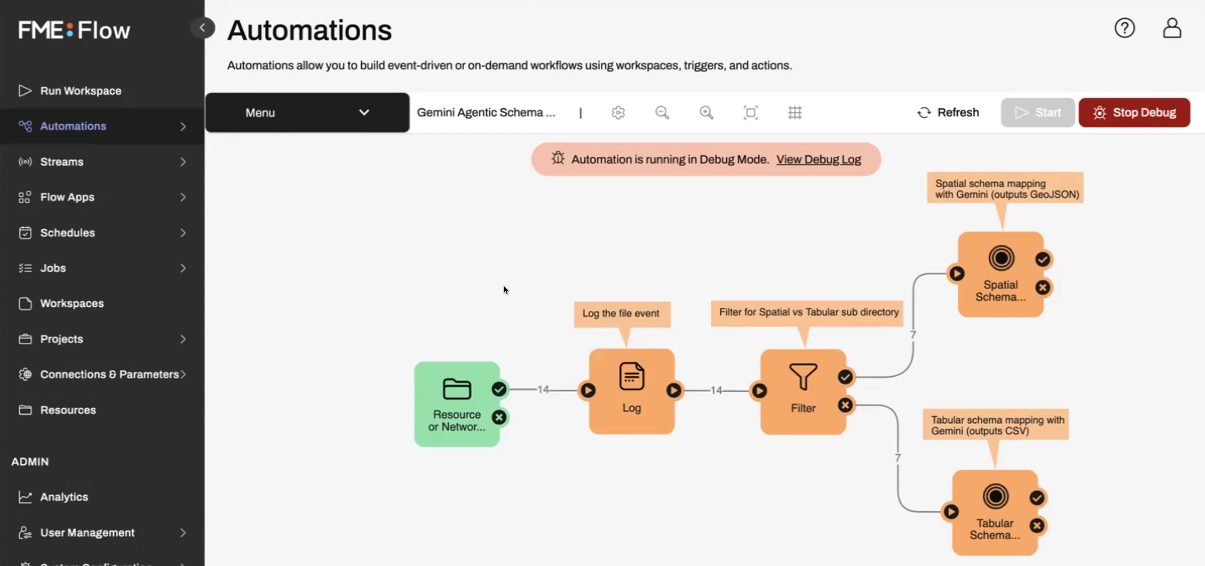

Leveraging FME by Safe Software to build data pipelines and agentic systems

Safe Software’s FME (formerly called the Feature Manipulation Engine) is a no-code, all-data, any-platform application where users can build data pipelines using AI agents to ingest structured or unstructured data from multiple schemas and data types into standardized formats. It helps users build automated workflows using AI agents hosted on leading cloud platforms such as Azure, GCP, and AWS.

FME has two solutions for building data workflows: FME Form and FME Flow.

FME Form

FME Form provides a no-code environment for users to build automated data workflows. It offers options to add multiple agents and seamlessly connect outputs from one block to another.

FME Flow

FME Flow lets users deploy their completed workflows and bring the solution live. It also handles CI/CD of data workflows or applications.

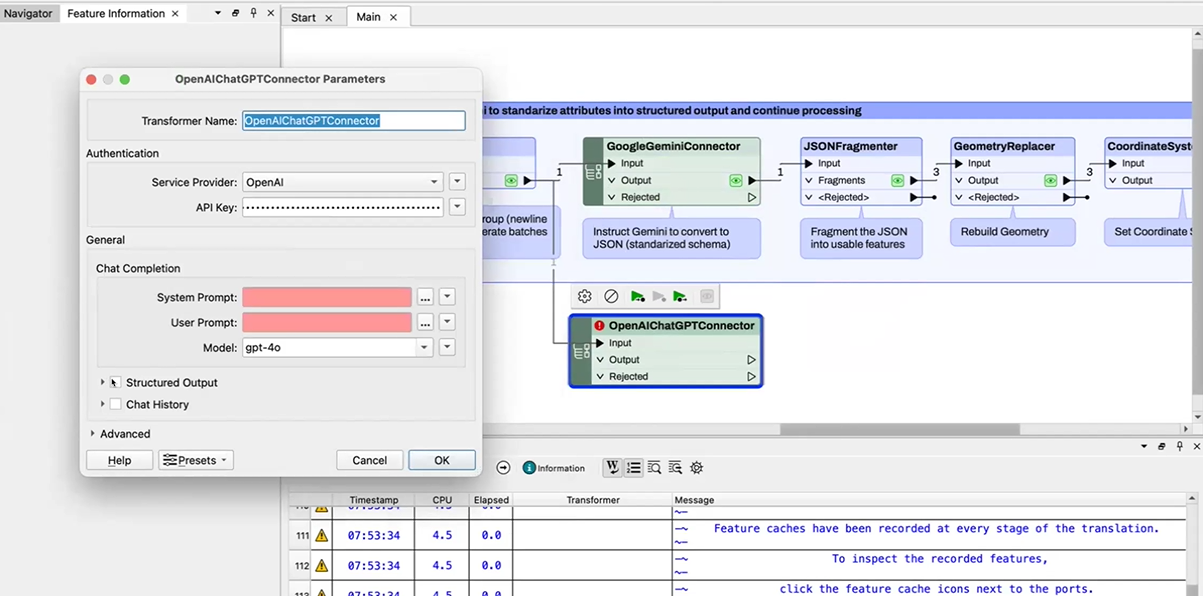

Tutorial: Creating a simple agent using FME

Let’s explore how to build a simple agent in FME using OpenAI's image generation capabilities.

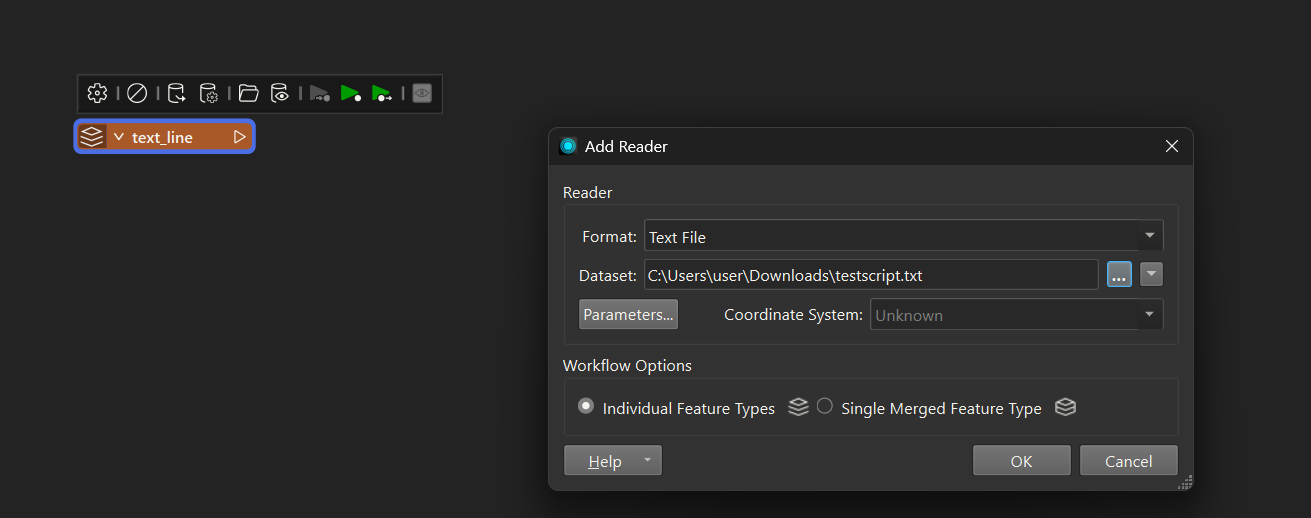

- From FME Workbench, select the Reader icon and choose Text as the format. This lets the workflow take input from a text file.

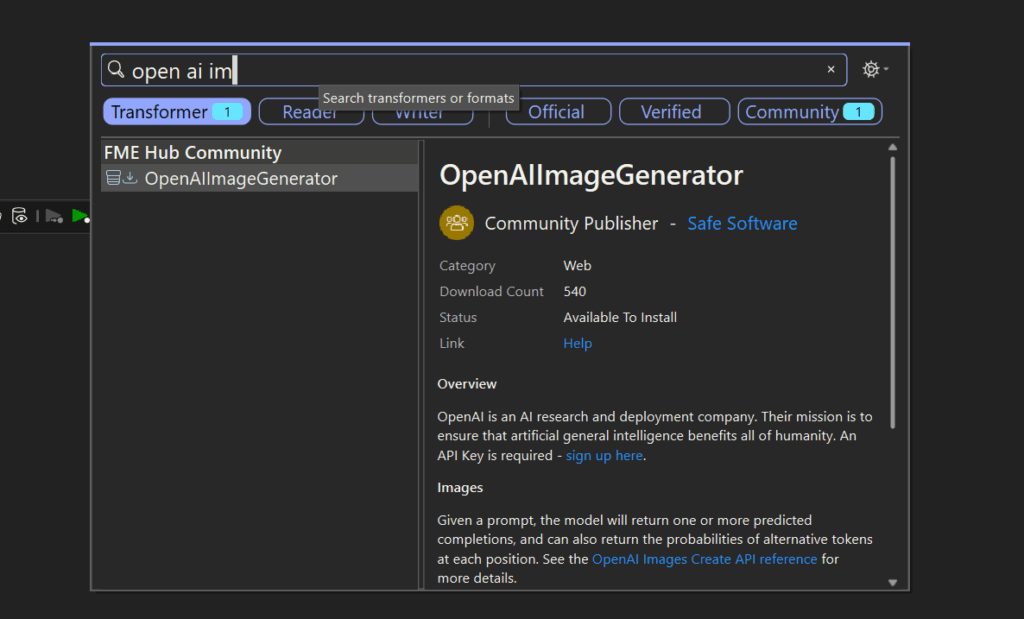

- From the Transformers section of FME, search for OpenAIImageGenerator.

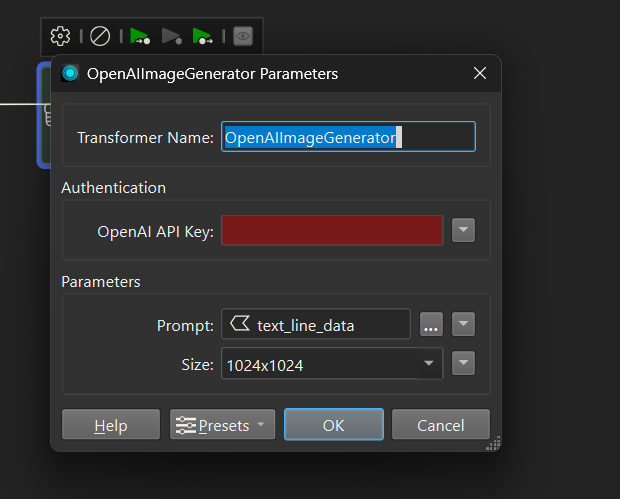

- Configure your OpenAI Key and select the output from the TextInput as the prompt.

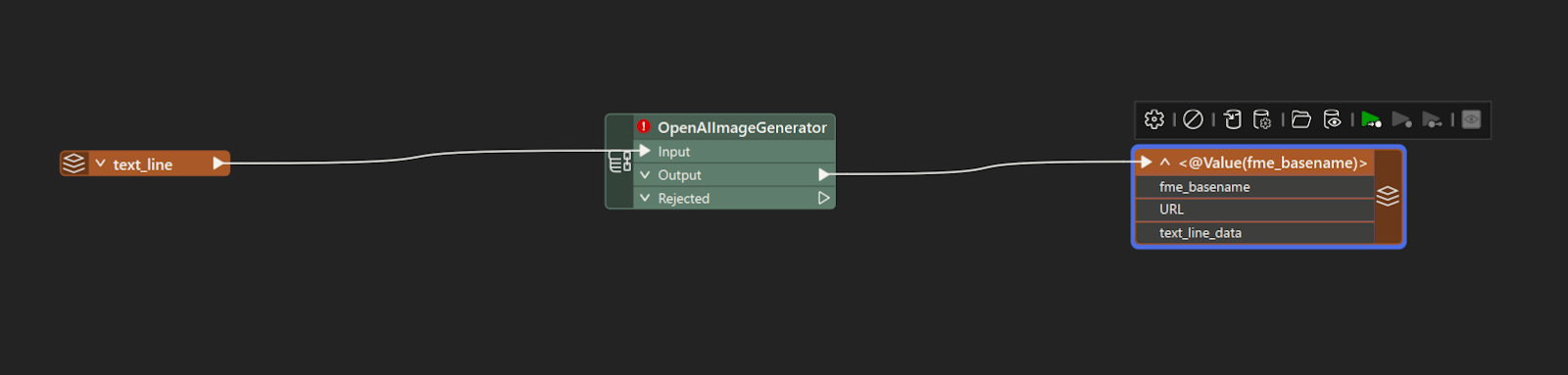

- Create a Writer instance and connect it to the output of the OpenAIImageGenerator transformer.

The final pipeline will look like this:

As you can see, creating an agent that uses the OpenAIImageGenerator transformer takes only a few drag-and-drop steps in FME.

Best practices for building tool-calling agents

Building production-ready AI agents with tool-calling capabilities requires striking a balance between automation and control, security and functionality, and flexibility and reliability. Organizations implementing these systems encounter common challenges related to tool selection, authentication, database safety, and output consistency that can hamper projects if not addressed early.

In this section, we cover several best practices and key pointers to remember when building production-grade agents with tool-calling capabilities.

Provide clear tool definitions when adding tools to your AI agents

Tool clarity directly impacts agent reliability and determines whether your system succeeds or fails in production. Poorly defined tools lead to incorrect selections, API failures, and frustrated users. The challenge lies in providing enough context for correct decision-making without overwhelming the agent with unnecessary information.

For example, consider a legal contract use case where the agent needs tools for four different tasks: extracting key clauses, assessing risk factors, validating compliance requirements, and generating summaries. Each tool serves a specific purpose and requires different data inputs, so it is essential to define each one clearly. When building such tools, the developer also needs to set clear boundaries on when, on what input, and at which step each tool should be used. Otherwise, an agent with poorly defined tools might attempt to use the risk assessment tool for clause extraction, leading to API errors and confused responses.
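To make those boundaries concrete, here is a sketch of how two of the four tools might be declared as OpenAI-style function tools, with each description stating when to use the tool and when not to. The tool names, parameters, and the missing_guidance check are hypothetical; the check is only a crude lint that flags descriptions lacking explicit usage guidance.

```python
# Hypothetical tool definitions for the legal-contract agent. Each description
# states what the tool does, when to use it, and when not to.
CONTRACT_TOOLS = [
    {
        "type": "function",
        "name": "extract_clauses",
        "description": (
            "Extract clauses of a given type (e.g. termination, indemnity) from contract text. "
            "Use when the user asks what a contract says. "
            "Do not use to judge whether a clause is risky; use assess_risk for that."
        ),
        "parameters": {
            "type": "object",
            "properties": {
                "contract_text": {"type": "string"},
                "clause_type": {"type": "string"},
            },
            "required": ["contract_text", "clause_type"],
        },
    },
    {
        "type": "function",
        "name": "assess_risk",
        "description": (
            "Rate the risk of clauses that have already been extracted. "
            "Use after extract_clauses. "
            "Do not use on the full contract; pass only the extracted clauses."
        ),
        "parameters": {
            "type": "object",
            "properties": {"clauses": {"type": "array", "items": {"type": "string"}}},
            "required": ["clauses"],
        },
    },
]


def missing_guidance(tools: list[dict]) -> list[str]:
    """Return names of tools whose description lacks explicit use / do-not-use guidance."""
    return [
        t["name"]
        for t in tools
        if "Use " not in t["description"] or "Do not use" not in t["description"]
    ]


print(missing_guidance(CONTRACT_TOOLS))  # []
```

Cross-references such as "use assess_risk for that" give the model an explicit alternative, which makes a wrong tool choice less likely than a bare prohibition would.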

Navigating the constraints around context length is also critical when working with AI agents. With large documents or long descriptions, developers should send only the sections relevant to a particular tool rather than the entire content. For example, if the relevant information is mostly located toward the end of a document, send only the last few pages as context instead of the whole document. Passing everything adds noise that can confuse the agent and reduce its accuracy.
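A minimal way to send only the relevant sections is to score each section against the tool's query and keep the best matches. This sketch uses plain keyword overlap as an assumption; a production system would more likely use embeddings or BM25. The contract text, section names, and helper functions are made up for illustration.

```python
import re


def _words(text: str) -> set[str]:
    """Lowercased word set, used as a crude relevance signal."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_sections(sections: dict[str, str], query: str, top_k: int = 1) -> dict[str, str]:
    """Return the `top_k` sections that share the most words with `query`."""
    query_words = _words(query)
    ranked = sorted(
        sections.items(),
        key=lambda item: len(query_words & _words(item[1])),
        reverse=True,
    )
    return dict(ranked[:top_k])


contract = {
    "1. Definitions": "In this agreement, Supplier means the party providing services.",
    "7. Payment": "Invoices are payable within 30 days of receipt.",
    "12. Termination": "Either party may terminate this agreement with 60 days written notice.",
}
print(list(select_sections(contract, "How much notice is needed to terminate the agreement?")))
```

Only the selected sections would then be passed to the tool call, keeping the rest of the document out of the agent's context.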

Create fallback mechanisms with proper logging

Agents require structured decision-making frameworks to select suitable tools based on context, user intent, and available data. Without systematic guidance, agents either default to familiar tools repeatedly or make random selections that break workflows.

It is essential to establish fallback mechanisms when tool calls fail, whether that’s due to API timeouts, invalid parameters, or service unavailability. Some of the most commonly used methods include retrying with adjusted parameters, switching to alternative tools that achieve similar objectives, or escalating to human oversight by triggering a human-in-the-loop workflow.

Since agents have memory and can store every action they have performed, it is essential to log each tool call, including its inputs, its output, and whether that output matched what was expected. These logs show what has been done and help determine the next step, both for the agent and for the developer debugging it. System prompts should clearly define the routing logic and explicitly describe each tool's capabilities, with guidelines on how and when to use it.
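The fallback chain described above (retry, switch to an alternative tool, escalate to a human) can be sketched with Python's standard logging module. The tools here are hypothetical stubs, retries reuse the same parameters rather than adjusting them, and the "human" return value stands in for a real human-in-the-loop trigger.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("agent.tools")


def call_with_fallback(primary, fallback, args: dict, retries: int = 2, base_delay: float = 0.1):
    """Try `primary` with retries and exponential backoff, then `fallback`, then escalate.

    Returns (source, result), where source is "primary", "fallback", or "human".
    """
    for attempt in range(1, retries + 1):
        try:
            result = primary(**args)
            log.info("primary %s succeeded on attempt %d", primary.__name__, attempt)
            return "primary", result
        except Exception as exc:  # any tool failure triggers the fallback policy
            log.warning("primary %s failed (attempt %d/%d): %s", primary.__name__, attempt, retries, exc)
            if attempt < retries:
                time.sleep(base_delay * 2 ** (attempt - 1))
    try:
        result = fallback(**args)
        log.info("fallback %s succeeded", fallback.__name__)
        return "fallback", result
    except Exception as exc:
        log.error("fallback %s failed: %s; escalating to a human", fallback.__name__, exc)
        return "human", None


# Hypothetical tools: the live search API is down, but a cached index still works.
def live_search(query: str) -> str:
    raise TimeoutError("search API timed out")


def cached_search(query: str) -> str:
    return f"cached results for {query!r}"


print(call_with_fallback(live_search, cached_search, {"query": "Q3 revenue"}))
```

The log lines record every attempt and its outcome, which is the trail the agent (or a developer) needs to decide what to do next.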

Give restricted access to the AI agents for sensitive information

When building AI agents, you must ensure that the agent has no access to documents or databases containing sensitive information or client-specific configurations that should never be altered. For example, if you use a SQL database to store client information and other details, it is good practice to give the agent read-only access, not write access, so that records cannot be changed automatically during a task. If you do use an agent that can make changes to a database, design it with a human-in-the-loop strategy: the agent only suggests changes, and a human reviews and applies them. Similarly, guardrails should ensure that restricted files, or content that is sensitive or contains personally identifiable information (PII), are never passed to AI agents as context.

Standardize output formats for reliable downstream processing

Inconsistent tool outputs create integration challenges and debugging nightmares throughout agent workflows. When one tool returns string data while another provides structured objects, downstream systems struggle to process results reliably. Standardization enables automated workflows, simplifies error handling, and supports system evolution as requirements change.

It is essential to adopt a single standard output format and use it at every step, so that outputs are parsed consistently throughout the code. JSON and YAML are the most common choices; many language models now support structured JSON or YAML output, which can be parsed directly into key-value data. If each tool returns its own format, every format must be reproduced exactly on every call and each one needs its own parser, which multiplies the opportunities for failure.
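A shared result envelope lets one parser handle every tool. This is a minimal sketch that assumes a JSON envelope with `tool`, `ok`, `data` and `error` fields. The schema, and the stand-in tools `search_tool` and `image_tool`, are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Optional


@dataclass
class ToolResult:
    """One envelope for every tool, so downstream code needs a single parser."""
    tool: str
    ok: bool
    data: dict = field(default_factory=dict)
    error: Optional[str] = None

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, raw: str) -> "ToolResult":
        payload = json.loads(raw)
        missing = {"tool", "ok"} - set(payload)
        if missing:
            raise ValueError(f"missing keys: {sorted(missing)}")
        return cls(
            tool=payload["tool"],
            ok=bool(payload["ok"]),
            data=payload.get("data") or {},
            error=payload.get("error"),
        )


def search_tool(query: str) -> ToolResult:
    """Hypothetical search tool; the hits are placeholders."""
    hits = [f"{query} result 1", f"{query} result 2"]
    return ToolResult(tool="web_search", ok=True, data={"hits": hits})


def image_tool(prompt: str) -> ToolResult:
    """Hypothetical image tool that reports a failure in the same envelope."""
    return ToolResult(tool="image_generation", ok=False, error="quota exceeded")
```

A success and a failure have the same shape, so error handling reduces to checking `ok`.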

You should also add retry logic at each API call so that the call is repeated whenever its output looks inaccurate or the parser fails to extract the expected entities.
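Below is a minimal sketch of a parse-and-retry loop. `call_model` is a hypothetical callable that takes a prompt and returns the model's raw text; substitute your own client. On each failure, the parser error is added to the prompt, so the next attempt knows what went wrong.

```python
import json
from typing import Any, Callable


def call_with_parse_retry(
    call_model: Callable[[str], str],
    prompt: str,
    required_keys: set,
    max_attempts: int = 3,
) -> dict:
    """Call the model until it returns a JSON object containing required_keys."""
    last_error = ""
    for _ in range(max_attempts):
        full_prompt = prompt if not last_error else (
            f"{prompt}\n\nYour previous reply could not be parsed ({last_error}). "
            "Reply with a single JSON object only."
        )
        raw = call_model(full_prompt)
        try:
            parsed: Any = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = f"invalid JSON: {exc.msg}"
            continue
        if not isinstance(parsed, dict):
            last_error = "expected a JSON object"
            continue
        missing = required_keys - set(parsed)
        if missing:
            last_error = f"missing keys {sorted(missing)}"
            continue
        return parsed
    raise RuntimeError(f"Gave up after {max_attempts} attempts: {last_error}")
```

When `RuntimeError` is raised, it is a natural trigger for the fallback or human-escalation path described earlier.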

Implement different context engineering strategies to minimize hallucinations

Context management separates functional demos from production-ready systems. Agents must maintain conversation state, track task progress, handle interruptions gracefully, and recover from failures while keeping context windows within manageable limits. Poor context engineering leads to hallucinations, inconsistent responses, and system instability under real-world conditions.

It is essential to write context-trimming functions for each API call so that every call receives only the context relevant to its query. Irrelevant information wastes the context window and can lead to hallucinations or a drop in accuracy.
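A trimming function ranks candidate context chunks against the query and keeps only what fits a budget. This is a minimal sketch. Keyword overlap stands in for the embedding similarity a production system would more likely use, and word counts approximate token counts. `select_context` and `max_words` are hypothetical names.

```python
import re


def _tokens(text: str) -> set:
    """Lowercased alphanumeric words; a crude stand-in for real tokenization."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_context(query: str, chunks: list, max_words: int = 200) -> list:
    """Keep only chunks that share words with the query, best first, within a word budget."""
    query_tokens = _tokens(query)
    scored = [(len(query_tokens & _tokens(c)), i, c) for i, c in enumerate(chunks)]
    relevant = sorted((s for s in scored if s[0] > 0), key=lambda s: (-s[0], s[1]))
    picked, used = [], 0
    for _, index, chunk in relevant:
        n_words = len(chunk.split())
        if used + n_words > max_words:
            continue  # skip chunks that would overflow the budget
        picked.append((index, chunk))
        used += n_words
    # Return the selected chunks in their original order for readability.
    return [chunk for _, chunk in sorted(picked)]
```

Irrelevant chunks, such as the office-cat sentence in the test, never reach the model. When the budget is tight, the highest-scoring chunks win.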

Another strategy for keeping context length under control is to store the context as JSON key-value pairs keyed by API call ID. Each call then retrieves only its own entry by ID and includes it in that call's user message.
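A small context store keyed by call ID could look like this. This is a minimal sketch. `ContextStore`, the call IDs, and the message template are all hypothetical.

```python
import json
from typing import Any


class ContextStore:
    """Keeps per-call context as JSON-serializable key-value records, keyed by call ID."""

    def __init__(self) -> None:
        self._records: dict = {}

    def save(self, call_id: str, **context: Any) -> None:
        self._records[call_id] = context

    def dumps(self) -> str:
        """Serialize every record, e.g. to persist the agent's context between runs."""
        return json.dumps(self._records, indent=2)

    def build_user_message(self, call_id: str, question: str) -> str:
        """Include only the context stored under this call ID."""
        context = self._records.get(call_id, {})
        return f"Context:\n{json.dumps(context)}\n\nQuestion: {question}"


store = ContextStore()
store.save("sql_generation", schema="clients(id, name)", dialect="sqlite")
store.save("web_search", results=["a", "b"])
message = store.build_user_message("sql_generation", "List all client names")
```

In this example, `message` carries the schema and dialect but none of the search results. That filtering is what keeps each call's context short.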

Use humans in the loop wherever critical decision-making is required

As discussed earlier, any authorization, database update, or decision that can affect the product's operating costs should always be routed to a human for approval. Agents can also get stuck in loops, repeating the same tool calls, or suddenly drift into irrelevant answers. To guard against this, create custom checks that trigger human intervention whenever costs spike or output quality drops.
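These triggers can be combined in a monitor that the agent loop consults after every tool call. This is a minimal sketch. The budget, quality floor, and loop window are hypothetical defaults. It assumes some evaluator supplies a quality score between 0 and 1. `EscalationMonitor` is a hypothetical name.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EscalationMonitor:
    max_cost_usd: float = 1.00   # hypothetical per-task budget
    min_score: float = 0.7       # hypothetical quality floor (0..1)
    loop_window: int = 3         # identical consecutive calls treated as a loop
    spent_usd: float = 0.0
    recent_calls: deque = field(default_factory=deque)

    def record(self, tool: str, args: str, cost_usd: float,
               score: Optional[float] = None) -> Optional[str]:
        """Return a reason to hand the task to a human, or None to continue."""
        self.spent_usd += cost_usd
        self.recent_calls.append((tool, args))
        if len(self.recent_calls) > self.loop_window:
            self.recent_calls.popleft()
        if self.spent_usd > self.max_cost_usd:
            return f"cost ${self.spent_usd:.2f} exceeds budget ${self.max_cost_usd:.2f}"
        if score is not None and score < self.min_score:
            return f"quality score {score:.2f} below {self.min_score:.2f}"
        if len(self.recent_calls) == self.loop_window and len(set(self.recent_calls)) == 1:
            return f"possible loop: {tool} called {self.loop_window} times with the same arguments"
        return None
```

When `record` returns a reason, the agent should pause and route the task, together with that reason, to a human reviewer.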

Keep the human-in-the-loop approach in place until the agent has been exercised against the full range of scenarios your app or product is likely to encounter. The feedback and labels collected during human review can then be used to fine-tune the language model so that it handles similar scenarios in the future.
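Fine-tuning on review feedback requires capturing each decision in a reusable form. This is a minimal sketch that assumes a JSON Lines file and a simple prompt/label pair format. The field names and both functions are hypothetical, and the fine-tuning step itself depends on your model provider.

```python
import json
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional


def record_review(path: Path, prompt: str, agent_output: str,
                  approved: bool, corrected_output: Optional[str] = None) -> dict:
    """Append one human review decision as a JSON line."""
    example = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "agent_output": agent_output,
        "approved": approved,
        # Label for later fine-tuning: the agent's answer if approved, else the human's correction.
        "label": agent_output if approved else corrected_output,
    }
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(example) + "\n")
    return example


def load_training_pairs(path: Path) -> list:
    """Return (prompt, label) pairs, skipping rejections that have no correction."""
    pairs = []
    with path.open(encoding="utf-8") as f:
        for line in f:
            record = json.loads(line)
            if record["label"]:
                pairs.append((record["prompt"], record["label"]))
    return pairs
```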

Last thoughts

Building AI agents with tool-calling capabilities is not just about making language models more powerful; it's about giving them tools that help people solve complex problems. We're transitioning from simple question-answering systems to intelligent assistants that can reason, make decisions, and take action. But the real magic lies in how well those tools come together to solve real problems.

In many ways, building such agents is like leading a well-coordinated team: each tool has a defined role, clear boundaries, and a shared goal. The developer’s job is to create a balance between automation and control, speed and safety, innovation and responsibility.

If you strike this balance correctly, tool-calling agents can truly transform how you work, enabling businesses to make faster decisions with access to domain-specific documents, databases, integrations, and more, thereby improving customer experiences and reducing repetitive effort. However, even as these systems become more sophisticated, it's essential to remember that human judgment remains crucial. AI should assist people, not replace them. Humans are still needed to verify that agents perform tasks correctly, exercise sound judgment, and select the appropriate tools.
