AI Agent Platform: Tutorial & Must-Have Features

AI agents are intelligent autonomous systems that can execute tasks on behalf of a user or another system. They are designed to learn, reason, and perform tasks independently, adapting to custom preferences or needs.

Powered by LLMs, AI agents employ an architecture that involves numerous components, including vector databases, short-term memory, long-term memory, and reasoning loops. Hence, developing an AI agent from scratch requires considerable engineering expertise.

AI agent platforms address this problem by providing an intuitive interface with pre-built functionalities. You can build and maintain AI agents, manage workflows, and integrate external systems. There are many agentic platforms, each with pros and cons, so choosing one might be confusing.

This article explores key AI agent capabilities and suggests critical factors to consider when selecting the best fit for your needs.

Summary of key AI agent platform concepts

| Concept | Description |
|---|---|
| AI agents | AI agents are intelligent systems that can reason and execute autonomous tasks on behalf of users or other systems. |
| Agentic workflows | Agentic workflows are logic sequences that orchestrate how AI agents reason, act, and integrate with tools, APIs, or function calls to achieve a specific goal. |
| AI agent platforms | AI agent platforms provide tools and libraries for designing, implementing, and monitoring AI agents and workflows. |
| Flexibility vs. control | Define clear boundaries for autonomy to strike a balance between flexibility and control. • Decision-making flexibility enables greater creativity and adaptability but can lead to unpredictable outcomes. • Too much control prevents agents from learning and working efficiently. |
| No-code development | No-code and low-code platforms support complex multi-agent integrations with little or no hand-written code. |
| Support for LLMs | Support for popular large language models (LLMs), such as GPT, Claude, Mistral, Llama, and Gemini, lets users choose a model based on their specific requirements. |
| Ease of data integration through APIs | AI agents and workflows rely on external APIs and function calls to execute tasks, so AI agent platforms must offer comprehensive integration support for third-party APIs and function calls. |

Understanding AI agents

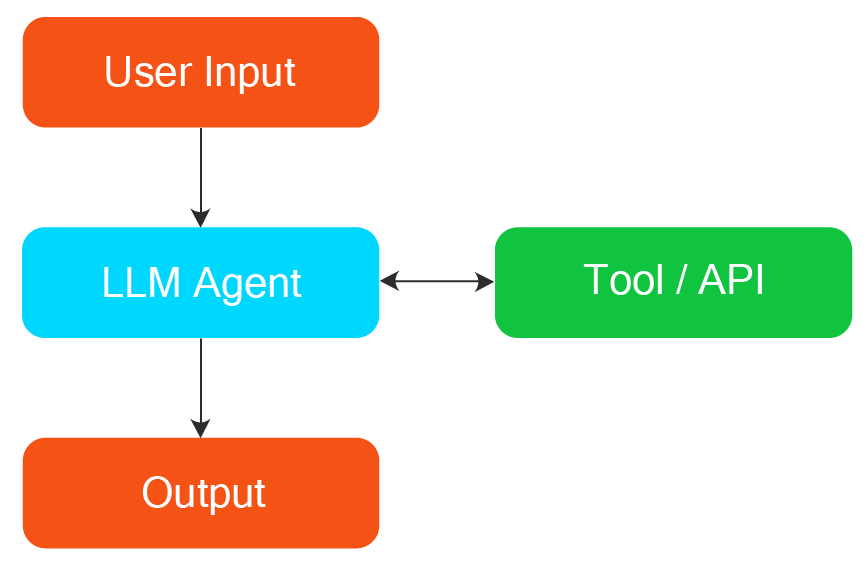

AI agents are autonomous programs powered by large language models (LLMs) that can interact with tools, APIs, or environments to achieve a specific goal. In contrast to traditional software routines, AI agents exhibit reasoning, retain memory, and show autonomy in decision-making.

Agentic workflow

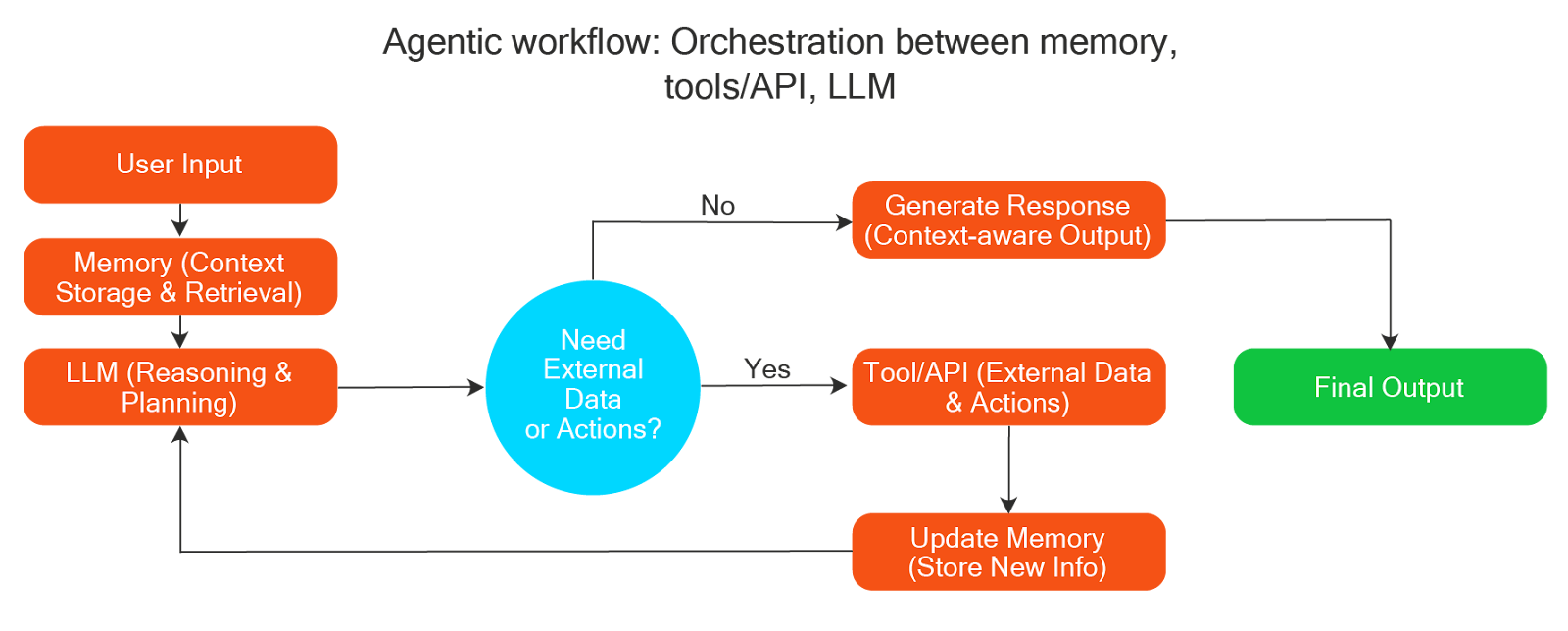

An agentic workflow breaks a task into smaller subtasks, often using predefined workflow patterns such as prompt chaining, routing, and parallelization. It orchestrates multiple components, including LLMs, tools, APIs, and memory, to complete the task.

The agentic workflow explicitly controls each process stage by deciding when to call tools, store or retrieve memory, or pass subtasks to an LLM. The LLMs are only responsible for focused, well-defined subtasks, not the overall decision-making. The structured setup reduces outcome unpredictability and makes anticipating and reproducing the system’s behaviour easier.

Below is an example of a workflow using a single agent orchestrating memory and a decision block for routing to an external tool or generating an output.
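The following minimal plain-Python sketch makes the pattern concrete with a single-agent workflow. The `route` function is a hypothetical stand-in for an LLM routing decision, `search_tool` is a stub for an external tool, and memory is a simple list of strings. A real platform would replace each of these with its own components. The point is that the workflow code, not the model, decides which branch runs.

```python
from dataclasses import dataclass, field

TOOL_KEYWORDS = ("weather", "forecast")  # hypothetical routing rule


def search_tool(query: str) -> str:
    """Hypothetical external tool; a real workflow would call an API here."""
    return f"stub result for '{query}'"


def route(question: str) -> str:
    """Decision block: a stand-in for an LLM routing call."""
    return "tool" if any(k in question.lower() for k in TOOL_KEYWORDS) else "answer"


@dataclass
class SingleAgentWorkflow:
    memory: list[str] = field(default_factory=list)

    def run(self, question: str) -> str:
        # Store the question so later turns can refer back to it.
        self.memory.append(f"user: {question}")
        # The workflow, not the model, decides which branch runs.
        if route(question) == "tool":
            answer = f"Tool result: {search_tool(question)}"
        else:
            asked = sum(1 for m in self.memory if m.startswith("user: "))
            answer = f"You have asked {asked} question(s) so far."
        # Record the output so memory holds the full exchange.
        self.memory.append(f"agent: {answer}")
        return answer
```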

How an AI agent platform helps build AI agents

AI agent platforms offer high-level abstractions and building blocks for easily designing, deploying, and monitoring AI agents.

LLM call abstraction

At its core, an AI agent depends on interacting with an LLM. Doing so directly through raw API calls can be tedious, error-prone, and repetitive. AI agent platforms provide higher-level abstractions that simplify and standardize this process. For example, they provide:

- A unified interface for multi-model support, as LLM APIs differ in structure across providers

- Abstractions for rate limits or timeouts with built-in retry logic, exception handling, and graceful error reporting

- Abstractions for multi-agent collaboration where specialized agents independently handle subtasks but coordinate through shared context and workflows

- Reasoning graph building blocks for hierarchical and sequential task flows

- Prompt templates that reduce the need for hardcoded prompts

These features support consistency, facilitate easier debugging, and enable reuse. Developers can switch between models as needed, building agents more efficiently and reliably.
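Below is a minimal sketch of two of these abstractions. It uses hypothetical provider adapters (`EchoModel`, `FlakyModel`) in place of real SDKs. Every adapter implements a shared `ChatModel` interface, and a `call_with_retry` helper retries timeouts with exponential backoff. The `sleep` parameter is injectable so the backoff schedule can be observed without actually waiting.

```python
import time
from typing import Callable, Protocol


class ChatModel(Protocol):
    """Unified interface: every provider adapter exposes the same method."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical adapter standing in for one provider's SDK."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class FlakyModel:
    """Hypothetical adapter that times out a fixed number of times before succeeding."""

    def __init__(self, failures: int) -> None:
        self.failures = failures
        self.calls = 0

    def complete(self, prompt: str) -> str:
        self.calls += 1
        if self.calls <= self.failures:
            raise TimeoutError("provider timed out")
        return f"flaky ok: {prompt}"


def call_with_retry(
    model: ChatModel,
    prompt: str,
    max_retries: int = 2,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call any ChatModel, retrying transient errors with exponential backoff."""
    for attempt in range(max_retries + 1):
        try:
            return model.complete(prompt)
        except (TimeoutError, ConnectionError):
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")
```

Agent code depends only on `ChatModel`, so swapping providers means writing one more adapter rather than touching every call site.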

Short- and long-term context management

AI agents require memory to maintain context and continuity, as well as to learn from past interactions. AI agent platforms introduce structured memory systems to manage past inputs and other information.

Short-term memory

Short-term memory retains the recent conversation history and the actions the agent has taken. It maintains the flow of multi-turn conversations, allowing the agent to refer back to or clarify prior context.

Since LLMs have an input token limit, platforms apply techniques to effectively maintain recent history, such as:

- Keeping the most recent N turns in memory

- Summarizing earlier conversations

- Structuring messages by role to maintain clarity
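The sketch below combines these three techniques under simple assumptions:

- A fixed window holds the most recent messages.
- A hypothetical `summarize` function folds older messages into a running summary. A real platform would ask an LLM to compress those turns.
- Messages are role-tagged.

The class and parameter names are illustrative, not any platform's API.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    role: str  # "system", "user", or "assistant"
    content: str


def summarize(messages: list[Message]) -> str:
    """Hypothetical summarizer; a real platform would ask an LLM to compress these."""
    return " | ".join(f"{m.role}: {m.content[:20]}" for m in messages)


@dataclass
class ShortTermMemory:
    max_messages: int = 4  # keep only the most recent N messages verbatim
    messages: list[Message] = field(default_factory=list)
    summary: str = ""

    def add(self, role: str, content: str) -> None:
        self.messages.append(Message(role, content))
        if len(self.messages) > self.max_messages:
            # Fold the overflow into the running summary instead of discarding it.
            overflow = self.messages[: -self.max_messages]
            self.messages = self.messages[-self.max_messages :]
            new_part = summarize(overflow)
            self.summary = f"{self.summary} | {new_part}" if self.summary else new_part

    def context(self) -> list[Message]:
        """Role-structured context for the LLM: summary first, then recent messages."""
        prefix = [Message("system", f"Earlier conversation: {self.summary}")] if self.summary else []
        return prefix + self.messages
```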

Long-term memory

Long-term memory generally includes user preferences and goals, factual knowledge, past conversations, and domain-specific data. Platforms implement long-term memory using external storage systems, such as vector databases, relational databases, document databases, and knowledge graphs. When an agent needs information from long-term memory, it uses a retrieval mechanism to query relevant content.
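As a rough illustration of the retrieval step, the toy store below uses word-count vectors and cosine similarity in place of a real embedding model and vector database. `LongTermMemory`, `embed`, and `retrieve` are hypothetical names.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real platform would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class LongTermMemory:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.records: list[tuple[str, Counter]] = []

    def store(self, text: str) -> None:
        self.records.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.records, key=lambda r: cosine(q, r[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

Replacing `embed` with a real embedding model, and the list with a vector database, keeps the same store-then-retrieve shape.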

Built-in decision-making patterns

The most essential part of any agent is the decision flow that enables it to reason about the task, break it down into manageable subtasks, and execute them to reach its goal. AI agent platforms provide built-in implementations of several decision-making patterns.

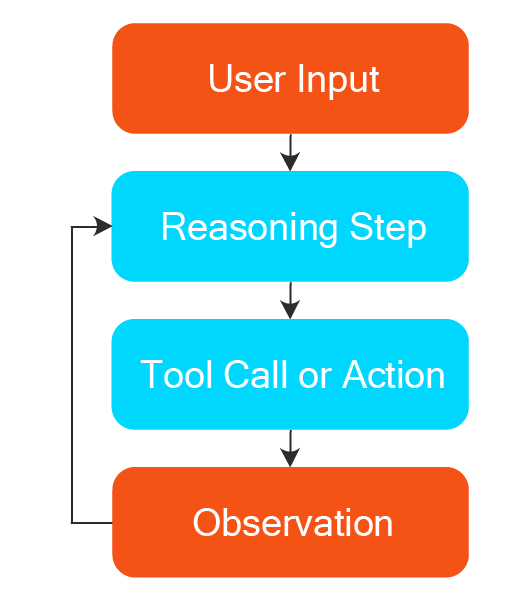

ReAct (Reasoning and Acting) is one such decision-making pattern, widely used for building autonomous agents. ReAct loops make agents think before they act: they iteratively reason about the current state, determine the next action, execute it, and observe the result.

This loop continues until a final response or decision is made. It reflects how agents alternate between thinking and acting.

For example, when answering the question “What is the weather in London and do I need an umbrella?”, the agent follows this loop:

- Reason: I need the current weather in London to answer this question.

- Act: Call a weather API for London.

- Observe: The API returns “light rain”.

- Reason: Since it is raining, the user might need an umbrella.

- Act: Answer the user’s question, suggesting they take an umbrella.
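The Reason-Act-Observe loop in this example can be sketched in a few lines. Here `policy` is a hypothetical, hard-coded stand-in for the LLM's reasoning step and `get_weather` is a stub tool, so the run is deterministic. The `max_steps` cap is an assumed safeguard against loops that never reach a final answer.

```python
from typing import Callable

# Hypothetical tool registry: a real agent would call an actual weather API.
TOOLS: dict[str, Callable[[str], str]] = {
    "get_weather": lambda city: "light rain",
}


def policy(question: str, observations: list[str]) -> tuple[str, str, str]:
    """Stand-in for the LLM's reasoning step (a real LLM would read the question).

    Returns (thought, action, argument); action is a tool name or "finish".
    """
    if not observations:
        return ("I need the current weather in London.", "get_weather", "London")
    if "rain" in observations[-1]:
        return ("It is raining, so an umbrella is useful.", "finish",
                f"Yes, take an umbrella: the forecast is {observations[-1]}.")
    return ("No rain reported.", "finish", "You probably won't need an umbrella.")


def react(question: str, max_steps: int = 5) -> tuple[str, list[str]]:
    observations: list[str] = []
    trace: list[str] = []
    for _ in range(max_steps):
        thought, action, arg = policy(question, observations)  # Reason
        trace.append(f"Reason: {thought}")
        if action == "finish":
            trace.append(f"Act: respond '{arg}'")
            return arg, trace
        result = TOOLS[action](arg)  # Act
        trace.append(f"Act: {action}({arg!r})")
        observations.append(result)  # Observe
        trace.append(f"Observe: {result}")
    return "Stopped: step limit reached.", trace
```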

Function and API integration

Agents must interact with the outside world while making and executing decisions, which requires access to functions and APIs exposed by third-party services. AI agent platforms provide built-in support for function and API integrations, such as:

- Bing, Google, or other search APIs

- Weather and location APIs, such as OpenWeather and the Google Maps API

- Calendar, calculator, email, and other tool APIs
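Many platforms expose tools to the model through function calling. Each function is registered under a name, the model emits a call naming the tool and its arguments, and the platform executes that call. A minimal registry sketch follows. The call format (a `name` plus a JSON-encoded `arguments` string) is loosely modelled on OpenAI-style tool calls and is an assumption here, as are the `add` and `get_weather` tools.

```python
import json
from typing import Any, Callable

TOOL_REGISTRY: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent can call it by name."""
    TOOL_REGISTRY[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    """Calculator tool."""
    return a + b


@tool
def get_weather(city: str) -> str:
    """Hypothetical weather tool; a real one would call a service such as OpenWeather."""
    return f"light rain in {city}"


def dispatch(tool_call: dict[str, str]) -> Any:
    """Execute a tool call of the assumed form {"name": ..., "arguments": "<JSON>"}."""
    name = tool_call["name"]
    if name not in TOOL_REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    kwargs = json.loads(tool_call["arguments"])
    return TOOL_REGISTRY[name](**kwargs)
```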

Agent evaluation and monitoring

AI agents generate variable responses as they follow dynamic reasoning paths and interact with external tools, which introduces challenges to ensuring reliability, safety, and performance. AI agent platforms address this by offering structured evaluation tools and real-time monitoring capabilities.

Monitoring

As in any observability system, an AI agent platform’s monitoring module can log agent actions as traces and spans to provide visibility into agent decisions.

A trace captures the entire execution journey of an agent as it works through a task. It provides a step-by-step record from the initial input to the final output, helping you understand what the agent did, in what order, and why.

A span represents each step in the trace. Spans help isolate specific actions, such as calling an external tool or retrieving memory, making it easier to identify which part of the process is underperforming or failing.
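Here is a minimal tracing sketch. It assumes an in-process recorder rather than any specific platform or OpenTelemetry API. Each task gets one `Trace` and each step gets one `Span`, with timing recorded automatically and an error status set when a step raises.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class Span:
    name: str
    start: float
    end: float = 0.0
    status: str = "ok"

    @property
    def duration(self) -> float:
        return self.end - self.start


@dataclass
class Trace:
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    spans: list[Span] = field(default_factory=list)

    @contextmanager
    def span(self, name: str) -> Iterator[Span]:
        """Record one step of the agent run, marking it as an error if it raises."""
        s = Span(name=name, start=time.perf_counter())
        self.spans.append(s)
        try:
            yield s
        except Exception:
            s.status = "error"
            raise
        finally:
            s.end = time.perf_counter()
```

In use, each agent step runs inside `with trace.span("call_weather_api"):`. A failing tool call then shows up as a span with `status == "error"`, which makes the failing part of the run easy to isolate.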

AI agent platforms also offer dashboards or APIs for tracking performance metrics, which include:

- Latency: The time it takes for the agent to complete a task

- Cost: The cost associated with each interaction with the agent

- Request errors: Failed requests, such as tool call failures, timeouts, or invalid responses

- Accuracy: Whether the agent completed the task correctly or returned an appropriate response

- User feedback: Ratings, likes, dislikes, and comments that provide valuable insight into the agent’s performance
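Several of these metrics reduce to simple aggregations over logged interactions. The sketch below assumes a hypothetical `Interaction` record and placeholder per-token prices. Substitute your own log schema and your provider's actual rates.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Interaction:
    latency_s: float  # wall-clock time to complete the request
    input_tokens: int
    output_tokens: int
    error: bool  # tool failure, timeout, or invalid response
    rating: Optional[int] = None  # optional user feedback, e.g. 1-5 stars


# Hypothetical prices in USD per 1,000 tokens; substitute your provider's real rates.
PRICE_IN_PER_1K = 0.005
PRICE_OUT_PER_1K = 0.015


def summarize_metrics(log: list[Interaction]) -> dict[str, float]:
    """Aggregate latency, cost, error rate, and user feedback over logged interactions."""
    rated = [i.rating for i in log if i.rating is not None]
    cost = sum(
        i.input_tokens / 1000 * PRICE_IN_PER_1K + i.output_tokens / 1000 * PRICE_OUT_PER_1K
        for i in log
    )
    return {
        "avg_latency_s": mean(i.latency_s for i in log),
        "total_cost_usd": cost,
        "error_rate": sum(i.error for i in log) / len(log),
        "avg_rating": mean(rated) if rated else float("nan"),
    }
```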

Evaluation

AI agent platforms also help assess how well an agent completes its tasks. Standard evaluation tests offered by agent platforms include:

- Prompt-response test: Compares the agent’s responses on a test set against ground truth to assess correctness

- Tool usage validation: Checks whether the agent calls the correct tools with the proper parameters

- Human-in-the-loop: Allows human reviewers to rate or annotate outputs, especially for open-ended responses
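The first two tests can be automated with a small harness. This sketch assumes a hypothetical agent interface that returns the answer together with the tool it called and that tool's arguments. Exact-match scoring is a deliberately simple choice; open-ended answers usually need fuzzier metrics or human review.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    expected_answer: str  # ground truth for the prompt-response test
    expected_tool: str  # tool the agent should call
    expected_args: dict = field(default_factory=dict)  # parameters it should pass


# Assumed agent interface: returns (answer, tool_name, tool_args).
AgentFn = Callable[[str], tuple[str, str, dict]]


def evaluate(agent: AgentFn, cases: list[EvalCase]) -> dict[str, float]:
    answer_hits = tool_hits = 0
    for case in cases:
        answer, tool_name, tool_args = agent(case.prompt)
        # Prompt-response test: case-insensitive exact match against ground truth.
        if answer.strip().lower() == case.expected_answer.strip().lower():
            answer_hits += 1
        # Tool usage validation: the right tool *and* the right parameters.
        if tool_name == case.expected_tool and tool_args == case.expected_args:
            tool_hits += 1
    n = len(cases)
    return {"answer_accuracy": answer_hits / n, "tool_accuracy": tool_hits / n}
```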

Popular AI agent platforms

AI agent platforms fall into two categories: code-based platforms and no-code/low-code platforms. No-code platforms try to strike a balance between flexibility and ease of implementation.

Code-based implementation

Code-based platforms require knowledge of programming languages, such as Python, and an understanding of the SDKs provided by the agent platforms. They provide high flexibility during implementation, but at the cost of requiring higher engineering depth and a steeper learning curve. A few of the popular platforms are:

CrewAI

CrewAI is a multi-agent framework in which each agent has a clearly defined role, structure, and memory. It supports two process types: sequential and hierarchical. A sequential process executes tasks in a predefined order, whereas a hierarchical process uses a manager agent that coordinates the crew and delegates tasks to other agents.
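The difference between the two process types can be illustrated in plain Python. This is not CrewAI's API: CrewAI itself models these with `Agent`, `Task`, and `Crew` objects and a `Process` setting. `CrewMember`, `run_sequential`, `run_hierarchical`, and the lambda-based members are hypothetical stand-ins for LLM-backed agents.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CrewMember:
    role: str
    work: Callable[[str], str]  # stand-in for the member's LLM-backed behaviour


def run_sequential(members: list[CrewMember], task: str) -> str:
    """Sequential process: members run in a fixed order, each building on the last output."""
    result = task
    for member in members:
        result = member.work(result)
    return result


def run_hierarchical(
    manager: Callable[[str], str],
    crew: dict[str, CrewMember],
    subtasks: list[str],
) -> list[str]:
    """Hierarchical process: a manager decides which member handles each subtask."""
    results = []
    for subtask in subtasks:
        role = manager(subtask)  # delegation decision, made by an LLM in practice
        results.append(f"{role}: {crew[role].work(subtask)}")
    return results
```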

AutoGen by Microsoft

AutoGen lets you build multi-agent systems in which agents converse with each other and collaborate to solve tasks. AutoGen treats agents as conversational entities, such as assistants or user proxies, that interact with each other and with external tools or humans.
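The conversational model can be sketched as two participants passing messages back and forth until one signals completion. This plain-Python sketch is not AutoGen's API. `ChatParticipant`, `converse`, and the `TERMINATE` keyword check are illustrative assumptions, and each `reply` function stands in for an LLM, a tool, or a human.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ChatParticipant:
    name: str
    reply: Callable[[str], str]  # stand-in for an LLM, a tool, or a human


def converse(
    a: ChatParticipant, b: ChatParticipant, opening: str, max_turns: int = 6
) -> list[str]:
    """Alternate messages between two participants until one says TERMINATE."""
    transcript = [f"{a.name}: {opening}"]
    message, speaker = opening, b
    for _ in range(max_turns):
        message = speaker.reply(message)
        transcript.append(f"{speaker.name}: {message}")
        if "TERMINATE" in message:
            break
        speaker = a if speaker is b else b  # hand the turn to the other participant
    return transcript
```

The `max_turns` cap is a design choice that keeps two agents from talking indefinitely if neither ever signals completion.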

LangGraph

LangGraph provides a graph-based workflow for implementing agents. It maintains a shared agent state across the graph, allowing memory and context to carry through the workflow. LangGraph can build complex, non-linear AI agents, with branches and loops, while still embedding predefined sequential logic.

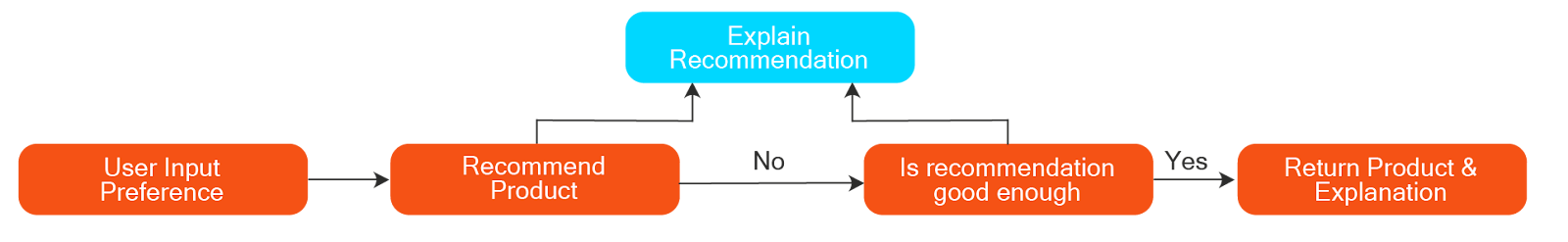

Let’s use LangGraph to build a functional agent that recommends products based on user preferences. The agent takes a phrase describing a user’s preference, generates a product recommendation, explains why the product fits, and then checks whether the recommendation is relevant.

Our code example uses LangGraph nodes, state, and edges to create the workflow. The state evolves as the workflow progresses until the task is complete. If the quality check decides the recommendation is not a good match, the agent routes back to the recommendation node and tries again.

```python
from typing import TypedDict

from langchain_openai import ChatOpenAI
from langgraph.graph import END, StateGraph


# Define the shared state passed between nodes
class RecommendationState(TypedDict):
    preference: str
    product: str
    explanation: str
    is_match: str


model = ChatOpenAI(model="gpt-4o", temperature=0, max_retries=2)


# Thin wrapper around the chat model: returns only the text of the reply
def llm(prompt: str) -> str:
    return model.invoke(prompt).content


# Node 1: Recommend a product (nodes return only the state keys they update)
def recommend_product(state: RecommendationState) -> dict:
    return {"product": llm(f"Recommend a product for someone who likes: {state['preference']}")}


# Node 2: Explain the recommendation
def explain_recommendation(state: RecommendationState) -> dict:
    return {
        "explanation": llm(
            f"Explain why '{state['product']}' is a good match for someone who likes: {state['preference']}"
        )
    }


# Node 3: Ask the LLM whether the recommendation is relevant and record the verdict
# in is_match. Replace this with your own quality-check logic if needed.
def check_quality_node(state: RecommendationState) -> dict:
    verdict = llm(
        f"Answer Yes if '{state['product']}' is a relevant recommendation for "
        f"'{state['preference']}', otherwise answer No"
    )
    return {"is_match": "Yes" if "yes" in verdict.lower() else "No"}


# Routing function: returns the next node name, or END, based on the state
def check_quality_router(state: RecommendationState) -> str:
    if state["is_match"] != "Yes":
        return "recommend_node"
    return END


# Create the graph shown in the diagram
graph = StateGraph(RecommendationState)

# Add nodes
graph.add_node("recommend_node", recommend_product)
graph.add_node("explain_node", explain_recommendation)
graph.add_node("check_node", check_quality_node)

# Conditional edge: the router decides what follows check_node
graph.add_conditional_edges("check_node", check_quality_router)

# Set the entry point and connect the remaining edges
graph.set_entry_point("recommend_node")
graph.add_edge("recommend_node", "explain_node")
graph.add_edge("explain_node", "check_node")

# Compile the graph
recommendation_agent = graph.compile()

# Run with an initial state; recursion_limit stops the run with an error
# if the quality check keeps failing and the loop never ends
initial_state = RecommendationState(
    preference="outdoor adventures and hiking",
    product="",
    explanation="",
    is_match="No",
)
result = recommendation_agent.invoke(initial_state, {"recursion_limit": 10})

# Output
print("\nFinal Product:", result["product"])
print("Explanation:", result["explanation"])
print("Is the recommended product relevant:", result["is_match"])
```

Sample output from one run (abridged; LLM responses vary between runs):

```text
Final Product: For someone who enjoys outdoor adventures and hiking, a versatile and high-quality product
to consider is the **Garmin GPSMAP 66i**. This device combines a reliable GPS navigator with inReach satellite communication
technology, making it perfect for outdoor enthusiasts who venture into remote areas. It offers detailed topographic maps,
weather forecasts, and the ability to send and receive messages or trigger an SOS in emergencies, even when out of cell phone range.
This ensures both safety and convenience during hiking trips. Additionally, its rugged design is built to withstand harsh
outdoor conditions, making it a durable companion for any adventure.

Explanation: The content provided is a good match for someone who enjoys outdoor adventures and hiking for
several reasons:

1. **Versatile and High-Quality Product**: The Garmin GPSMAP 66i is described as a versatile and high-quality
device, which is important for outdoor enthusiasts who need reliable equipment.

2. **GPS Navigation and Satellite Communication**: The combination of GPS navigation and inReach satellite
communication technology makes it ideal for those venturing into remote areas where cell phone coverage may be
unavailable. This feature is crucial for navigation and safety.

3. **Detailed Topographic Maps and Weather Forecasts**: Access to detailed maps and weather forecasts enhances
the hiking experience by helping users plan their routes and stay informed about weather conditions.

4. ...

Is the recommended product relevant: Yes
```

The code above shows an agent recommending a product. Execution begins with the initial state, which specifies the preference “outdoor adventures and hiking.” At each node, the LLM is invoked to update the state according to the node’s purpose. At Node 3, the LLM judges whether the recommendation is relevant. If the check passes, the workflow ends and returns the product and its explanation; otherwise, the router sends the process back to the recommendation node.

No-code platforms

No-code platforms offer a visual interface and built-in templates, making AI agent development accessible to everyone. They provide the following advantages:

- Lower barrier to entry: Users don’t need to write Python scripts or manage APIs. They can drag, drop, and configure components visually

- Faster prototyping and deployment: With prebuilt templates, modular components, and visual editors, users can quickly build and test AI agents

- Ease of use: No-code tools offer intuitive interfaces with guided flows, form-based configuration, and visual blocks for defining logic

- Business-user friendly: They enable business users to build or customize AI agents directly, as no-code platforms abstract technical complexity

- Cost-effective: No-code platforms lower overall costs by reducing development time and eliminating the need for specialized engineers for every task

FME by Safe Software is a no-code platform that enables easy AI agent development through a visual, drag-and-drop interface for creating complex workflows. It’s especially strong when the agent needs to work with many different data sources or enterprise systems.

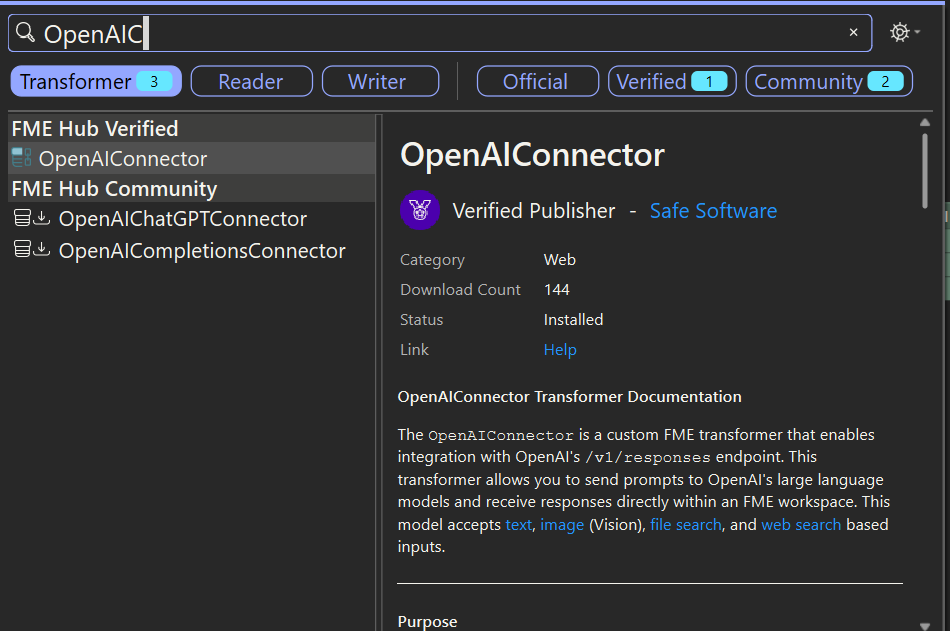

Let’s implement the same workflow in FME. In a nutshell, this requires a user input block and three OpenAI calls from within FME. From within FME Workbench, you can add the OpenAIConnector transformer as shown below.

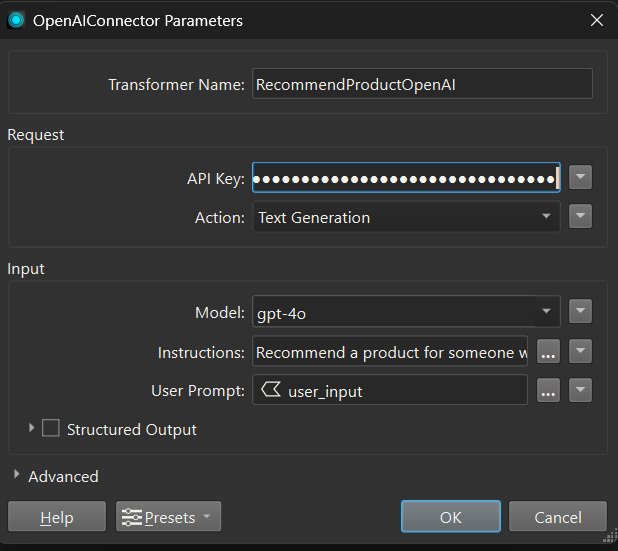

After adding the OpenAIConnector transformer, configure the API key and prompt in its settings pane.

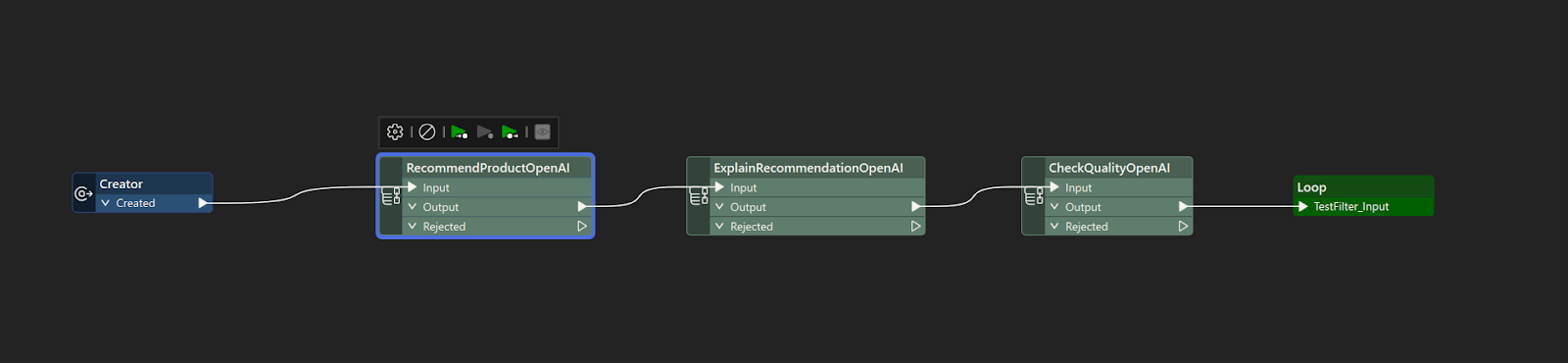

Our workflow will involve three such OpenAIConnector blocks, one each for recommending a product, explaining recommendations, and checking the quality of recommendations. The complete implementation will look as follows.

FME comes with a comprehensive library of built-in transformers, making it easy to implement data or AI integration workflows with just a few clicks. The full list of transformers supported by FME can be found here.

Key considerations when choosing an AI agent platform

As the ecosystem of AI agent platforms grows, selecting the right platform and using it effectively can make or break your project.

Before diving into platform comparisons, clearly define the problem the agent should solve. A clear objective sets the foundation for all your technical choices.

Different platforms are optimized for various kinds of agents. Select a platform that aligns with your use case, such as retrieval, automation, or chat. Decide whether you need LLMs, function calling, or API integration functionality. Adding too many features or tools without a clear purpose can overload your agent and make it harder to maintain.

Instead of building a complex agent upfront, start small and then iterate:

- Create a minimum viable agent with just one or two core capabilities

- Test it in real-world scenarios or with mock users and evaluate the performance

- Expand on the agent’s capabilities by adding logic, tools, or prompts as you validate what works

Modern platforms support incremental development through the use of modular components, versioning, and testing sandboxes.

Here are the key factors to consider when selecting and working with an AI agent platform.

LLM and model abstraction

Your platform should facilitate integration with LLMs from various providers so that you can choose the right model for each task. Model abstraction gives you flexibility in cost, performance, and control.

- A platform that supports various providers, such as OpenAI, Anthropic, and Mistral, lets users compare models and choose the best one

- A unified API or abstraction layer ensures you can swap models with minimal code changes and simplifies experiments

- A platform should allow control over model parameters, such as temperature, to tune model behaviour

API and tool integration support

Your AI agents should do more than just generate text. They should take actions, retrieve data, and interact with other systems. A good platform enables easy and secure integration with external tools and APIs.

- Agents should be able to retrieve or update information from internal databases or business software

- Whether it’s scheduling meetings, creating tasks, or sending emails, the agent should be able to act on user intent

- The platform should allow you to connect the agent to internal APIs or utilities through function calling or tool wrappers

Look for platforms that offer:

- Prebuilt integrations with tools like Zapier, Slack, Google Sheets, or CRMs, which enable quick setup without heavy engineering effort

- Secure function calling to invoke APIs with appropriate permissions and safeguards, which is essential for maintaining reliability

- Plugin architecture or SDKs that allow developers to define and register custom tools that the agent can use

Memory and context management

For AI agents to respond intelligently, they must remember past interactions and use the provided knowledge base to apply that context appropriately. Both short-term and long-term memory should be easily integrated.

Choose platforms that offer configurable memory management. This allows you to define how much context is retained, when it resets, and how it integrates with external memory backends.

Guardrails and safety controls

AI agents can sometimes produce incorrect, biased, or inappropriate outputs. To mitigate these risks, platforms must support guardrails that enforce safe, reliable behaviour:

- Limit tool access by allowing agents to use only approved APIs or functions, ensuring secure and controlled behaviour

- Validate output formats using schema enforcement or regular expressions to ensure consistency

- Filter for unsafe, offensive, or harmful content using content moderation models or predefined rules

- For sensitive actions, implement human-in-the-loop mechanisms that pause the workflow until a person approves the agent’s decision
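Here is a minimal sketch of these guardrails. It relies on four assumptions:

- A hypothetical tool allowlist limits which tools the agent may call.
- A regex output format (`ORD-` followed by six digits) stands in for schema enforcement.
- A toy keyword blocklist stands in for a real moderation model.
- An `approve` callback represents the human reviewer.

```python
import re
from typing import Callable

ALLOWED_TOOLS = {"search", "get_weather"}  # hypothetical allowlist
SENSITIVE_TOOLS = {"send_email"}  # actions that need a human sign-off
BLOCKLIST = re.compile(r"\b(password|ssn)\b", re.IGNORECASE)  # toy content filter
ORDER_ID = re.compile(r"ORD-\d{6}")  # example expected output format


def check_tool(name: str, approve: Callable[[str], bool]) -> bool:
    """Allow approved tools, pause sensitive ones for a human decision, block the rest."""
    if name in SENSITIVE_TOOLS:
        return approve(name)  # in production this would wait for a reviewer
    return name in ALLOWED_TOOLS


def check_output(text: str, pattern: re.Pattern = ORDER_ID) -> list[str]:
    """Return a list of guardrail violations for an agent's final output."""
    problems = []
    if not pattern.fullmatch(text):
        problems.append("format")
    if BLOCKLIST.search(text):
        problems.append("unsafe content")
    return problems
```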

Monitoring and evaluation

To ensure agents perform as expected and improve over time, platforms must offer strong monitoring and evaluation tools:

- Track key metrics, such as latency, token usage, API costs, and error rates, to assess system health

- Collect user feedback or satisfaction scores to measure real-world impact

- Visualise conversation traces to see step-by-step execution, including which tools were called and what outputs were generated

- Log failed interactions and hallucinations to identify issues, then improve the agent’s accuracy by refining its prompts, logic, or tools

Secure API and key management

Agents often need to access sensitive systems or data, so secure integration is essential. Your platform should enforce strong security practices:

- Store secrets (like API keys) securely using environment files or built-in secret managers

- Apply rate limiting, authentication, and role-based access control to protect endpoints

- Encrypt sensitive data in transit and at rest, and enable audit logging to track access and usage

- Prefer platforms that offer secure proxying for APIs and support authentication standards like OAuth, JWT, or API token management
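This minimal sketch shows two of these practices. First, it reads an API key from an environment variable rather than hardcoding it. Second, it puts a token-bucket rate limiter in front of an endpoint. The variable name `OPENAI_API_KEY` and the bucket parameters are examples only. A production deployment would more likely rely on a secret manager and the platform's built-in rate limiting.

```python
import os
import time
from typing import Callable


def load_api_key(var: str = "OPENAI_API_KEY") -> str:
    """Read a secret from the environment instead of hardcoding it in source."""
    key = os.environ.get(var)
    if not key:
        raise RuntimeError(f"{var} is not set")
    return key


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` requests per second."""

    def __init__(
        self, capacity: int, rate: float, clock: Callable[[], float] = time.monotonic
    ) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock  # injectable so tests can control time
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```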

No-code vs. code-based development

AI agent platforms come in both visual (no-code) and programmable (code-based) formats, each catering to different user needs:

- No-code tools like FME, Flowise, or Cognosys allow business users to design agents through drag-and-drop interfaces, making it easy to prototype and launch without writing code

- Code-first platforms, such as LangGraph or AutoGen, are ideal for engineers building highly customizable agents that require orchestration, branching logic, or deep integration

Some modern platforms combine both approaches, allowing teams to transition from visual flows to code as complexity increases.

Evaluation and testing capabilities

Before deploying an agent into production, rigorous testing is essential to ensure reliability, accuracy, and overall performance:

- Run simulations across everyday user journeys and edge cases to ensure effectiveness and robustness

- Test the agent’s response to adversarial or poorly structured prompts to identify vulnerabilities that could be exploited or cause failure in the real world

- Validate how well the agent selects and invokes tools, APIs, or functions in different scenarios depending on the task

Look for platforms that support automated testing suites, regression checks on prompt or model changes, and dataset-driven validation for consistent input-output behaviour.

Conclusion

AI agent platforms simplify the creation of autonomous systems. From code-first frameworks like LangGraph to no-code tools like FME, there’s a solution for every team and use case. By considering factors such as LLM support, flexibility, integration capabilities, and guardrails, you can select the right platform for your specific requirements and build agents that are not just smart but also dependable and adaptable.

Choose wisely, start small, and scale responsibly.
