Designing with scalability in mind is no longer optional. As organizations accumulate more data, expand into cloud and hybrid environments, and bring AI into production, the ability to architect future-ready systems is critical.

At the Peak of Data & AI 2025, we had 3 standout sessions that discussed practical strategies for future-proofing your FME deployments. Watch the recap recording here, and read on for our top takeaways.

FME Flow architecture design for modern enterprises

In the first session, Théo Drogo and Jean-Nicholas Lanoue of Consortech discussed crucial architectural choices for ensuring an FME Flow deployment will thrive at scale. Whether you’re running a small setup or rolling out FME across the enterprise, design decisions around performance, cost, and scalability are key. By planning ahead and choosing the right architecture early, you can build an environment that’s both cost-effective today and ready for tomorrow’s demands.

Top tips:

-

Start small, but design with horizontal scaling in mind. Containerized deployments of FME Flow make it easier to add capacity without major redesign.

-

Decouple core FME Flow services (engines, web services, database) so each can scale independently. For example, run engines on separate VMs or Kubernetes pods.

-

Consider whether cloud or on-premises licensing will give you the best ROI for predictable versus burst workloads.

-

Tools like load balancers, autoscaling groups, and centralized logging are essential for visibility and uptime.

- Document your scaling strategy early, even if you don’t need it yet. Define thresholds for when to add engines or scale out nodes, and automate scaling policies where possible.

Their session was full of practical advice and real-world examples for deploying FME Flow with the future in mind. By designing thoughtfully up front, performance bottlenecks and costly re-architecture are avoidable, ensuring your enterprise runs smoothly from now into the future.

Using FME and Excel to develop schemas and generate GIS feature classes and tables

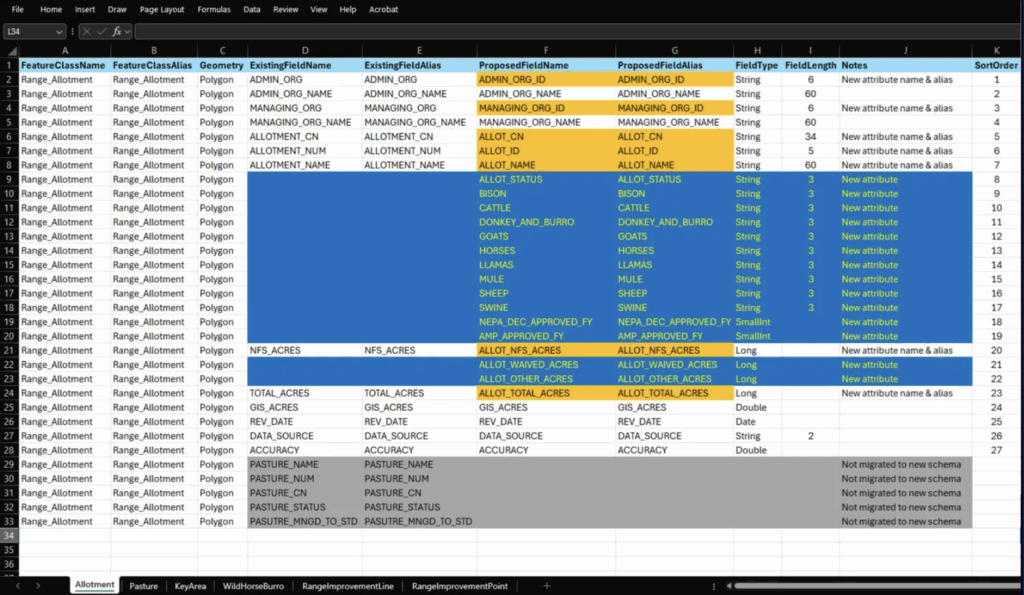

Schema design is one of the most common sources of project delays, especially when non-GIS stakeholders are involved. In the second session, Mark McCart of SAIC discussed a creative way to bridge communication between GIS and non-GIS teams: Excel.

By extending a simple Excel spreadsheet with a few extra columns, teams can design schemas in a way that’s easy to visualize, validate, and share across departments. The same file can then be used directly in FME to generate GIS feature classes and tables. This approach not only reduces errors, but also speeds up collaboration and delivery timelines. He demonstrated how Excel can be a schema blueprint, improving clarity and alignment between technical and business stakeholders.

Top tips:

-

Adding a few custom columns (field type, length, nullability, etc.) transforms a spreadsheet into a living schema specification.

-

Validate before deployment. By sharing the Excel schema with business teams, you get sign-off and prevent downstream issues.

-

Once approved, the same file can drive FME workspaces that create feature classes and tables, ensuring consistency and eliminating manual translation.

-

Standardize your schema Excel template and reuse it across projects.

-

Automate schema validation in FME: add a QA step to flag mismatches between Excel definitions and actual data.

-

Store schema spreadsheets in version control (e.g., Git or SharePoint) to track changes over time.

Treating Excel like a schema “source of truth” saves time, reduces errors, and aligns technical and non-technical teams around the same blueprint.

Extracting value from images with computer vision and GenAI

Unstructured image archives (“dark data”) grow faster than most teams can manage. Without automation, valuable insights remain locked away. In the final session, David Eagle of Avineon Tensing focused on unstructured image data, one of the most underutilized assets in many organizations.

Using FME Flow, he demonstrated how to integrate computer vision and pre-trained machine learning models to automatically tag, classify, and connect images to systems like CRM or DAM platforms. This transforms dark data into actionable insights at scale.

He gave a generative AI demo that showed the “power of the prompt” in extracting meaning from images. The combination of automation and AI opens exciting new possibilities for unlocking value from growing image archives.

Top tips:

-

Pre-trained ML models can classify, tag, or detect features in imagery, automatically enriching metadata.

-

FME Flow can push extracted metadata into CRMs, DAMs, or spatial databases, linking unstructured images with structured records.

-

Prompt-based tools can summarize or describe image content, extending the value of ML-based tagging.

-

Batch-processing pipelines, GPU-backed infrastructure, and asynchronous execution are critical when working with large image collections.

-

Use pre-trained models first (e.g., object detection, OCR) before considering custom training — this accelerates time-to-value.

-

Combine ML outputs with spatial attributes: geotag images and load into spatial databases for richer queries.

-

Monitor accuracy. Store confidence scores with results, and design workflows that flag low-confidence outputs for human review.

Automating image analysis with FME Flow transforms image repositories from “storage costs” into “searchable assets,” which is a key step toward operationalizing AI at scale.

Learn More

Scalable design is about building systems that evolve with your organization. We hope these presentations help and inspire you to equip your organization for long-term success with FME. To learn more, check out the following resources: