Deploying AI across an organization comes with many unknowns and challenges. Where do you even start? Which model do you choose? Can it handle complex data? How do you scale prompting so it’s useful for enterprise use cases? What about data privacy and compliance?

In this post, we’ll address these questions and share some tips for successful, scalable AI deployments, including:

- Choosing the right AI model

- Integrating AI with various data types

- Deploying AI securely and cost-effectively, whether on-premises or in the cloud

- Scaling prompts into automated workflows

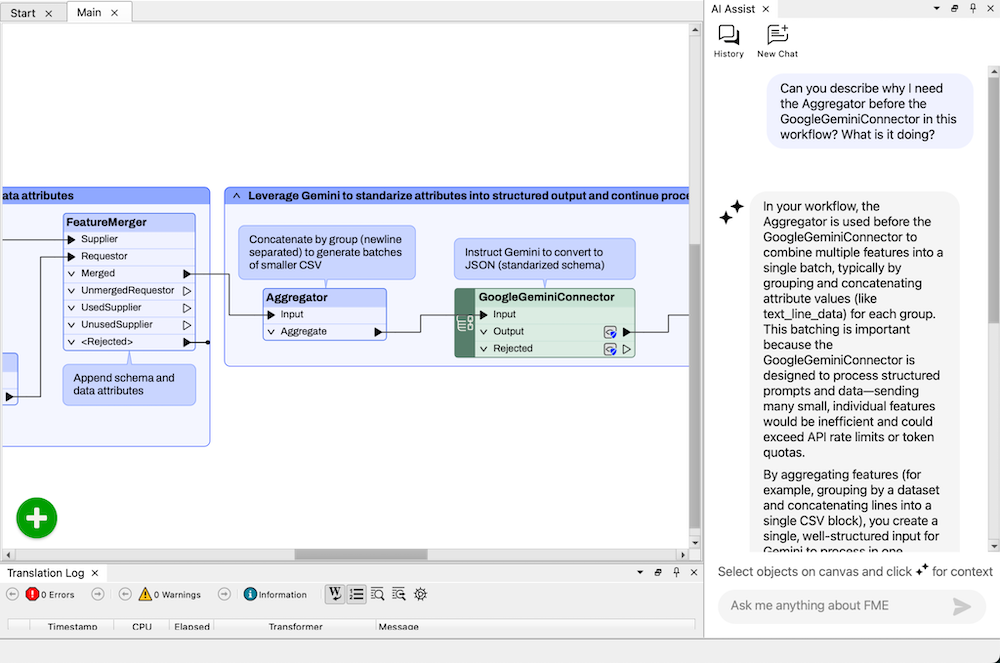

We’ll discuss how to deploy AI using FME as the orchestrator. FME can integrate AI models with enterprise data securely and at scale, with no coding involved.

For a deep dive on this topic, watch our webinar, 5 Things to Consider When Deploying AI in Your Enterprise.

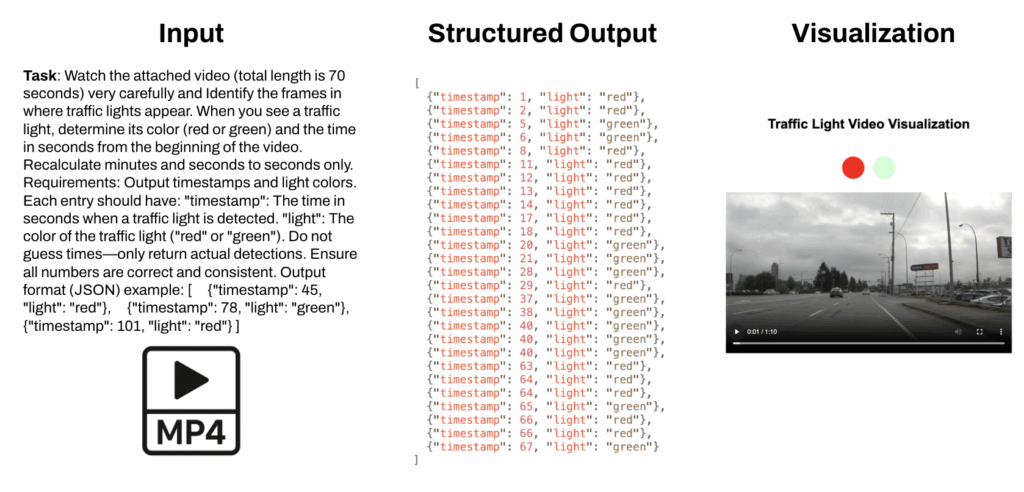

Embrace multimodal AI to work with various data types

Multimodal AI models can process images, videos, audio, PDFs, and more, which widens the range of workflows you can automate. For example:

- Submit videos for object detection or speech analysis

- Extract tables from scanned PDFs

- Analyze spatial data in augmented reality (AR) applications

- Chain together tasks like classification, insight extraction, and tagging

FME’s connector transformers (e.g., for OpenAI, Google Gemini, Ollama) make it easy to connect to various AI models, allowing you to work with whichever model best suits your needs. Within FME, you can also connect to large datasets, apply tools like web search or vision analysis, and manage errors with retry logic.
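FME handles retries without code, but it can help to see what retry logic does in principle. The following is a minimal Python sketch of retrying a failing call with exponential backoff. The names `call_with_retry` and `fn` are hypothetical, chosen for illustration; they are not part of FME or of any model provider's SDK.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    fn: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on any exception with exponential backoff.

    The delay doubles after each failed attempt: base_delay, 2x, 4x, ...
    The last failure is re-raised so the caller can handle it.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the original error
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")  # loop always returns or raises
```

In practice you would likely retry only on transient errors (timeouts, rate limits) rather than on every exception; the broad `except` here just keeps the sketch short.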

Understand Chat AI vs. Data Integration AI

Most people are familiar with chat-style AI tools, but in enterprise settings, the real power often comes from structured, repeatable processes.

Chat-style AI is interactive, human-driven, and multi-turn.

Data integration AI is about using models to generate structured outputs that can be reused and processed at scale.

With FME, you can add AI to your automated workflows for:

- Schema mapping

- Data classification

- Entity extraction

- Standardizing inputs (e.g., names, acronyms)

- And more

How to choose the right AI model

There’s no one-size-fits-all AI model. The best model depends on what you’re trying to achieve, whether that’s text summarization, image recognition, classification, or reasoning.

In FME, you can connect to various AI services, including OpenAI, Google Gemini, Amazon Bedrock, Azure OpenAI, and more. You can:

- Use marketplace models on platforms like Hugging Face

- Chain together services (e.g., use a low-cost model for classification, then a more powerful one for reasoning)

- Switch models as needed without rebuilding your entire workflow
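To make the chaining idea concrete, here is a rough Python sketch of a two-stage pipeline: a low-cost step labels every item, and only the items that need it are passed to a more capable step. Both model functions are hypothetical stand-ins (simple string logic, no real API calls), and they are passed in as parameters so that either one can be swapped without touching the rest of the flow.

```python
from typing import Callable, Optional

ModelFn = Callable[[str], str]


def cheap_classifier(text: str) -> str:
    """Hypothetical stand-in for a low-cost model: keyword-based labelling."""
    lowered = text.lower()
    return "complaint" if "refund" in lowered or "broken" in lowered else "other"


def strong_reasoner(text: str) -> str:
    """Hypothetical stand-in for a more capable (and more expensive) model."""
    return f"Escalation summary: {text[:40]}"


def triage(
    text: str,
    classify: ModelFn = cheap_classifier,
    reason: ModelFn = strong_reasoner,
) -> dict:
    """Classify every item cheaply; call the expensive step only for complaints."""
    label = classify(text)
    analysis: Optional[str] = reason(text) if label == "complaint" else None
    return {"text": text, "label": label, "analysis": analysis}
```

Because `classify` and `reason` are plain arguments, replacing one model with another is a one-line change at the call site, which mirrors the "switch models without rebuilding" point above.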

Flexibility is key in a fast-moving AI landscape. What’s best today might not be best tomorrow, so it’s important to employ tools like FME that help you stay agile.

Consider cloud vs. on-premises deployments

Security, cost, and data sovereignty are central to any AI strategy. Whether you deploy in the cloud or on-premises depends on your organization’s infrastructure and governance needs.

- Cloud is ideal for scalability and access to the latest models. It’s easier to start with and can handle massive workloads.

- On-premises gives you complete control over your data, which is crucial for regulated industries and sensitive information.

- Hybrid setups are increasingly popular, letting you use the cloud for general tasks and on-premises for private or critical data.

FME supports all three approaches. You can even orchestrate hybrid deployments using remote engine services and automations to control where and how jobs run.
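As an illustration of the routing decision behind a hybrid setup, the sketch below sends any record containing a sensitive field to an on-premises target and everything else to the cloud. The field names and the two target labels are assumptions made up for this example; in FME the equivalent decision would be configured in the workflow rather than written as code.

```python
# Field names that mark a record as sensitive (assumed for illustration).
SENSITIVE_FIELDS = frozenset({"ssn", "patient_id", "account_number"})


def choose_target(record: dict) -> str:
    """Return 'on_prem' if the record has any sensitive field, else 'cloud'."""
    return "cloud" if SENSITIVE_FIELDS.isdisjoint(record) else "on_prem"


def route(records: list[dict]) -> dict[str, list[dict]]:
    """Group records by the environment that should process them."""
    routed: dict[str, list[dict]] = {"cloud": [], "on_prem": []}
    for record in records:
        routed[choose_target(record)].append(record)
    return routed
```

A real policy would usually depend on more than field names (for example data classification tags or jurisdiction), but the shape of the decision stays the same.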

For scalability, use batch processing and reusable prompts

Prompt engineering is arguably the most important part of AI success. Manual prompting doesn’t scale, so production workflows need data-driven prompts built from dynamic attributes, user parameters, and deployment parameters. In FME, you can:

- Concatenate prompts based on incoming data

- Reuse prompts across projects and teams

- Store prompts securely with deployment parameters in FME Flow
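The idea of a data-driven prompt can be sketched in a few lines of Python: one reusable template, filled in from the attributes of each incoming record. The template wording and the attribute names (`asset_type`, `description`) are assumptions for illustration, not taken from an FME workspace.

```python
from string import Template

# One reusable template; $placeholders are filled from each record.
PROMPT_TEMPLATE = Template(
    "You are classifying $asset_type inspection notes.\n"
    "Note: $description\n"
    "Answer with exactly one label from: $labels"
)


def build_prompt(record: dict, labels: list[str]) -> str:
    """Build a prompt from a record's attributes and the allowed labels.

    Raises KeyError if the record lacks a required attribute, so bad
    input fails before any model call is made.
    """
    return PROMPT_TEMPLATE.substitute(
        asset_type=record["asset_type"],
        description=record["description"],
        labels=", ".join(labels),
    )
```

Keeping the template separate from the data is what makes it reusable across projects: the same text can be stored once and applied to any record that carries the expected attributes.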

Structured outputs (like JSON) make it easy to process results downstream, whether you’re writing to a database, generating reports, or powering dashboards.
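Structured output is only useful downstream if it is checked first, since a model can return prose or incomplete JSON. Here is a minimal sketch of that check, assuming the model was asked for a JSON object with `category` and `confidence` keys (both key names are hypothetical).

```python
import json

# Keys we assume the prompt asked the model to return.
REQUIRED_KEYS = {"category", "confidence"}


def parse_model_output(raw: str) -> dict:
    """Parse a model response as JSON and verify the expected keys exist.

    Raises ValueError for non-JSON text, non-object JSON, or missing keys.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model did not return valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data
```

Responses that fail this check can be retried or routed to a review queue instead of being written to a database or dashboard.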

Batch processing lets you apply the same prompt logic to every record in a dataset in a single run, moving beyond experimentation to production-scale AI.
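As a rough picture of what batching means in practice, the sketch below splits a stream of records into fixed-size chunks and hands each chunk to a handler. The handler stands in for whatever does the real work (for example, one model request per chunk); the function names and the batch size are assumptions for illustration.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator


def chunked(items: Iterable, size: int) -> Iterator[list]:
    """Yield successive lists of up to `size` items from any iterable."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    while chunk := list(islice(iterator, size)):
        yield chunk


def process_in_batches(
    records: Iterable,
    size: int,
    handler: Callable[[list], list],
) -> list:
    """Run handler once per chunk and collect all results in input order."""
    results: list = []
    for chunk in chunked(records, size):
        results.extend(handler(chunk))
    return results
```

Because `chunked` consumes the input lazily, it works for large datasets that should not be loaded into memory all at once.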

Get started with AI for your enterprise

AI is a powerful enabler, but smart implementation is critical. By following the above tips and using FME to orchestrate your AI workflows, you can:

- Work with all data types, including text, spatial, images, audio, video, PDFs, and more

- Deploy AI in the cloud, on-premises, or both

- Choose the best AI models for your needs

- Automate and scale your workflows with confidence

Whether you’re building your first proof of concept or rolling out enterprise-wide automations, we’re here to support your journey.