This work was done in collaboration with Safe Software partner Locus.

The FME Platform is a powerful solution designed to tackle complex data and integration challenges within organisations. Unlike traditional code-based tools, FME’s visual interface makes it more accessible and user-friendly, while still offering the advanced capabilities needed to handle sophisticated workflows.

From small-scale tasks to enterprise solutions, FME provides the flexibility and robustness to meet diverse needs. However, regardless of the size or scope of the project, there are key considerations that can make the difference between a good solution and a truly great one.

Leverage FME Reusability

FME offers an extensive array of transformers and support for nearly any data format, making it one of the most flexible platforms for data integration. This flexibility enables organisations to create highly customisable workflows that integrate systems and data in many different ways.

As organisations grow, they often find themselves performing similar tasks across multiple workflows, whether that is reading or writing data, transforming data, triggering processes in external systems, or enforcing business rules. While these tasks can be recreated in individual workspaces, a more reliable approach is to leverage FME’s enterprise capabilities.

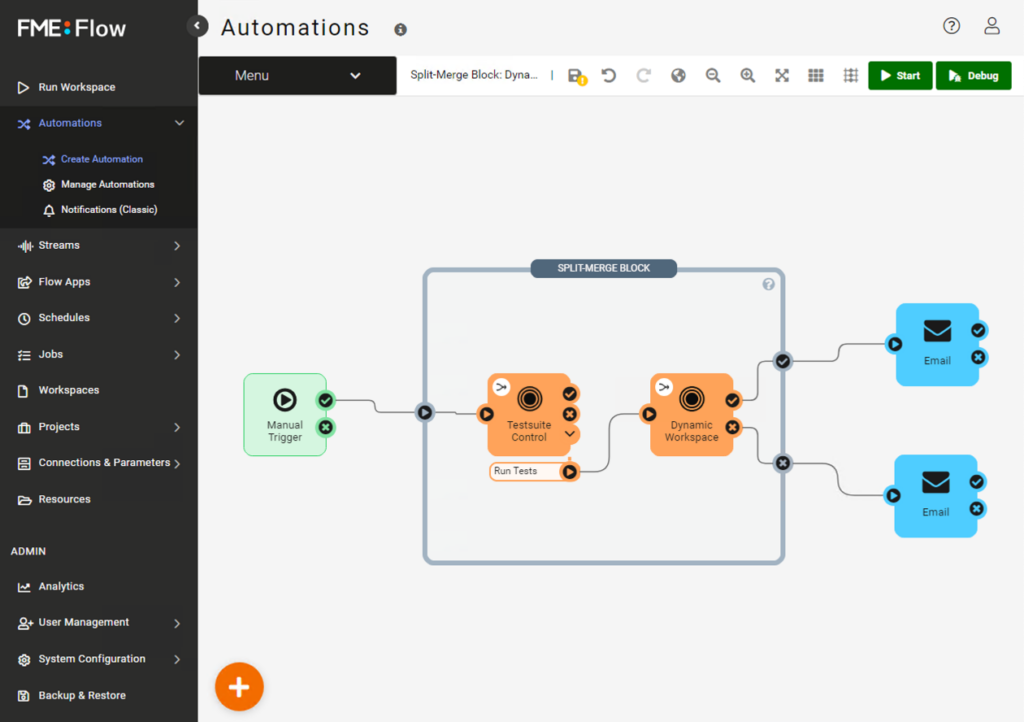

By utilising features like shared custom transformers and FME Flow Automations, you can centralise the logic for these repetitive tasks. This approach offers several benefits:

- Centralised Management: Changes, such as updates to a REST API or modifications to business rules, can be made in a single location.

- Consistency: Repeatable and reusable logic ensures that workflows remain consistent across the organisation.

- Ease of Maintenance: Instead of identifying and updating every workspace affected by a change, you can update the shared logic in one place.

For example, a custom transformer used across multiple workspaces or an automation managing shared logic simplifies updates and reduces redundancy. This modular approach not only saves time but also enhances the reliability and scalability of your FME processes.

Mixing Event-Based and Bulk Processing

FME Flow is a dynamic and powerful platform that supports various integration patterns, allowing you to tailor processes based on triggers, data flow, frequency and volume. These methods generally fall into two categories: batch processing and continuous data ingestion.

Understanding the business requirements driving the solution is crucial. What role does the incoming data play, and what insights need to be extracted? Answering these questions ensures you build a solution that solves the underlying problem.

While the choice is not always binary, batch processing typically involves scheduled, periodic data handling. Examples include weekly updates to cadastral data or daily reports on asset performance.

In these scenarios, FME reads data from external sources at defined intervals, resulting in a more predictable and structured process. In contrast, continuous data ingestion caters to real-time or on-demand data flows. Examples include location pings from assets or measurements from remote sensors. Here, data is pushed to FME and processed as it arrives, supporting fluctuating and immediate needs.

An important distinction between these paradigms lies in how the data is handled during triggering. For batch processing, a simple ‘tap on the shoulder’ is sufficient; the process itself discovers the data it needs to operate on. In contrast, event- or stream-based workflows typically include the data to be processed as part of the trigger.

Carelessly mixing these paradigms can result in inefficiencies and risks. A common example is using an event-based message as a trigger, but then having the process read, compare, and write large volumes of data each time a message arrives.

In the best case, this additional overhead consumes resources on FME Flow. In the worst case, the overhead degrades system performance, affecting other workloads or even system stability.
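The cost of mixing the two paradigms can be illustrated with a minimal Python sketch. This is not FME code: `Record`, `SOURCE`, `discover_all` and the three handler functions are hypothetical names, and counting "records touched" is a simplified stand-in for real read, compare and write work.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class Record:
    asset_id: str
    value: float


# Hypothetical stand-in for an external source such as a database table.
SOURCE: List[Record] = [Record(f"asset-{i}", float(i)) for i in range(10_000)]


def discover_all() -> Iterable[Record]:
    """Bulk read: return every record in the source."""
    return SOURCE


def run_batch(discover: Callable[[], Iterable[Record]]) -> int:
    """Batch: the trigger carries no data; the process discovers its input."""
    return sum(1 for _ in discover())


def handle_event(payload: Record) -> int:
    """Event: the trigger carries the record; only that record is processed."""
    _ = payload.value  # placeholder for real per-record work
    return 1


def handle_event_mixed(
    payload: Record, discover: Callable[[], Iterable[Record]]
) -> int:
    """Mixed (anti-pattern): every event triggers a full bulk read."""
    return sum(1 for _ in discover())  # the payload is effectively ignored


# Assumed workload: one scheduled batch run versus 100 incoming events.
events = SOURCE[:100]
batch_touched = run_batch(discover_all)
event_touched = sum(handle_event(e) for e in events)
mixed_touched = sum(handle_event_mixed(e, discover_all) for e in events)

print(batch_touched, event_touched, mixed_touched)
```

Under these assumptions, one batch run touches 10,000 records, 100 well-behaved events touch 100 records, and 100 mixed events touch 1,000,000. The overhead of the mixed pattern grows with both the event rate and the size of the source.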

Technical Debt

Technical debt is like a shortcut that can lead to future problems. In software development, it refers to areas of code that need improvement but are neglected due to time or budget constraints.

Technical debt is sometimes unavoidable. The key is to plan for, be aware of and document it. Time constraints, budget limitations and dependencies on upstream or downstream components can all contribute to the introduction of technical debt to your FME solution.

Fortunately, the way FME solutions are developed makes it easy to document and communicate technical debt effectively. Features such as bookmarks, annotations and workspace descriptions provide a clear framework for outlining areas of improvement and how to address them.

However, documentation alone is not enough. It is equally important to have a plan in place to tackle technical debt once constraints are removed. Maintaining a technical debt log that is periodically reviewed and assessed helps ensure improvements are prioritised and progress is made over time.
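As a rough illustration of what such a log might look like outside of FME, here is a minimal Python sketch. The `DebtItem` fields, the 1 to 5 impact scale, the 90-day review interval and the workspace names are all assumptions made for the example, not an FME feature or a prescribed format.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class DebtItem:
    workspace: str       # workspace the debt lives in (hypothetical names)
    description: str     # what was deferred and why
    impact: int          # 1 (low) to 5 (high); an assumed scale
    last_reviewed: date  # when the item was last assessed


def due_for_review(
    log: List[DebtItem], today: date, max_age_days: int = 90
) -> List[DebtItem]:
    """Return items not reviewed within max_age_days, highest impact first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [item for item in log if item.last_reviewed < cutoff]
    return sorted(stale, key=lambda item: item.impact, reverse=True)


log = [
    DebtItem("cadastral_weekly_load.fmw", "Hard-coded schema mapping", 2, date(2025, 1, 10)),
    DebtItem("asset_daily_report.fmw", "No retry on API failure", 5, date(2024, 12, 1)),
    DebtItem("sensor_ingest.fmw", "Missing input validation", 4, date(2025, 5, 15)),
]

for item in due_for_review(log, today=date(2025, 6, 1)):
    print(item.workspace, item.impact)
```

With this sample data, the review list contains the two items last assessed more than 90 days ago, with the higher-impact item first. The recently reviewed item is left out until its next review falls due.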

Oversight, Governance and Standardisation

Oversight and governance are often overlooked in projects, yet they are essential for standardisation to succeed. Both the business and project teams must actively implement and embrace these practices.

There is no one-size-fits-all approach to implementing structures around FME within organisations. The design of these structures depends on how FME is used, its role within the business, who has access to it, and the expected outcomes from the broader system.

Standardisation plays a key role in ensuring consistency and efficiency. Establishing conventions for workspace naming, web connections, database connections, custom transformers and workspace parameters helps maintain clarity and order in FME processes across the organisation.

Governance and oversight extend beyond standardisation. They include monitoring what processes are running, when and where they are executed, and who is accessing and using FME Form and FME Flow. This monitoring enables regular reviews, ensuring that:

- Systems are running reliably and efficiently

- Technical debt is identified and managed

- Obsolete processes are retired

- Users have appropriate access to the tools and resources needed to fulfil their roles effectively

Ignoring Scalability and Performance

While scalability and performance could be considered part of technical debt, they warrant special attention. Designing with the future state of your data, processes, and system in mind is critical. Anticipating natural growth and accounting for it during the initial design can save significant time and resources later, reducing technical debt.

Testing for performance in development and staging environments is an important step, but it’s not enough. If you don’t design with current and future data volumes in mind, performance bottlenecks are likely to emerge as data grows.

Consider, for example, a process that runs for five minutes every hour. It might not scale effectively as data volumes and business requirements increase. As the runtime extends, this can have ripple effects on dependent processes as well as the broader system. Understanding these potential impacts up front is essential to ensuring longevity and reliability.
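A back-of-the-envelope projection makes the risk concrete. The Python sketch below assumes that runtime scales linearly with data volume and that volume compounds at a fixed monthly rate. Both are simplifications, and the 10% growth figure is purely illustrative. The function name and parameters are hypothetical.

```python
def months_until_exceeded(
    runtime_min: float,
    limit_min: float,
    monthly_growth: float,
    max_months: int = 600,
) -> int:
    """Return the first month in which projected runtime exceeds limit_min.

    Assumes runtime grows in step with data volume, which compounds at
    monthly_growth (e.g. 0.10 for 10%) per month.
    """
    if monthly_growth <= 0:
        raise ValueError("monthly_growth must be positive")
    months = 0
    projected = runtime_min
    while projected <= limit_min:
        if months >= max_months:
            raise ValueError("limit not exceeded within max_months")
        months += 1
        projected = runtime_min * (1 + monthly_growth) ** months
    return months


# A five-minute job on an hourly schedule, with an assumed 10% monthly growth.
print(months_until_exceeded(5, 60, 0.10))  # month the job overruns its hourly slot
print(months_until_exceeded(5, 30, 0.10))  # month the job fills half the slot
```

With these assumed figures, the job passes the 30-minute mark in month 19 and overruns its hourly window in month 27. Real workloads rarely scale this neatly, so a projection like this is best treated as a prompt for measurement rather than a forecast.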

It is also important to remember that FME often operates as part of a larger system. Ensure that external components – like databases – are optimised as well. Are indexes updated? Are temporary drives cleared? Is data stored appropriately?

A well-maintained system outside of FME is as critical as well-designed workspaces within it.