How to build computer vision workflows with Picterra + FME

The term “Computer Vision”, or CV, has long left the pages of scientific papers and the presentation slides of specialized conferences. It is used more and more in everyday life – from cars to smartphones.

Here at Safe Software, we couldn’t overlook this exciting technology. Our first experiments with CV started as a fun project during Safe’s Innovation Daze in 2018 and grew into a set of FME transformers that use OpenCV to train CV models and detect objects in photos.

We demonstrated the technology during the FME World Tour 2019, showing how FME can detect road signs and fire hydrants, but most of our customers wanted to know whether computer vision could be used with geospatial imagery. This makes perfect sense: many organizations deal with large volumes of aerial, satellite, and drone images, and often spend hundreds of hours going through orthos, looking for changes, identifying new objects, tracking lost entities, and updating their datasets with this new information.

After more experiments, we found that FME works best where it has always been strong: preparing data for CV tools and transforming the results into usable formats and products. The detection itself is where our featured guest, the Swiss company Picterra, adds real value to workflows that extract information from imagery.

What is Picterra?

What is Picterra, and how can it help our customers? I posed this question to Monika Ambrozowicz, Product Marketing Manager, and here is what she told me:

“Picterra is a cloud-based geospatial platform that enables users to identify objects and patterns (road cracks, damaged roofs, solar panels, animals, etc.) at scale, anywhere on Earth. What makes Picterra unique is that our users build and deploy unique actionable and ready-to-use deep-learning models based on their own data. The platform is also not limited to specific use cases. In fact, it’s safe to say that if you can see something in your imagery, you can detect it with Picterra. And all of that is made without a single line of code and with only a few human-made annotations, in a matter of minutes.”

How FME and Picterra work together

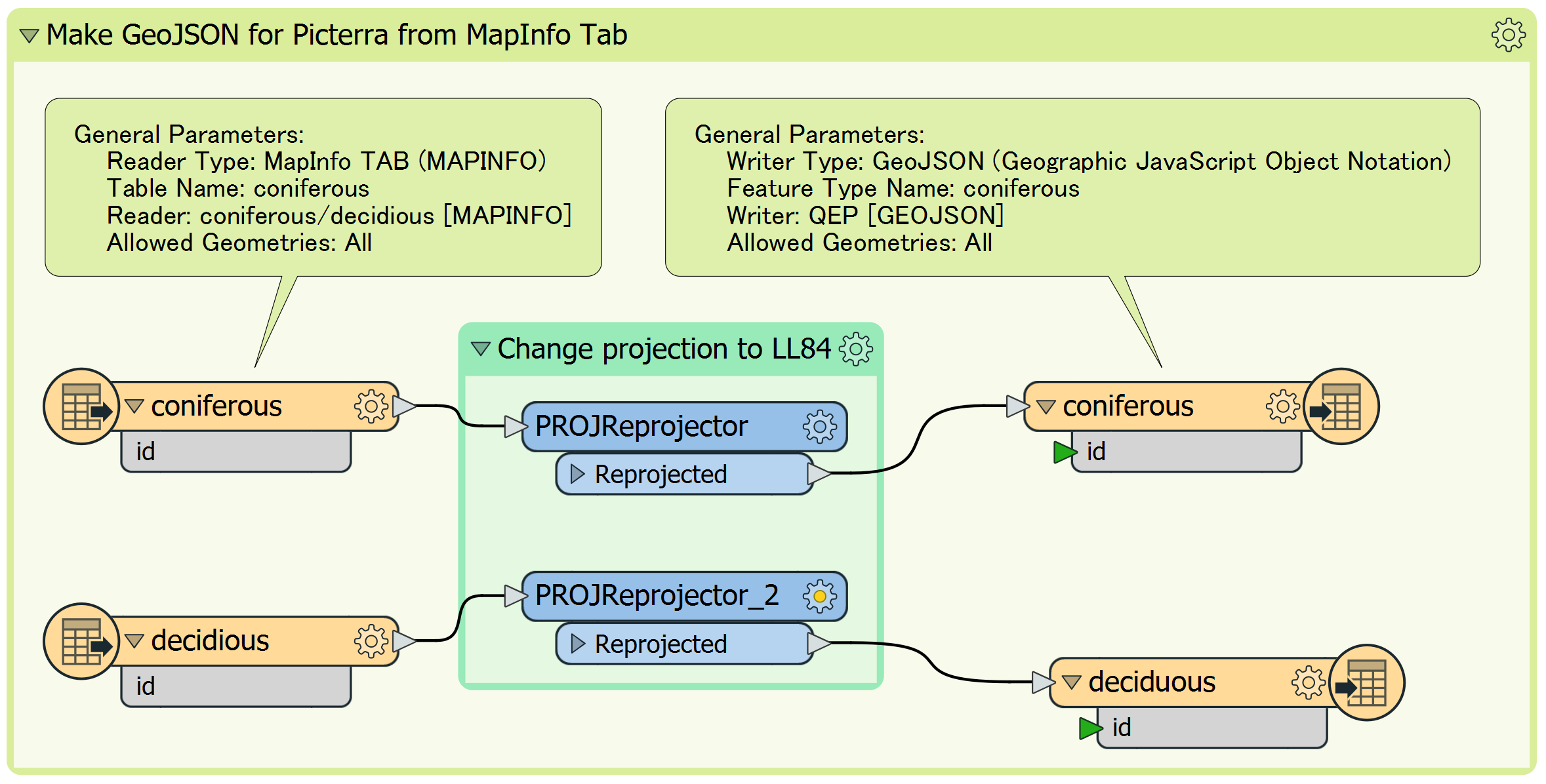

You can use FME to read and write almost every geospatial vector format, from the extremely popular shapefile to national standards to legacy data. FME can also convert data from any of these formats to JSON and upload it to Picterra as a training dataset.
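To make the JSON step concrete, here is a minimal Python sketch of wrapping annotation polygons in a GeoJSON FeatureCollection, roughly the kind of output an FME workflow would produce before the upload. It assumes the outlines are already in EPSG:4326 as (longitude, latitude) pairs. The function name is hypothetical, and the exact structure Picterra expects for training annotations is an assumption to check against its API documentation.

```python
import json


def polygons_to_feature_collection(polygons):
    """Wrap polygon outlines in a GeoJSON FeatureCollection (hypothetical helper).

    Each outline is a list of (lon, lat) tuples. GeoJSON linear rings must be
    closed, so the first vertex is repeated at the end if it is not already.
    """
    features = []
    for ring in polygons:
        if len(ring) < 3:
            raise ValueError("a polygon outline needs at least three vertices")
        coords = [list(pt) for pt in ring]
        if coords[0] != coords[-1]:
            coords.append(list(coords[0]))  # close the ring
        features.append({
            "type": "Feature",
            "properties": {},
            "geometry": {"type": "Polygon", "coordinates": [coords]},
        })
    return {"type": "FeatureCollection", "features": features}


if __name__ == "__main__":
    # Made-up crack outline, for illustration only.
    crack = [(-123.1800, 49.1950), (-123.1799, 49.1950), (-123.1799, 49.1951)]
    print(json.dumps(polygons_to_feature_collection([crack]), indent=2))
```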

The results generated during detection are JSON as well, and with FME, you can easily and flexibly integrate this data into existing datasets – again, in any format. Moreover, the data can be enriched with additional attributes, geometrically validated, and analyzed against the existing layers.

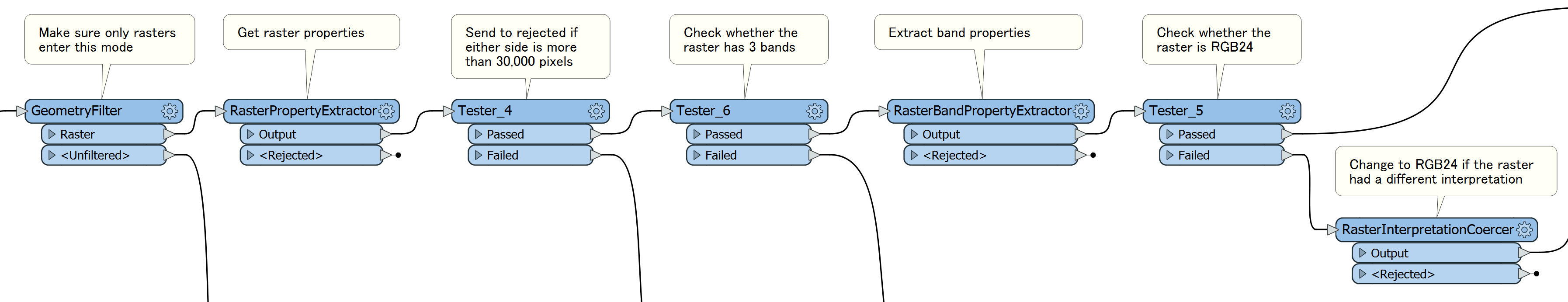

Transforming rasters is another area where FME is really strong, from changing raster interpretation (for example, RGBA64 to RGB24) to assembling and rearranging bands, from resolving palettes to per-pixel manipulations, and from simple tiling to clipping with vector polygons. After pre-processing, your rasters are uploaded to Picterra in an optimal representation, which speeds up processing and gets the most value out of each pixel.
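As an illustration of one such per-pixel change, the Python sketch below converts RGBA64 pixels (four 16-bit bands) to RGB24 (three 8-bit bands) by dropping alpha and rescaling each channel from 0..65535 to 0..255. In an FME workspace a raster transformer does this instead of code; the rounding rule here is one reasonable choice and may differ from what FME itself applies, and the function name is hypothetical.

```python
def rgba64_to_rgb24(pixels):
    """Convert (r, g, b, a) 16-bit pixels to (r, g, b) 8-bit pixels.

    The alpha band is discarded. Each colour channel is rescaled to 0..255
    by rounding to the nearest integer; since 65535 == 255 * 257 this equals
    round(c / 257), and exact ties cannot occur.
    """
    out = []
    for r, g, b, _alpha in pixels:
        rgb = []
        for c in (r, g, b):
            if not 0 <= c <= 65535:
                raise ValueError(f"channel value {c} is outside the 16-bit range")
            rgb.append((c * 255 + 32767) // 65535)
        out.append(tuple(rgb))
    return out


if __name__ == "__main__":
    print(rgba64_to_rgb24([(0, 65535, 32768, 1000)]))
```

A simpler alternative is to keep only the high byte (`c >> 8`), which truncates instead of rounding.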

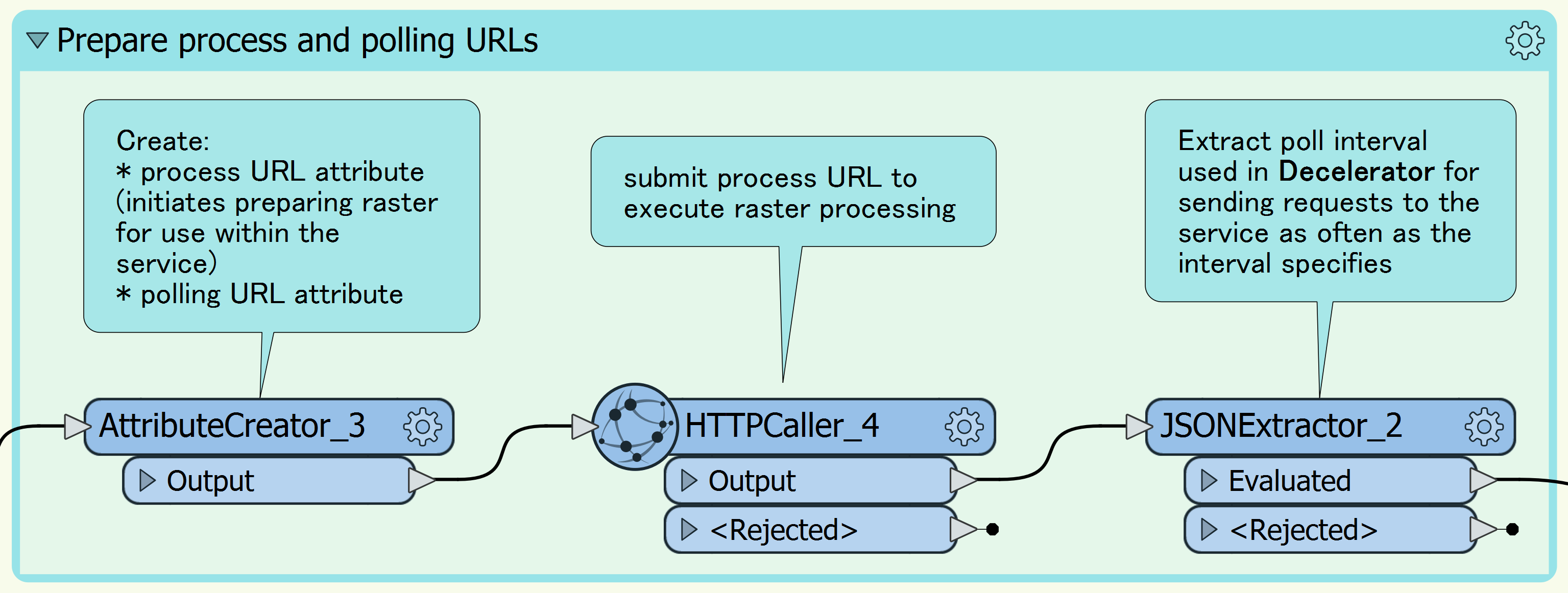

FME can also “talk” to the web via the HTTPCaller. This is how we connect to the Picterra API: FME uses the HTTPCaller to submit all kinds of data processing requests, from uploading rasters to downloading vectors.

I built a custom transformer, PicterraConnector, that performs these requests.

The usage pattern is the same for all operations: create a request URL with the AttributeCreator, send it to the Picterra service with the HTTPCaller, get JSON back, extract values into attributes with the JSONExtractor, and analyze the response with the Tester or TestFilter.
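The same four-step pattern can be mirrored outside FME in a few lines of Python, which may help when debugging a request. This is a sketch under stated assumptions: the base URL, the `X-Api-Key` header name, the `detectors/` endpoint, and the response shape (a JSON list of objects with `id` and `name`) are hypothetical placeholders, not confirmed details of the Picterra API.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical values: check Picterra's API documentation for the real ones.
BASE_URL = "https://example.invalid/public/api/v2/"
API_KEY_HEADER = "X-Api-Key"


def build_request(endpoint, api_key, params=None):
    """Step 1 (AttributeCreator): assemble the request URL and headers."""
    url = urllib.parse.urljoin(BASE_URL, endpoint)
    if params:
        url += "?" + urllib.parse.urlencode(params)
    return urllib.request.Request(url, headers={API_KEY_HEADER: api_key}, method="GET")


def send(request, timeout=30):
    """Step 2 (HTTPCaller): send the request and return the response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def extract(body, keys):
    """Step 3 (JSONExtractor): pull selected values from each item of a JSON list."""
    return [{k: item.get(k) for k in keys} for item in json.loads(body)]


def passes(record, key, expected):
    """Step 4 (Tester): keep only records whose attribute matches."""
    return record.get(key) == expected


if __name__ == "__main__":
    req = build_request("detectors/", "YOUR_API_KEY")
    print(req.full_url)
    # send(req) is not called here; a made-up body stands in for the response.
    body = '[{"id": "d1", "name": "Pavement cracks"}, {"id": "d2", "name": "Buildings"}]'
    for rec in extract(body, ["id", "name"]):
        if passes(rec, "name", "Pavement cracks"):
            print("selected detector", rec["id"])
```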

Because it’s a custom transformer, it is easy to see inside the PicterraConnector (right-click on it, and select “Edit…”) and adjust the transformer to a particular project as necessary.

How to begin working with Picterra within FME

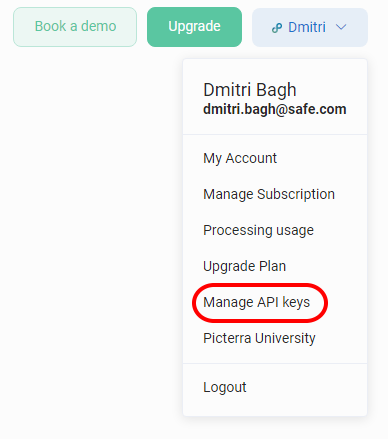

1. Sign up for a Picterra account and get an API key, which is a mandatory parameter for the PicterraConnector.

The trial account has some limitations, but it allows you to fully explore the detection capabilities of the platform. Training has to be done through Picterra’s web interface; alternatively, you can try the public detectors with your own data.

2. Add Readers to bring some images into your FME Workspace, use raster transformers to make them RGB24 rasters, and reproject them to EPSG:4326 (LL84). For best results, make sure none of your rasters is larger than 30,000 by 30,000 pixels. Larger rasters can still be processed, but they will be resampled, so it makes sense to stay in control and transform them properly ahead of time.

3. Download the PicterraConnector from FME Hub by starting to type its name on the FME Workbench canvas, and add it to your workspace. In the transformer parameters, select what you want to do: upload an image, list available rasters and detectors, or run a detection. Make sure you set the API key.

4. Depending on the operation, this transformer returns a list of available detectors or rasters, a success or failure message, or the vector geometries of the detected features. After this transformer, you can keep doing all the traditional FME things, from transforming the data in every possible way to saving it in the format of your choice.
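One way to stay in control of the 30,000-pixel limit from step 2 is to cut large rasters into a grid of tiles before uploading. The Python sketch below computes such a grid; in an FME workspace, the RasterTiler would do this job. It assumes the limit applies to each side independently, and the function name is hypothetical.

```python
import math

MAX_SIDE = 30_000  # per-side pixel limit quoted in step 2


def tile_grid(width, height, max_side=MAX_SIDE):
    """Split a width x height raster into near-equal tiles no larger than max_side.

    Returns (cols, rows, tiles), where each tile is (x_offset, y_offset, w, h)
    in pixels.
    """
    cols = math.ceil(width / max_side)
    rows = math.ceil(height / max_side)
    tile_w = math.ceil(width / cols)
    tile_h = math.ceil(height / rows)
    tiles = []
    for r in range(rows):
        for c in range(cols):
            x0, y0 = c * tile_w, r * tile_h
            w = min(tile_w, width - x0)   # the last column may be narrower
            h = min(tile_h, height - y0)  # the last row may be shorter
            if w > 0 and h > 0:
                tiles.append((x0, y0, w, h))
    return cols, rows, tiles


if __name__ == "__main__":
    cols, rows, tiles = tile_grid(159_350, 89_500)
    print(cols, rows, len(tiles))
```

For an image of 159,350 x 89,500 pixels, `tile_grid` returns a 6 x 3 grid of 18 tiles, each at most 26,559 x 29,834 pixels. Splitting into near-equal tiles, rather than 30,000-pixel tiles plus a thin remainder, avoids tiny slivers at the edges.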

Example: finding pavement cracks

To see how Picterra and FME really work together, I decided to reproduce the road cracks project, a scenario that many of our customers are likely to find very useful.

1. Prepare the data

For ordinary streets, the resolution of the imagery should be really high, a centimeter or so. I found an area in the Lower Mainland of British Columbia with much bigger cracks, where 7.5 cm imagery worked quite well: YVR, Vancouver International Airport. The ECW image was provided by Carlos Silva, an enthusiastic FME user and a good friend of Safe Software. I should stress that the runways at YVR are in perfect shape, and I wasn’t able to find any areas on them suitable for testing. All my test areas come from less-used taxiways and aprons.

The source image covers a large area around the airport, including not only YVR but also parts of Vancouver and Richmond. The total size of the image is 2.5 GB, 159,350 x 89,500 pixels – way too much for an upload and detection, considering our goal.

I visually selected a few areas that were covered with cracks, around which I drew rectangular areas of interest and clipped them from the original ECW. The interpretation of the ortho was RGB24, so I didn’t have to change that.
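Clipping a rectangular area of interest boils down to turning map coordinates into a pixel window. The Python sketch below assumes a north-up raster described by a GDAL-style affine geotransform `(origin_x, pixel_width, 0, origin_y, 0, -pixel_height)` and an AOI given in the raster’s own coordinate system; in FME, clipping transformers handle this (and arbitrary polygons) for you. The function name is hypothetical.

```python
import math


def aoi_to_window(geotransform, xmin, ymin, xmax, ymax, width, height):
    """Map an AOI bounding box to a pixel window (col_off, row_off, w, h).

    Assumes a north-up raster: rotation terms are zero and the pixel
    height (geotransform[5]) is negative. The window is clamped to the raster.
    """
    ox, px_w, rot1, oy, rot2, px_h = geotransform
    if rot1 != 0 or rot2 != 0 or px_w <= 0 or px_h >= 0:
        raise ValueError("only north-up rasters are supported by this sketch")
    col0 = math.floor((xmin - ox) / px_w)
    col1 = math.ceil((xmax - ox) / px_w)
    row0 = math.floor((ymax - oy) / px_h)  # top edge (ymax) gives the first row
    row1 = math.ceil((ymin - oy) / px_h)   # bottom edge (ymin) gives the last row
    col0, row0 = max(col0, 0), max(row0, 0)
    col1, row1 = min(col1, width), min(row1, height)
    if col1 <= col0 or row1 <= row0:
        raise ValueError("AOI does not overlap the raster")
    return col0, row0, col1 - col0, row1 - row0


if __name__ == "__main__":
    gt = (1000.0, 0.25, 0.0, 2000.0, 0.0, -0.25)
    print(aoi_to_window(gt, 1010, 1980, 1020, 1990, 1000, 1000))
```

With the geotransform `(1000.0, 0.25, 0.0, 2000.0, 0.0, -0.25)`, the AOI from (1010, 1980) to (1020, 1990) maps to the window `(40, 40, 40, 40)`: column offset, row offset, width, and height in pixels.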

2. Train the detector

Using the PicterraConnector, I uploaded the clipped rasters to the Picterra platform, where I carefully drew a few polygons outlining the cracks.

I also marked a few areas with no cracks (negatives) and outlined more cracks for measuring accuracy. The object outlines can also be created in any CAD or GIS package and uploaded to the service as JSON; I tried this approach with a few other projects. Then I ran the training, and my detector was ready for use with FME. For more information about training detectors, refer to the Picterra website.

3. Run the detection

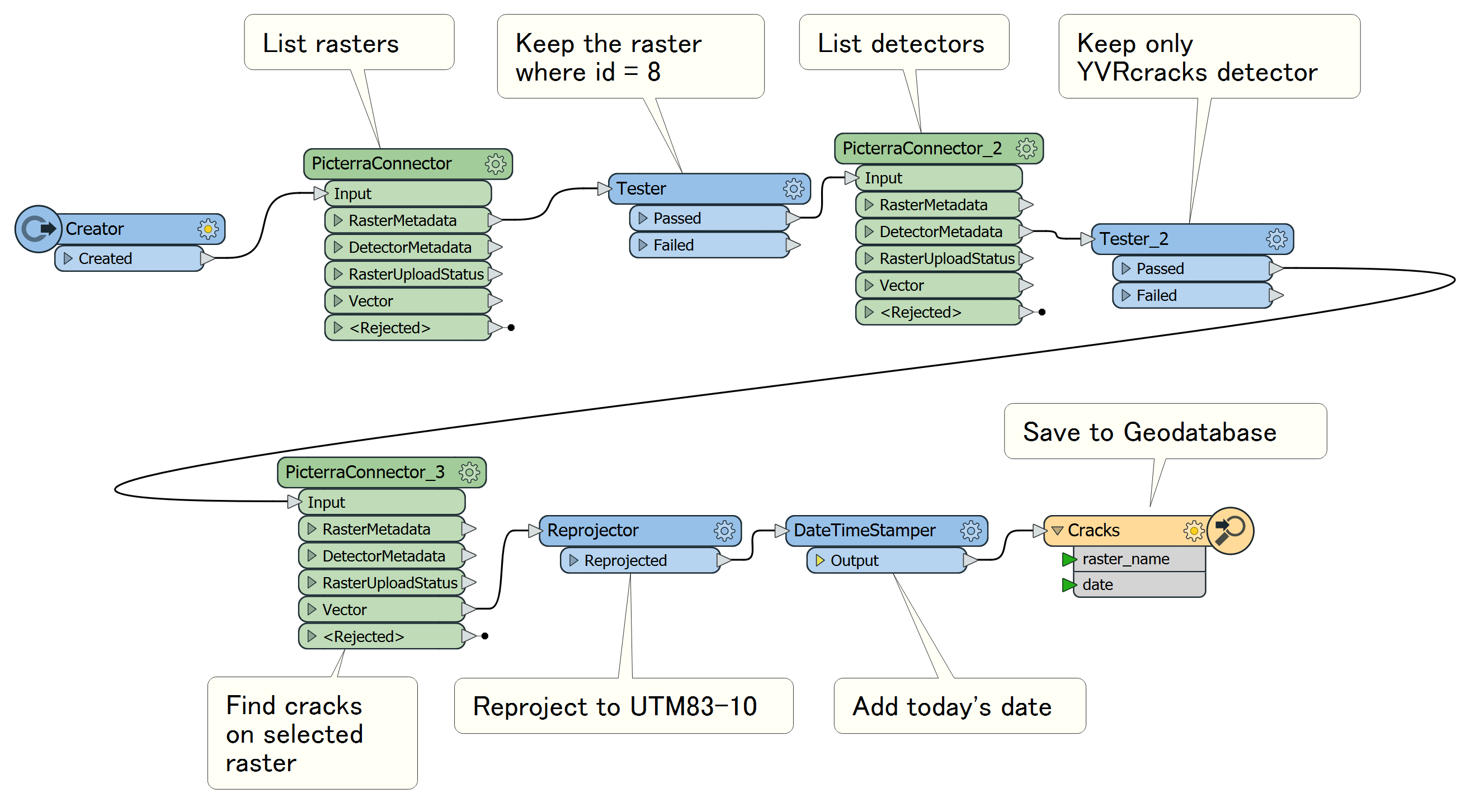

Back to FME. With the detector trained, I had everything needed for processing data: I could upload more rasters, and run the detection. A simple workflow for this includes three PicterraConnector transformers.

The first two list all the available detectors and rasters, and with Testers I select the detector and the raster (or several rasters) I want to use for this scenario. The last PicterraConnector executes the detection, and after a while, returns vector geometries of the objects found.
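The Tester-based selection can be sketched as plain filtering over the two lists, followed by pairing the chosen detector with each chosen raster. The record shape (`id`, `name`), the job structure, and all names here are hypothetical; the real PicterraConnector output attributes may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionJob:
    """One detector/raster pair to submit for detection (hypothetical structure)."""
    detector_id: str
    raster_id: str


def pick_one(records, name):
    """Like a Tester on the detector list: exactly one record must match."""
    matches = [r for r in records if r["name"] == name]
    if len(matches) != 1:
        raise ValueError(f"expected one record named {name!r}, found {len(matches)}")
    return matches[0]


def pick_many(records, names):
    """Like a Tester that lets several raster names through."""
    wanted = set(names)
    return [r for r in records if r["name"] in wanted]


def plan_jobs(detectors, rasters, detector_name, raster_names):
    """Pair the selected detector with every selected raster."""
    detector = pick_one(detectors, detector_name)
    return [DetectionJob(detector["id"], r["id"]) for r in pick_many(rasters, raster_names)]


if __name__ == "__main__":
    detectors = [{"id": "d1", "name": "Pavement cracks"}, {"id": "d2", "name": "Buildings"}]
    rasters = [{"id": "r1", "name": "taxiway_a"}, {"id": "r2", "name": "apron_b"}]
    print(plan_jobs(detectors, rasters, "Pavement cracks", ["taxiway_a", "apron_b"]))
```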

Picterra sends back the geometries in GeoJSON format, but once the features leave the transformer, their format does not matter anymore – it can be anything a user may want.

4. Report the results

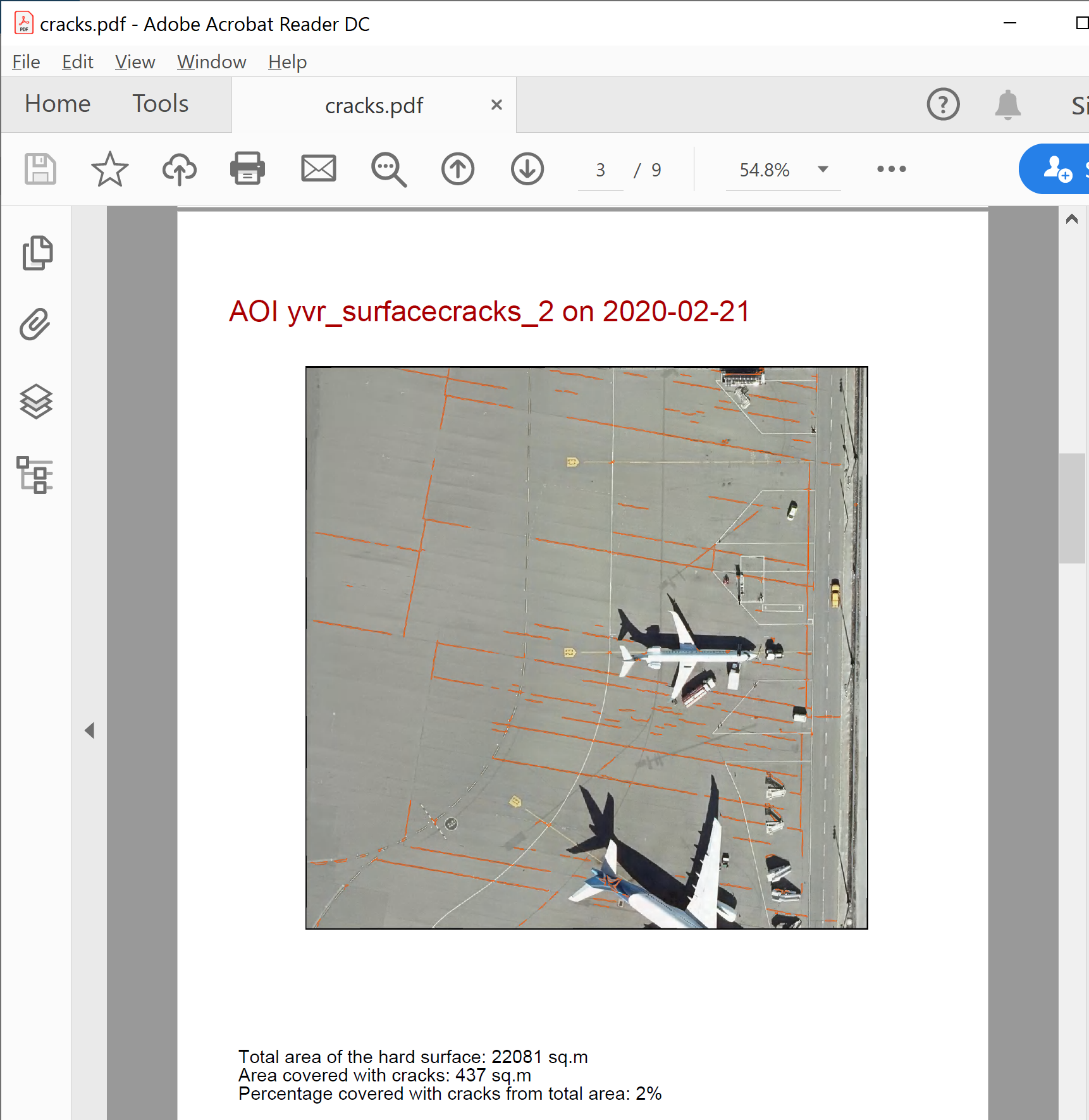

Strictly speaking, report generation in FME is outside the scope of a blog about computer vision; however, the detection only makes sense as part of a broader workflow. For example, we may want to know how much of the overall pavement area is affected by cracks and, based on that, decide whether to send a crew to fix the problem area.

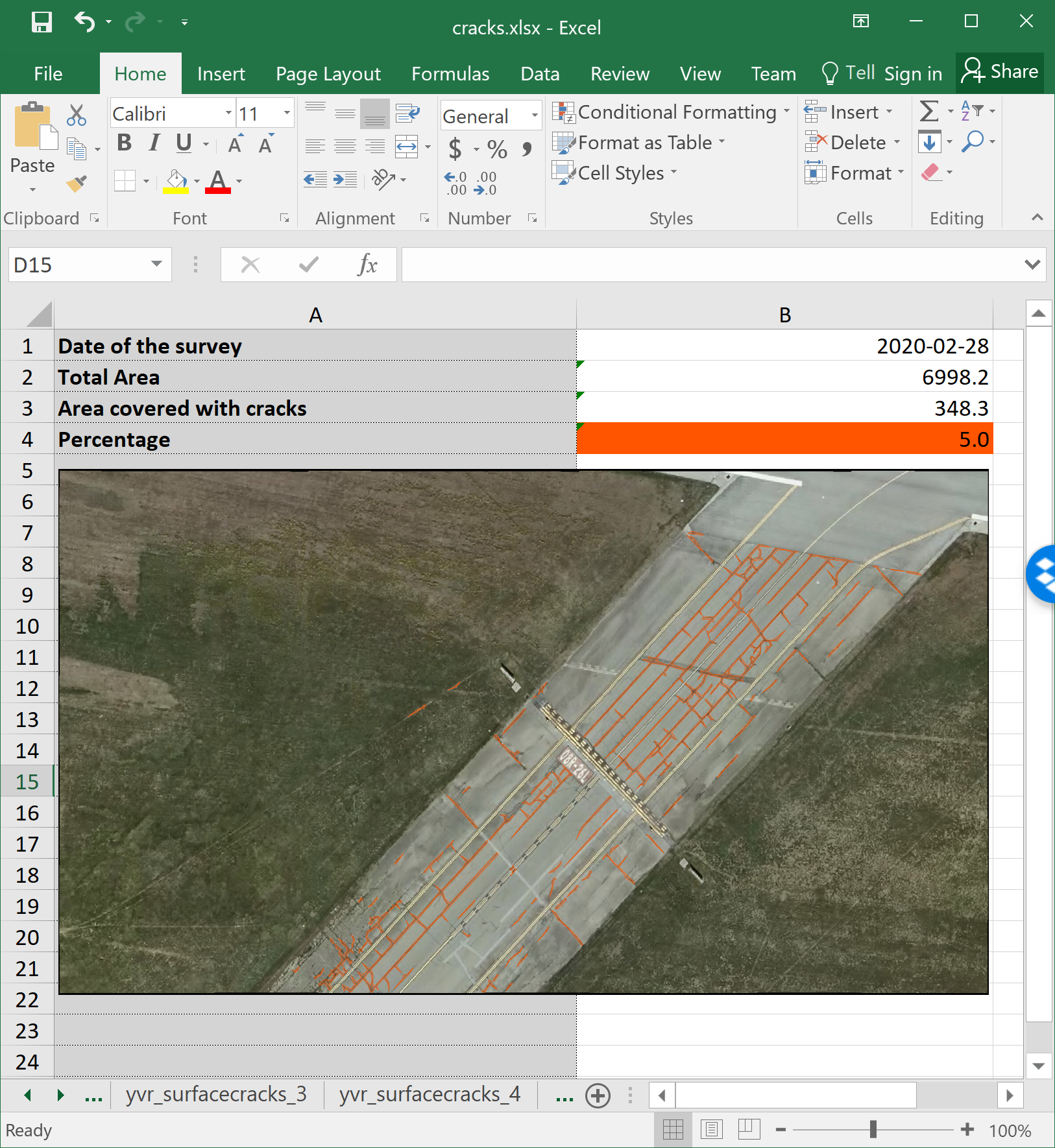

For this purpose, I added one more dataset to the workflow: the actual pavement layer (not included in the download package; without it, the demo workspace works with the entire area of the rasters). I measured its area, measured the area of the cracks, and calculated the percentage of the pavement the cracks occupy. With all the data in hand, I only had to create useful and nice-looking reports.
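The percentage itself is simple arithmetic once both areas are known. The Python sketch below applies the shoelace formula to polygon rings. It assumes the coordinates have first been reprojected to a projected CRS in metres (areas computed directly on EPSG:4326 degrees would be meaningless), and that the crack polygons do not overlap one another and lie within the pavement. In FME, the AreaCalculator and clipping transformers would cover these steps; the function names here are hypothetical.

```python
def ring_area(ring):
    """Unsigned area of a ring of (x, y) vertices via the shoelace formula.

    Works whether or not the ring repeats its first vertex at the end.
    """
    s = 0.0
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def polygon_area(rings):
    """Outer ring area minus the areas of any holes (rings[1:])."""
    return ring_area(rings[0]) - sum(ring_area(h) for h in rings[1:])


def crack_percentage(pavement_polygons, crack_polygons):
    """Share of the pavement area covered by cracks, in percent."""
    pavement = sum(polygon_area(p) for p in pavement_polygons)
    if pavement <= 0:
        raise ValueError("pavement area must be positive")
    cracks = sum(polygon_area(p) for p in crack_polygons)
    return 100.0 * cracks / pavement


if __name__ == "__main__":
    pavement = [[(0, 0), (100, 0), (100, 50), (0, 50)]]  # 5,000 m2
    crack = [[(0, 0), (10, 0), (10, 5), (0, 5)]]         # 50 m2
    print(crack_percentage([pavement], [crack]))
```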

A series of transformers allowed me to create two reports:

- MapnikRasterizer makes images of the areas I was working on with the crack overlay.

- AttributeExploder is perfect for transposing tables, that is, turning columns into rows.

- ExcelStyler with conditional formatting in the “Fill -> Background color” parameter controls the color of the percentage cells, which range from green for surfaces in good condition through yellow and orange to red as the share of cracked pavement grows.

- PDFStyler helps with styling the elements of the pages when the output goes to PDF.

- Finally, the CityworksConnector creates a work order in Cityworks that initiates the repair process.
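The color coding in the Excel report can be sketched as a simple threshold lookup, alongside an AttributeExploder-style transpose that turns one record’s columns into rows. The post does not state the actual percentage breaks, so the thresholds below are purely hypothetical placeholders, and the output field names are illustrative rather than the AttributeExploder’s own.

```python
# Hypothetical breaks: (upper bound in percent, colour). The real report's
# thresholds are not given in the text.
THRESHOLDS = [(5.0, "green"), (10.0, "yellow"), (20.0, "orange")]


def crack_colour(percentage):
    """Return the fill colour for a crack percentage; anything above the last break is red."""
    for upper, colour in THRESHOLDS:
        if percentage < upper:
            return colour
    return "red"


def explode(record):
    """Transpose one record: each attribute becomes its own row."""
    return [{"name": key, "value": value} for key, value in record.items()]


if __name__ == "__main__":
    for pct in (1.0, 7.5, 15.0, 25.0):
        print(pct, crack_colour(pct))
    print(explode({"apron_b": 3.4, "taxiway_a": 1.2}))
```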

The steps above cover the complete process from the source image through the detection to the final reports.

5. Automate the workflow

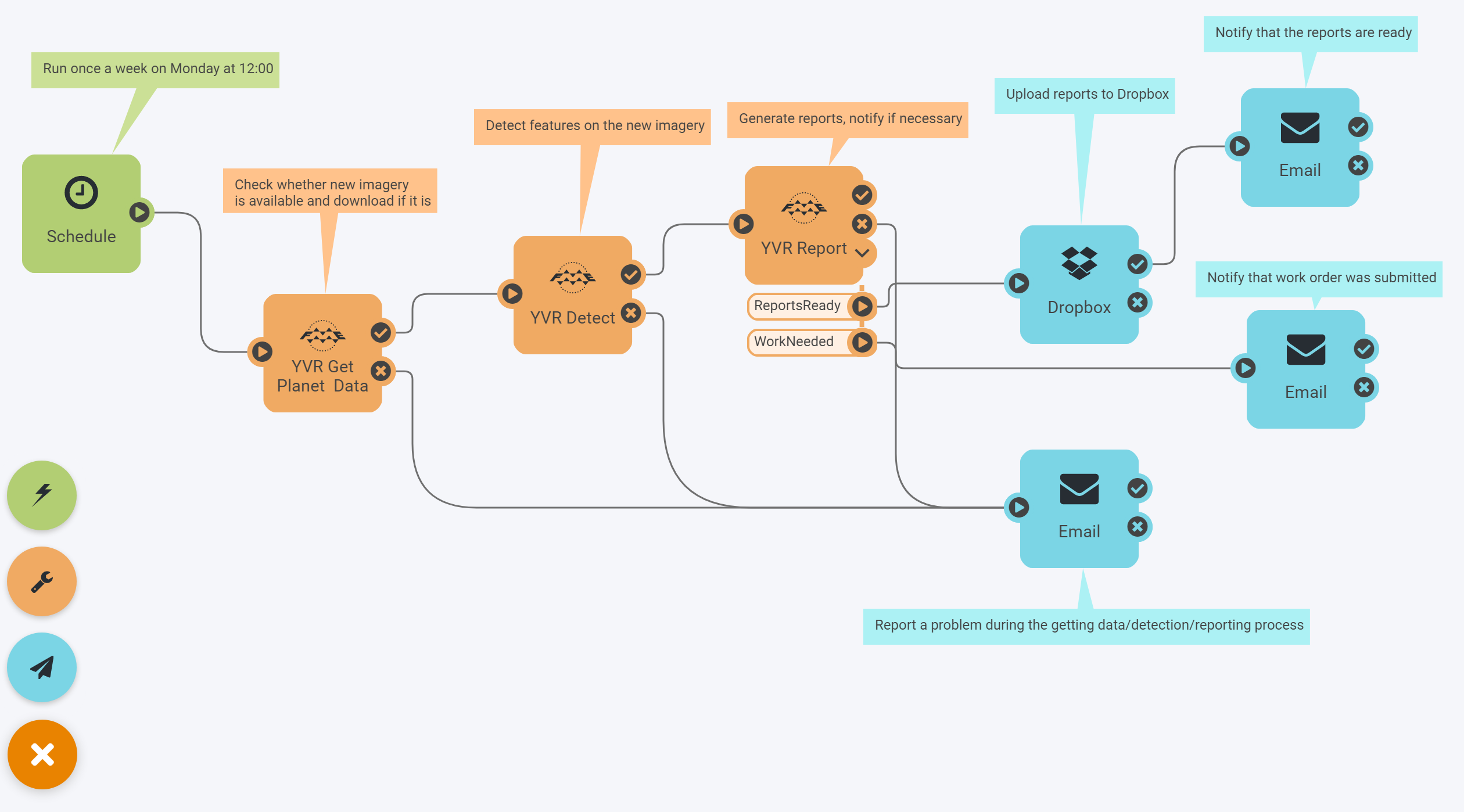

Of course, doing these steps once is useful, but the real value of FME shows through automation. Once the workflow is defined, it can be deployed to FME Server on-premises or in the cloud and fully automated. If new images arrive regularly, the process can run on a schedule. For example, Sentinel-2 and Planet imagery arrives frequently (from about weekly to daily worldwide coverage), so for these providers we can set up weekly or daily runs, process the data, upload the reports to Dropbox, and send email notifications:

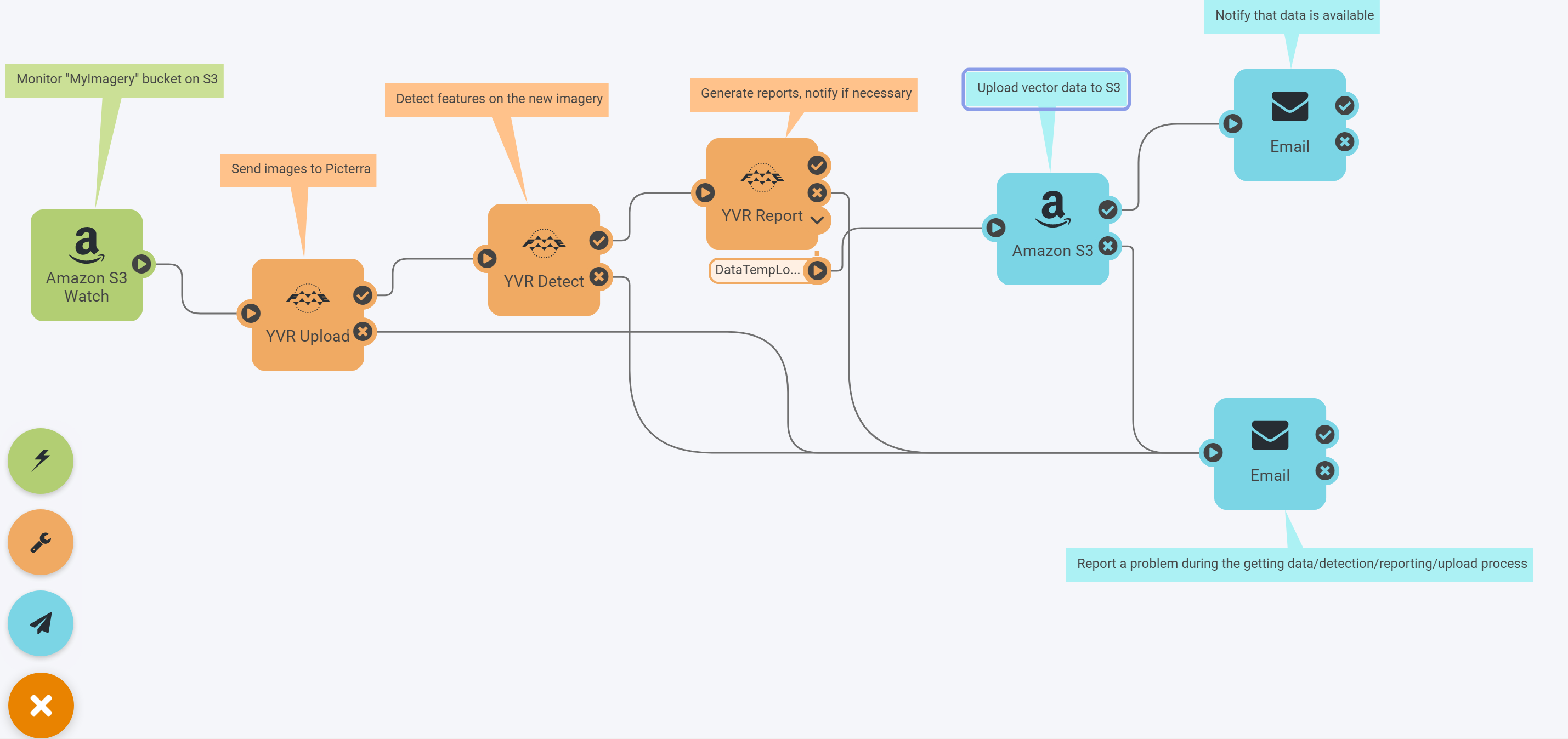

If new images arrive irregularly, we can set up the workflow to monitor a location on the web, for example, an Amazon S3 bucket. A file or files appearing in this bucket will trigger the transformation, and once it’s done, the vector results will go to S3 as well.

An expanded scenario could include, for example, the transformers from another Safe Software partner, Pix4D: drone imagery would be turned into an orthomosaic and written to an S3 bucket, where the automation above would pick it up and carry it through to the end of the process.

Saplings and seals – what else can be done in Picterra

Picterra is not limited to detecting road cracks or other elements of city infrastructure. The platform offers endless possibilities, and it’s safe to say that if you can see an object in drone, aerial, or satellite imagery, Picterra can detect it. That’s because Picterra’s deep learning architecture doesn’t need to change to adapt to different applications; it simply needs data to learn from.

For example, if you need to detect buildings, you first need to define what counts as a building. While there is no universal building detector on Picterra, the platform learns efficiently from training examples, so it can learn to detect various types of buildings, including informal settlements, slums, or refugee camps. It can also differentiate between a building and a construction site.

Picterra interface showing the results of detecting buildings and constructions.

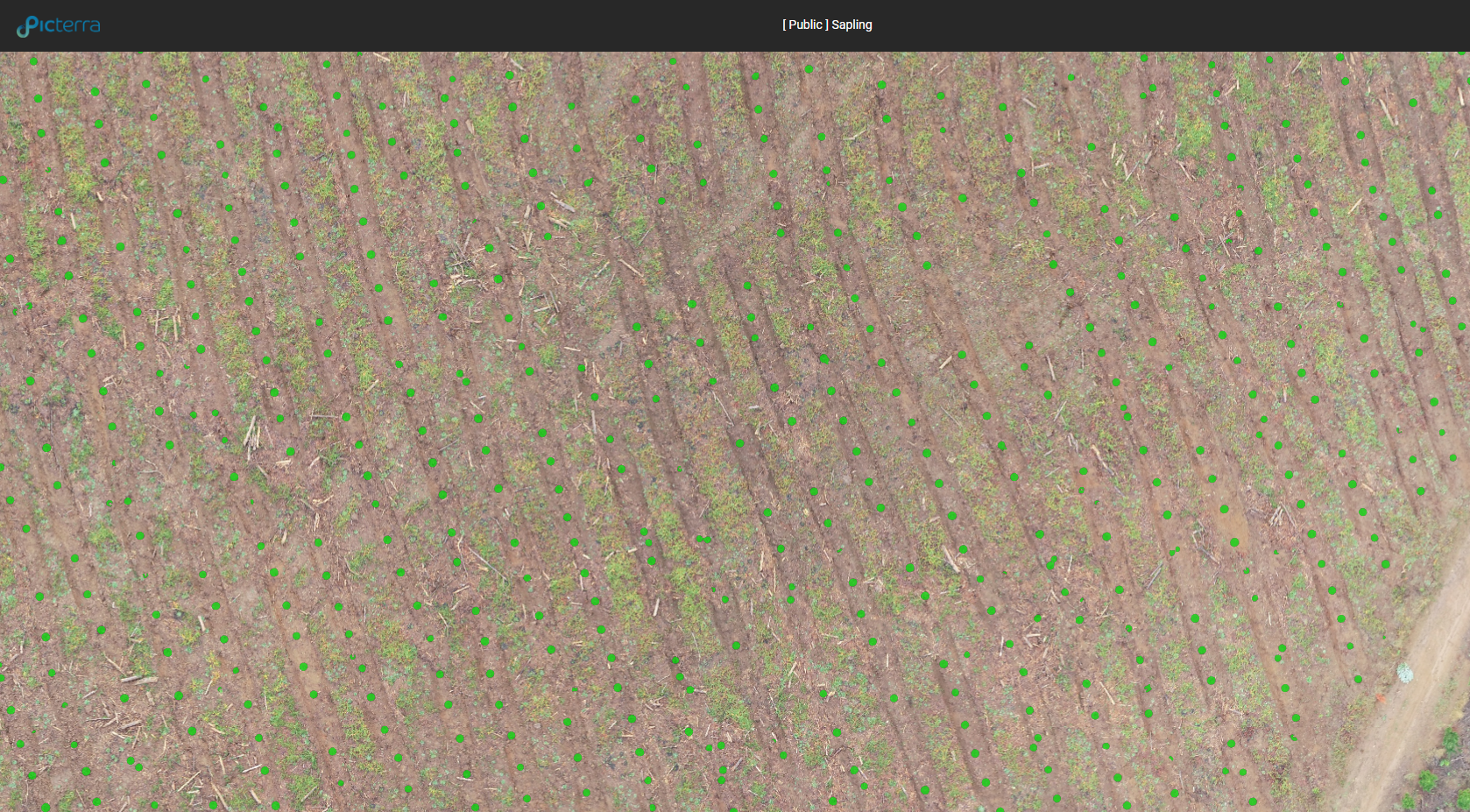

Picterra is also widely used in forestry management. A machine learning model can be trained to detect healthy trees as well as dead trees, fallen trees, and saplings.

Viewing detected saplings in Picterra.

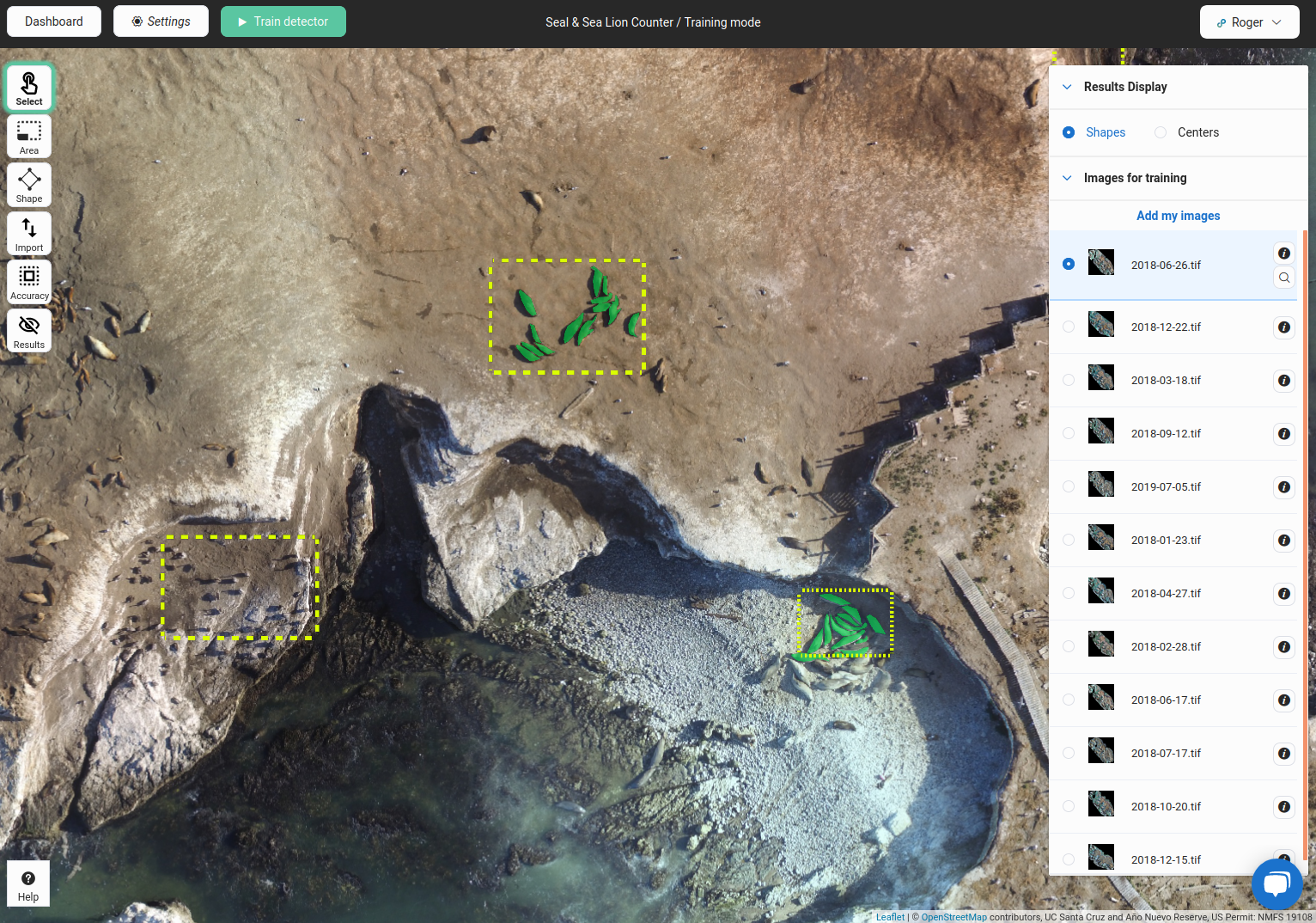

Another interesting application of Picterra is wildlife monitoring. Researchers from the University of California, Santa Cruz, have used the platform to analyze the population of seals and sea lions living on Año Nuevo Island, which is part of the Año Nuevo Reserve off the coast of California. With Picterra, they were able to achieve this goal in 8 hours, compared to 35 days (!) of manual work.

Viewing CV training areas and annotations of seals in Picterra.

Try it yourself

Connecting two powerful tools expands possibilities for both FME and Picterra users. Unprecedented format support, transformation capabilities, and automation, combined with the strength of AI in computer vision, give you a chance to quickly and reliably get up-to-date information from your imagery.

What would you like to find in your imagery today?