Preserving the history of Amache using AR & virtual worlds

The University of Denver Amache Project is dedicated to researching, interpreting, and preserving the site of Amache, a WWII internment camp for Japanese-American citizens. Jim Casey, a GIS Specialist from the University of Denver, had been working on this project for a few years when he approached Safe Software with a question: could he render the data he had collected in augmented reality?

Our answer, of course, was yes. And when we talked about how the FME AR app could be used for the project, I suggested trying a couple of additional visualization options: CesiumJS for sharing a 3D scene over the web, and Unreal Engine for making an immersive, game-like environment.

The source data included almost everything a typical GIS specialist works with: CAD and GIS layers, ortho imagery, 3D models, historic map scans, photos and videos. The output was the most interesting part: three very technologically different, but visually appealing, ways to present the traditional data. Below, I talk about the details of this project, go through the data and the workspaces involved, and show the resulting models and worlds.

Step 1: Source data from drones, CAD floor plans, and more

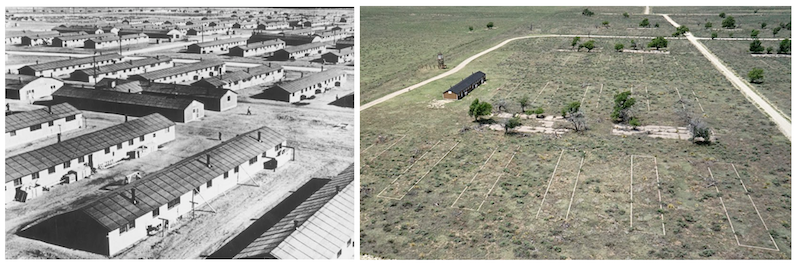

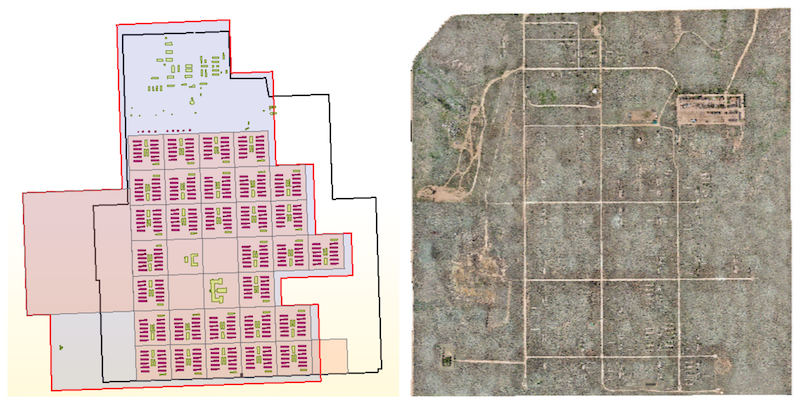

Today, the Amache site looks very different from how it looked 70 years ago: the old buildings are gone, and only their foundations remain in the ground. There is no barbed wire around the site. The roads, small trees, and dry grass make it feel empty and quiet. There are a few restored structures (a barrack, a water tower, and a guard tower) and an information kiosk.

Jim Casey sent me a lot of data related to the site, and my goal was to use FME to bring all the sources together and generate the site as it existed during WWII. Here are a few of the source datasets:

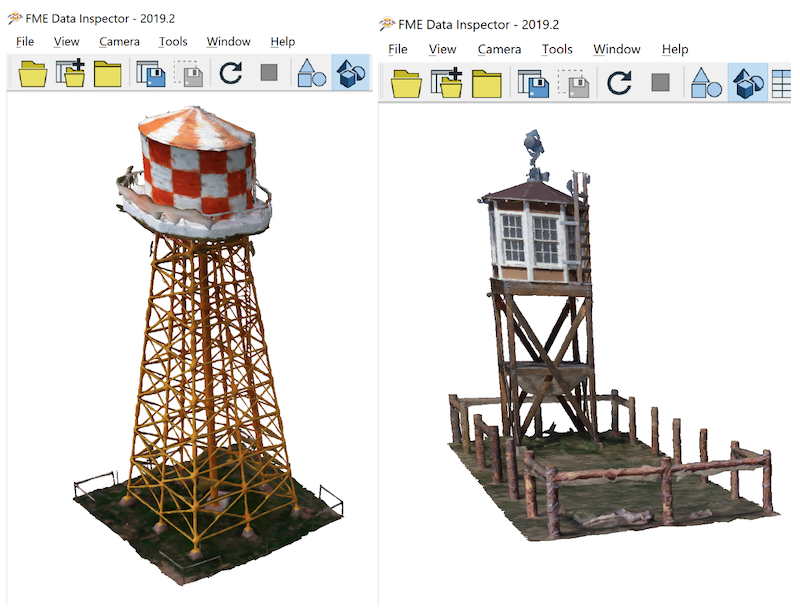

OBJ models generated from drone footage. Using Pix4D, which we’ll talk about below, Jim turned a few hours of drone footage of the restored structures into 3D .obj models.

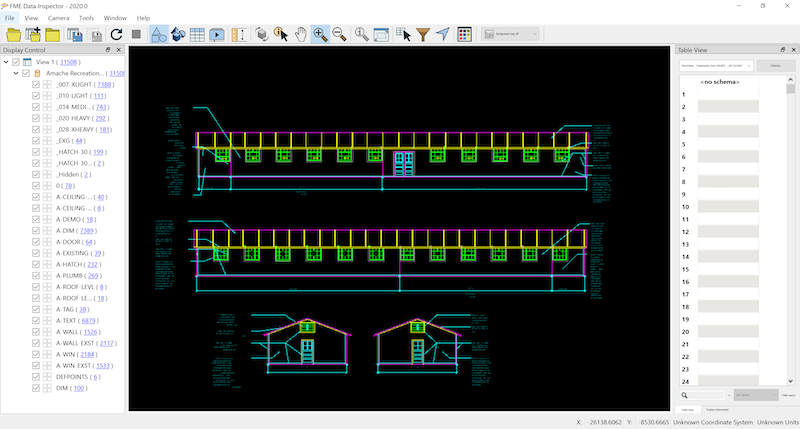

CAD floor plan. This drawing represents a recreation hall, a building similar but not identical to a barrack.

GIS data. This Geodatabase contained several layers: the barrack and other building footprints, apartment polygons with information about their inhabitants, and an orthophoto.

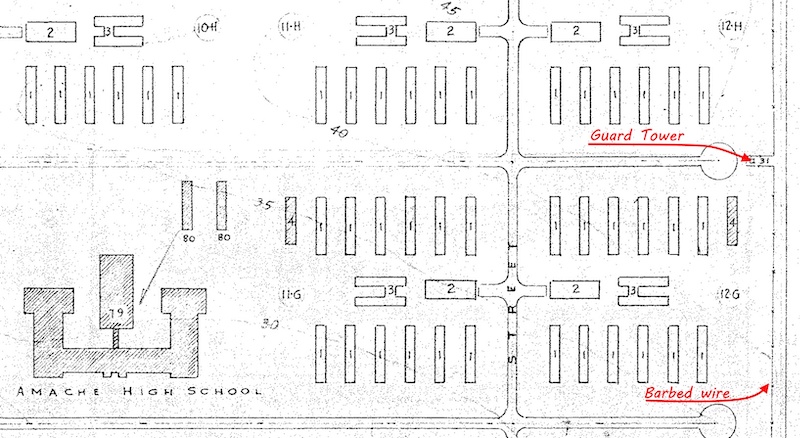

Historic scan. This is a simple JPEG image with no georeferencing. It contains information about the location of the barbed wire and guard towers.

To enrich the virtual world in a realistic way, I found a few models of old vehicles and other features from the WWII era.

Step 2: Data transformation processes

Pix4D to generate 3D models

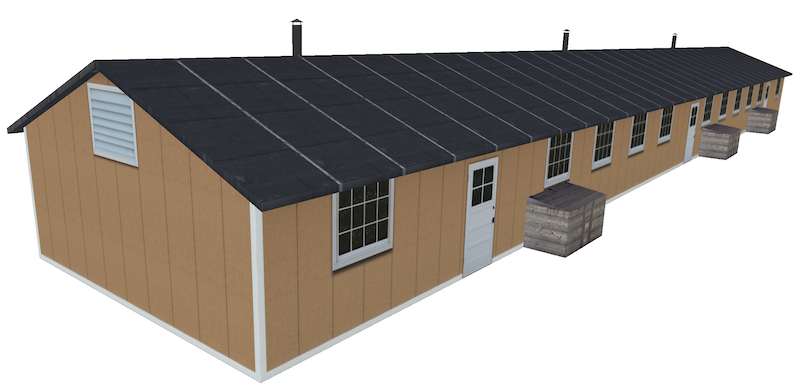

Jim supplied three models for the project, which he created with Pix4D, a professional photogrammetry software package for creating orthophotos, point clouds, and 3D models from drone footage. I created a simplified version of the barrack, partly for performance reasons, but also because the barracks are, in essence, simple boxes with roofs. The other two models would have required far more 3D modelling skill than I have, so I used them directly, after some mesh simplification in MeshLab.

This was the biggest step, and at the time it was done outside FME. Since then, we have built a suite of custom FME transformers, available through FME Hub, for connecting to Pix4D.

FME Workspaces

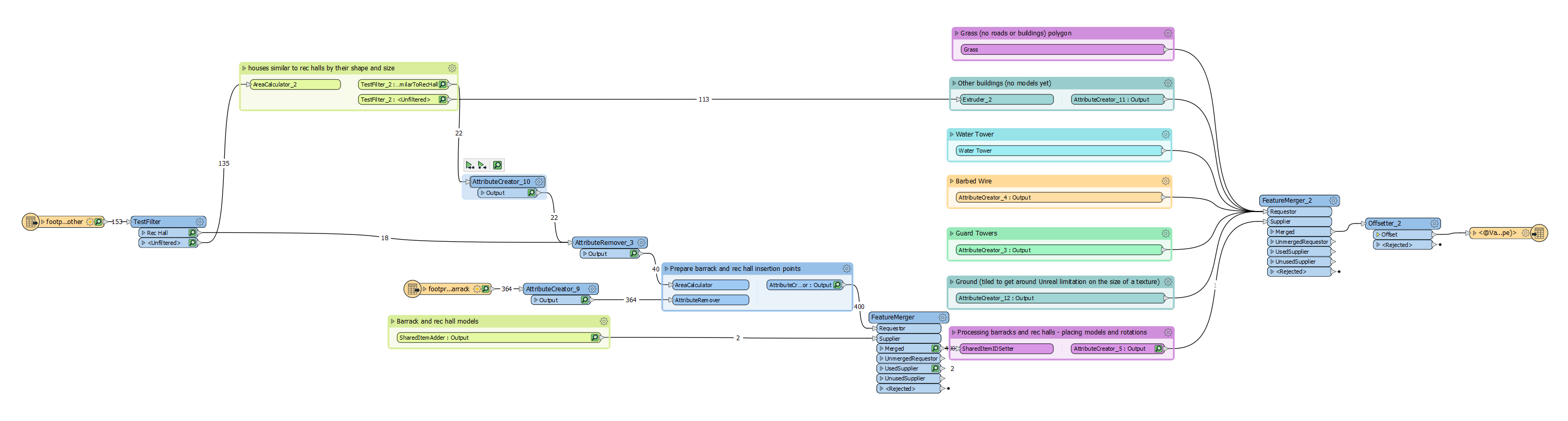

This is a relatively big project, and putting every part of it into a single workspace wouldn’t make the overall workflow efficient. Instead, I created several workspaces, each solving a small transformation task to allow the data to take the necessary shape gradually.

For example, I wanted to “sow” some grass over the ground in Unreal Engine. It can be done easily there, similar to using a bucket tool in Photoshop and other image editors; however, I didn’t have a polygon representing only grassed areas, so I created it from the ortho image with some simple Map Algebra analysis. As you saw in the ortho above, the roads are brighter than other parts of the landscape. If we set all the roads to nodata in the RasterExpressionEvaluator, transform the raster to polygons with the RasterToPolygonCoercer, and then clip out the building footprints from the polygons, we get an area for planting vegetation.
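To make the Map Algebra idea concrete, here is a minimal Python sketch, not the actual FME workspace. It assumes a grayscale ortho tile with 0-255 brightness values, a hypothetical `ROAD_THRESHOLD` of 200, and building footprints already rasterized to a boolean grid of the same size. For brevity it stays at the raster level instead of polygonizing with the RasterToPolygonCoercer and then clipping, so treat it as an illustration of the logic rather than a replacement for those transformers.

```python
from typing import List, Optional

# Hypothetical brightness cut-off: pixels brighter than this are treated as road.
ROAD_THRESHOLD = 200

Grid = List[List[int]]
Mask = List[List[bool]]


def mask_roads(ortho: Grid, threshold: int = ROAD_THRESHOLD) -> List[List[Optional[int]]]:
    """Map-algebra step: set bright (road) pixels to nodata, represented as None."""
    return [[None if v > threshold else v for v in row] for row in ortho]


def vegetation_mask(ortho: Grid, footprints: Mask, threshold: int = ROAD_THRESHOLD) -> Mask:
    """True where a pixel is neither road (nodata) nor inside a building footprint."""
    masked = mask_roads(ortho, threshold)
    return [
        [v is not None and not fp for v, fp in zip(row, fp_row)]
        for row, fp_row in zip(masked, footprints)
    ]


# Usage: a tiny 3x3 tile with a bright road running down the middle column
# and one building footprint in the top-right pixel.
ortho = [
    [120, 230, 110],
    [115, 240, 100],
    [130, 235, 90],
]
footprints = [
    [False, False, True],
    [False, False, False],
    [False, False, False],
]
for row in vegetation_mask(ortho, footprints):
    print("".join("G" if grass else "." for grass in row))
```

In the real workflow the resulting area is a polygon, which is what Unreal Engine needs for planting; the raster mask above only shows which pixels would survive the road and footprint filters.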

Creating the barrack and recreation hall models required a lot of transformers, but the workflow is just a few long chains of steps imitating the process of drawing: create a line, extrude it, insert a vertex in the middle of the top segment and raise it by 5 feet, offset the polygon by 120 feet (this is how we create the side walls), apply a texture to this side, to that side, to the roof, and so on.
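The drawing-style steps translate naturally into code. The following Python sketch builds the same kind of box-with-gable-roof shape: a base line is extruded into a wall, a ridge vertex is inserted in the middle of the top segment and raised by 5 feet, and the resulting gable end is offset by 120 feet to form the side walls and roof. The width and wall height are hypothetical values, and the texture names are placeholders; the real workspace applied actual textures with FME transformers.

```python
from typing import Dict, List, Tuple

Point3 = Tuple[float, float, float]


def gable_profile(width: float, wall_height: float, ridge_rise: float = 5.0) -> List[Point3]:
    """Gable end in the XZ plane: the base line extruded up to wall_height,
    plus a ridge vertex inserted mid-top and raised by ridge_rise."""
    return [
        (0.0, 0.0, 0.0),
        (width, 0.0, 0.0),
        (width, 0.0, wall_height),
        (width / 2, 0.0, wall_height + ridge_rise),  # the raised mid-top vertex
        (0.0, 0.0, wall_height),
    ]


def barrack_faces(width: float, wall_height: float, length: float = 120.0,
                  ridge_rise: float = 5.0) -> List[Dict]:
    """Offset the gable end along Y by `length` and connect the two ends."""
    front = gable_profile(width, wall_height, ridge_rise)
    back = [(x, y + length, z) for x, y, z in front]
    faces = [
        {"texture": "gable_end", "vertices": front},
        # Reversed so the two end faces wind in opposite directions.
        {"texture": "gable_end", "vertices": list(reversed(back))},
    ]
    n = len(front)
    for i in range(n):
        j = (i + 1) % n
        quad = [front[i], front[j], back[j], back[i]]
        if i == 0:
            texture = "floor"       # base edge
        elif i in (2, 3):
            texture = "roof"        # the two sloped edges meeting at the ridge
        else:
            texture = "side_wall"   # the two vertical edges
        faces.append({"texture": texture, "vertices": quad})
    return faces


# Usage with hypothetical dimensions (20 ft wide, 10 ft walls).
faces = barrack_faces(width=20.0, wall_height=10.0)
for face in faces:
    print(face["texture"], len(face["vertices"]))
```

Keeping each building to a handful of textured faces like this is what allows hundreds of them to stay lightweight.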

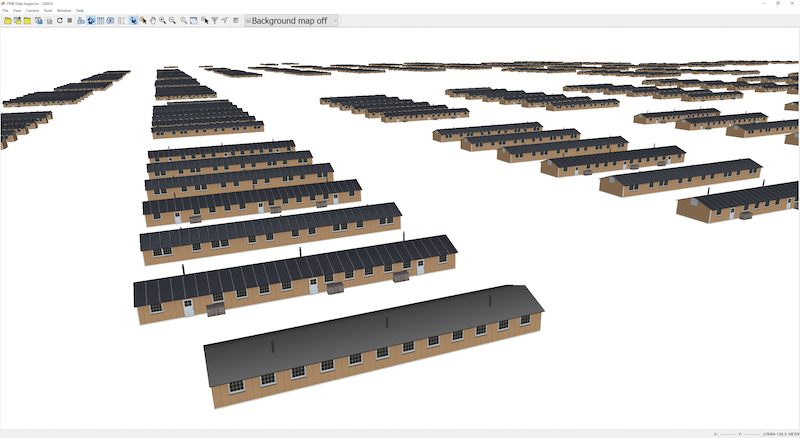

I didn’t try to recreate the buildings with all their construction details (no 2×4s or proper window frames); I mostly relied on textures to convey the look of the barrack. Our final dataset contains over 400 buildings, so we certainly wanted them to be lightweight for all the destination systems we had in mind.

Once the models were created, placing them where they belong required a single insertion point for each building and two transformers. The SharedItemAdder adds a geometry definition to the transformation, and the SharedItemIDSetter replaces points with the geometry instances.
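Conceptually, this is geometry instancing: the building shape is stored once and every insertion point becomes a lightweight reference to it. The Python sketch below illustrates the idea with hypothetical class and method names; it is not the FME API, only an analogy for what SharedItemAdder and SharedItemIDSetter accomplish. The 8-vertex box and the 400 insertion points are made-up inputs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence, Tuple

Vertex = Tuple[float, float, float]


@dataclass
class SharedGeometry:
    """A geometry definition stored once (hypothetical stand-in for a shared item)."""
    item_id: str
    vertices: List[Vertex]


@dataclass
class Instance:
    """A lightweight reference: which definition to draw, where, and how rotated."""
    item_id: str
    x: float
    y: float
    rotation_deg: float = 0.0


@dataclass
class Scene:
    library: Dict[str, SharedGeometry] = field(default_factory=dict)
    instances: List[Instance] = field(default_factory=list)

    def add_shared_item(self, geom: SharedGeometry) -> None:
        # Register the definition once (the role SharedItemAdder plays in FME).
        self.library[geom.item_id] = geom

    def replace_points(self, item_id: str,
                       points: Sequence[Tuple[float, float, float]]) -> None:
        # Turn each (x, y, rotation) insertion point into a reference to the
        # shared definition (the role SharedItemIDSetter plays in FME).
        if item_id not in self.library:
            raise KeyError(f"unknown shared item: {item_id}")
        for x, y, rotation in points:
            self.instances.append(Instance(item_id, x, y, rotation))

    def stored_vertex_count(self) -> int:
        # Vertices actually kept in the scene: one copy per definition.
        return sum(len(g.vertices) for g in self.library.values())

    def expanded_vertex_count(self) -> int:
        # Vertices a viewer would handle if every instance were copied out.
        return sum(len(self.library[i.item_id].vertices) for i in self.instances)


# Usage: one 8-vertex box reused at 400 hypothetical insertion points.
box = SharedGeometry("barrack", [(x, y, z) for x in (0.0, 1.0)
                                 for y in (0.0, 1.0) for z in (0.0, 1.0)])
scene = Scene()
scene.add_shared_item(box)
scene.replace_points("barrack", [(i * 30.0, 0.0, 0.0) for i in range(400)])
print(scene.stored_vertex_count(), scene.expanded_vertex_count())  # 8 3200
```

The gap between the two counts is the reason instancing pays off for a camp with hundreds of nearly identical barracks.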

Although I always try to do everything with FME, a couple of steps required manual digitizing. I used ArcGIS Pro to trace the barbed wire and place the guard tower points visible on the historic scan, which, in turn, had to be georeferenced to match the other data layers. The AffineWarper can take raster geometry along with control vectors: lines connecting the same points on the historic raster and on one of the layers in the proper coordinate system. I used foundation corners as the control points.
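Georeferencing from control vectors boils down to fitting an affine transform (six parameters) that maps source coordinates to target coordinates. Here is a minimal Python sketch that solves it by least squares from three or more vectors. We’re not claiming this is how the AffineWarper works internally, and the control points in the example are invented so that the expected answer (scale 2, shift of 100 and 50) is easy to check.

```python
from typing import List, Sequence, Tuple

# A control vector: (source x, source y) -> (target x, target y).
ControlVector = Tuple[Tuple[float, float], Tuple[float, float]]
Affine = Tuple[float, float, float, float, float, float]


def _solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("control points are collinear or degenerate")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            factor = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= factor * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))) / a[r][r]
    return x


def fit_affine(vectors: Sequence[ControlVector]) -> Affine:
    """Least-squares fit of X = a*x + b*y + c and Y = d*x + e*y + f."""
    if len(vectors) < 3:
        raise ValueError("need at least three control vectors")
    ata = [[0.0] * 3 for _ in range(3)]  # normal matrix A^T A
    atx = [0.0] * 3                      # A^T times target X
    aty = [0.0] * 3                      # A^T times target Y
    for (sx, sy), (tx, ty) in vectors:
        row = (sx, sy, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            atx[i] += row[i] * tx
            aty[i] += row[i] * ty
    a, b, c = _solve3(ata, atx)
    d, e, f = _solve3(ata, aty)
    return a, b, c, d, e, f


def apply_affine(p: Affine, x: float, y: float) -> Tuple[float, float]:
    a, b, c, d, e, f = p
    return a * x + b * y + c, d * x + e * y + f


# Usage: four invented control vectors generated by X = 2x + 100, Y = 2y + 50.
vectors = [
    ((0.0, 0.0), (100.0, 50.0)),
    ((10.0, 0.0), (120.0, 50.0)),
    ((0.0, 10.0), (100.0, 70.0)),
    ((10.0, 10.0), (120.0, 70.0)),
]
params = fit_affine(vectors)
print(apply_affine(params, 5.0, 5.0))
```

With more than three vectors the fit averages out small digitizing errors, which is why using several foundation corners is helpful.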

For the barbed wire, I needed to make sure that each span of the fence was placed logically, so that, for example, the wires wouldn’t hang in the air at the corners; in other words, there had to be a pole at each turn of the fence. The guard towers had to know their orientation relative to the fence to be placed correctly, which is a job for the NeighborFinder transformer.
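Both requirements can be sketched in a few lines of plain Python. `place_poles` splits every fence segment into equal spans no longer than a hypothetical `max_spacing`, so each turn of the fence always gets a pole, and `nearest_segment_angle` finds the fence segment closest to a guard tower point and returns its direction. In the project this was done with FME transformers such as the NeighborFinder; the function names and coordinates here are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def place_poles(fence: Sequence[Point], max_spacing: float) -> List[Point]:
    """Poles at every fence vertex (each turn) plus evenly spaced poles between."""
    if len(fence) < 2:
        raise ValueError("a fence needs at least two vertices")
    poles = [fence[0]]
    for (x0, y0), (x1, y1) in zip(fence, fence[1:]):
        length = math.hypot(x1 - x0, y1 - y0)
        spans = max(1, math.ceil(length / max_spacing))
        # k == spans lands exactly on the next vertex, so every turn gets a pole.
        for k in range(1, spans + 1):
            t = k / spans
            poles.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return poles


def nearest_segment_angle(p: Point, fence: Sequence[Point]) -> float:
    """Angle (degrees, counterclockwise from +X) of the fence segment nearest p."""
    if len(fence) < 2:
        raise ValueError("a fence needs at least two vertices")
    best_dist, best_angle = math.inf, 0.0
    for (x0, y0), (x1, y1) in zip(fence, fence[1:]):
        dx, dy = x1 - x0, y1 - y0
        seg_len2 = dx * dx + dy * dy
        # Project p onto the segment and clamp to its endpoints.
        t = 0.0 if seg_len2 == 0 else max(0.0, min(1.0, ((p[0] - x0) * dx + (p[1] - y0) * dy) / seg_len2))
        dist = math.hypot(p[0] - (x0 + t * dx), p[1] - (y0 + t * dy))
        if dist < best_dist:
            best_dist, best_angle = dist, math.degrees(math.atan2(dy, dx))
    return best_angle


# Usage: an L-shaped fence and two tower points near different sides.
fence = [(0.0, 0.0), (100.0, 0.0), (100.0, 50.0)]
poles = place_poles(fence, max_spacing=30.0)
print(len(poles), poles)
print(nearest_segment_angle((40.0, -5.0), fence), nearest_segment_angle((105.0, 30.0), fence))
```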

Once all the components were processed and prepared for the final assembly, one more workspace generated the output products.

Depending on whether we write Cesium 3D Tiles, an AR model, or the Unreal Datasmith file, the final steps are different: Cesium 3D Tiles is a projection- and attribute-aware format, the AR file needs some logic for placing the model in the real world, and the Datasmith file required extra processing within Unreal Engine.
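As an example of the logic needed to place an AR model in the real world, a viewer typically wants positions as east/north offsets in metres from an anchor location. The Python sketch below uses an equirectangular approximation, which is reasonable over a site spanning a few kilometres. It is an assumption for illustration, not the method the FME AR app uses, and the anchor coordinates in the example are hypothetical.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def local_offset_m(anchor_lat: float, anchor_lon: float,
                   lat: float, lon: float) -> Tuple[float, float]:
    """East/north offsets in metres of (lat, lon) from the anchor point,
    using an equirectangular (flat-Earth) approximation."""
    d_lat = math.radians(lat - anchor_lat)
    d_lon = math.radians(lon - anchor_lon)
    east = d_lon * math.cos(math.radians(anchor_lat)) * EARTH_RADIUS_M
    north = d_lat * EARTH_RADIUS_M
    return east, north


# Usage: a hypothetical anchor at 60°N, and a point 0.001° north-east of it.
east, north = local_offset_m(60.0, 10.0, 60.001, 10.001)
print(round(east, 2), round(north, 2))
```

At 60°N a degree of longitude is about half as long as a degree of latitude, which is why the east offset comes out at roughly half the north offset.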

Preparing the data for Unreal Engine

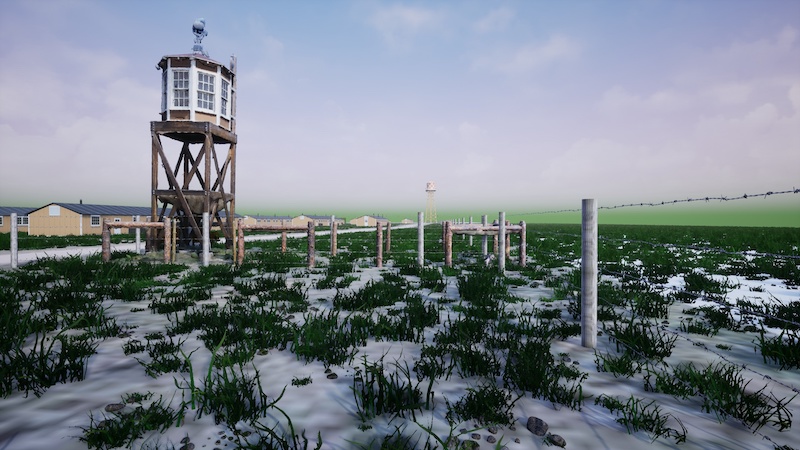

Unreal Engine comes with a few templates, which allow creating different kinds of games or, in non-gaming scenarios, real-time environments. For this project, I used the Multi-User Viewer template from UE4.22. It allows exploring the world alone or in multi-user mode, where each participant connects to the same world and sees the others as avatars.

Unreal Engine gives unlimited possibilities for making worlds look lifelike, but I took only a few simple steps: I set up collisions so that a user cannot walk through walls and fences, “planted” grass and “dropped” pebbles, and finally added a few 3D SketchUp models of old cars and trucks.

Step 3: Output to immersive environments

Unreal Engine

Once the editing was done, I generated the shareable executable. Below are a few screenshots, the video, and the download link to the game itself:

Explore Amache in the Unreal environment – download for Win64 or Mac

Navigation uses the traditional WASD keys. Pressing “B” toggles the semi-transparent extrusions of the buildings that have no models yet, and “Space” toggles flying mode. Keys 1 to 5 are bookmarks that teleport users to different locations around the camp.

Cesium

Here is the Amache camp in Cesium. This is a lighter version with barracks only, which allows the model to work well on most computers:

Explore Amache in your web browser via Cesium

FME AR

The latest version of the FME AR app can be made aware of the user’s location, so the model can be placed properly in the world relative to that location. I can’t easily travel to Colorado to test it on the spot, so here is a version of the camp on the sports field of Aspenwood Elementary in Port Moody, British Columbia:

Explore Amache

The history of the 20th century is full of difficult and tragic episodes like the Amache concentration camp. I was born and raised in Russia, which went through turbulent years during both world wars and between them, and I have a few such stories in my own family. It is our responsibility to preserve the memories of the past and to do our best to ensure that, 50 or 70 years from now, new generations of GIS specialists have only happy memories of our time to depict. This blog post focused on the technical side of the project. To learn more about the important work of the Amache Preservation Society and see the results of this collaborative effort, visit the ArcGIS StoryMap “Augmenting Amache”.

Learn more about this collaborative project in “Augmenting Amache”, an ArcGIS StoryMap

From a technical standpoint, we’ve learned that modern visualization technologies can be inspiring and impactful for specialists in different fields. We can easily create sophisticated 3D models and share them in many different ways: if you are standing in the place where your model belongs, you can use AR to augment the landscape around you however you want; with a URL, you can share your 3D model over the web with anyone or embed a beautiful visualization in your webpage with CesiumJS; and finally, the promising and rapidly developing area of non-gaming applications for gaming engines gives unprecedented power for creating shareable worlds limited only by your imagination.

From a project to visualize a future community hall, to a digital memorial, to an excursion through a remote location, we will certainly see more immersive worlds coming.