Audio manipulation in real time using point clouds

At any given time during the year, we have around seven to ten co-op students on our team here at Safe. As part of the co-op requirement, each student either completes a report on something they worked on during their four- or eight-month term or presents it as a Lunch & Learn.

Recently, one of our co-ops gave a presentation on something quite remarkable that he accomplished with FME Desktop. Passionate about music, Bruno Vacherot combined his interests and skills into something we haven’t seen before: FME audio manipulation. (Better than my co-op presentation a few years back. Mine somehow involved ponies.)

Yes, Bruno used geospatial technology to read audio files, modify them, add effects, and write out the resulting .wav file. Not only that, but he did it efficiently enough to process the audio at real-time speed. How? Using point clouds.

Let’s look at how this was done in FME Workbench.

Reading WAVE files as data points

A .wav file is made up of a header followed by binary information. If you’re thinking in spatial terms, as any noble FME user would, then this binary information is easily represented as a bunch of points. X is time and Y is amplitude. Because we’re dealing with a large volume of points, it makes the most sense to process the data as a point cloud.

This point cloud is more of an information cloud of time and amplitude values, rather than a point cloud in the traditional sense with X and Y values. But by using FME’s flexible and ultra-fast point cloud capabilities, we can process and visualize this data like we’re used to, treating it much like a LiDAR dataset.

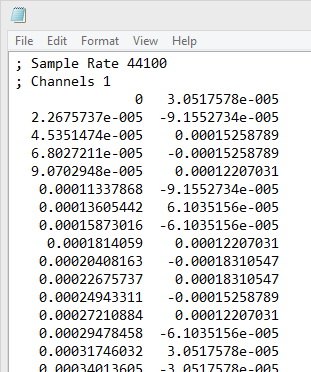

To prepare the .wav file for reading by the Point Cloud XYZ Reader, convert the WAVE data to DAT using the Sound eXchange (SoX) library.

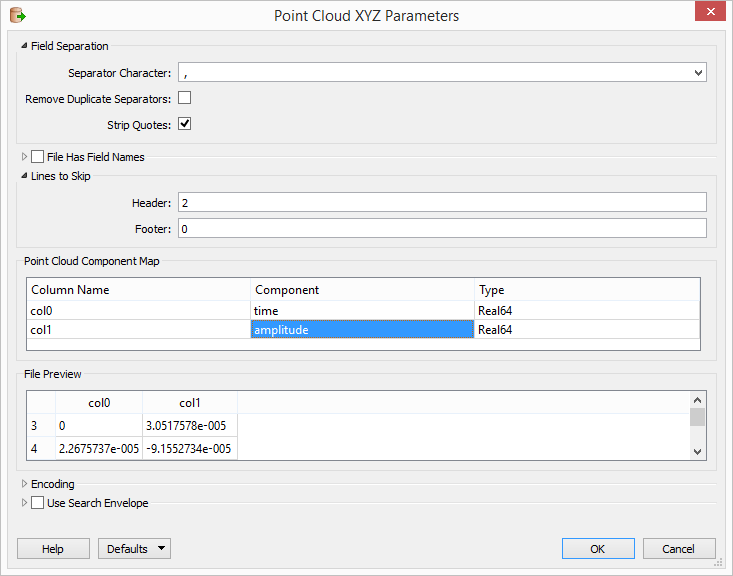
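To make the conversion concrete, here is a minimal Python sketch of what a WAVE-to-points step produces: one (time, amplitude) pair per sample, with amplitude scaled to the range -1 to 1. It uses only the standard-library `wave` module rather than SoX, and it assumes a 16-bit mono file; the function name `wav_to_points` is made up for illustration and is not part of Bruno's workspace.

```python
import struct
import wave


def wav_to_points(path):
    """Return (sample_rate, [(time_seconds, amplitude), ...]).

    Assumption for this sketch: the file is 16-bit mono PCM.
    """
    with wave.open(path, "rb") as wav:
        if wav.getnchannels() != 1 or wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit mono files")
        rate = wav.getframerate()
        raw = wav.readframes(wav.getnframes())

    # Each sample is a little-endian signed 16-bit integer.
    count = len(raw) // 2
    samples = struct.unpack("<%dh" % count, raw[: count * 2])

    # X is time (sample index / sample rate), Y is amplitude scaled to -1..1.
    return rate, [(i / rate, s / 32768.0) for i, s in enumerate(samples)]
```

With SoX itself installed, the same conversion is typically a single command such as `sox input.wav output.dat` (file names here are placeholders), since SoX picks the output format from the file extension.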

In FME Workbench, you can then use the Point Cloud XYZ Reader to read the data. In the reader parameters:

- Choose the separator character (Bruno converted the .dat file to comma-separated values, but you could also choose the tab character as the separator).

- Skip the first two header lines in the file.

- Specify to read the time and amplitude values in the point cloud as components, in the same manner as intensity, color, etc., might be stored in a typical LiDAR scan. You could also store time and amplitude as X and Y values, which makes for fun visualization in the FME Data Inspector, as you’ll see below.

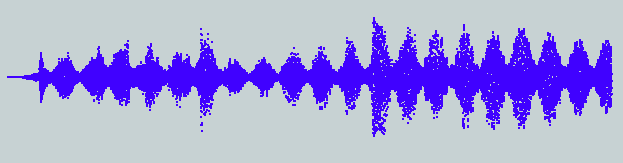

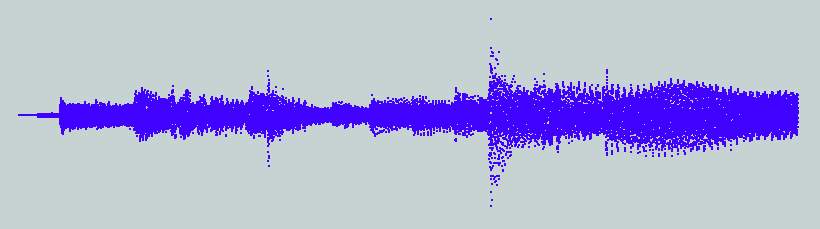
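For readers who want to see what those reader parameters amount to, the sketch below parses DAT-style text in plain Python: it skips a fixed number of header lines, splits each remaining line on a separator, and keeps the first two columns as time and amplitude. The header layout in the usage note (a sample-rate line and a channels line, each starting with a semicolon) is what SoX normally writes, but treat it as an assumption about your file; `parse_dat` is a hypothetical helper, not an FME function.

```python
def parse_dat(text, header_lines=2, separator=None):
    """Split DAT-style text into parallel time and amplitude lists.

    separator=None splits on any whitespace; pass "," for a
    comma-separated file or "\t" for a tab-separated one.
    """
    times, amplitudes = [], []
    for line in text.splitlines()[header_lines:]:
        fields = line.split(separator)
        if len(fields) < 2:
            continue  # skip blank or malformed lines
        times.append(float(fields[0]))
        amplitudes.append(float(fields[1]))
    return times, amplitudes
```

For example, `parse_dat("; Sample Rate 4\n; Channels 1\n0 0\n0.25 0.5\n")` returns `([0.0, 0.25], [0.0, 0.5])`.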

When the file is opened in the FME Data Inspector with the columns treated as X and Y rather than as Time and Amplitude components, the audio appears as a point cloud.

Isn’t that beautiful?

Manipulating the audio points

After reading in the point cloud, manipulating the audio data involves expression evaluations, merging, etc.—essentially, performing math on a point cloud. The FME workspace involves various point cloud transformers, like the PointCloudStatisticsCalculator, PointCloudExpressionEvaluator, PointCloudMerger, and PointCloudComponentCopier. At the time of writing, FME has 22 point cloud transformers.

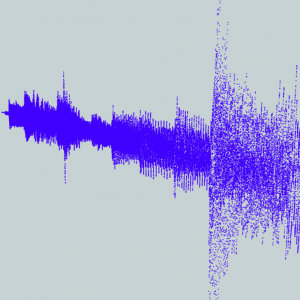

By thinking about the audio visually, as a series of points in 3D space, many transformations became possible. Here are a few examples:

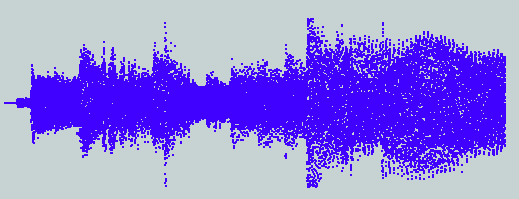

- Mono / stereo manipulation: Duplicate the mono file to create a stereo point cloud with two channels, then modify each independently before merging them back together.

- Dynamic range compression: lessen the distance between loud and quiet parts.

- Delay: scale and offset the points.

- Reverb: chain delays together, giving the effect of being loud in a large room.

- Tremolo: change the volume as it plays.

- Distortion: emulate a square wave using the input signal.

- Normalizer: set the maximum volume.
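Several of the effects in this list reduce to simple arithmetic on the amplitude values, which is why expression-style transformers are enough to build them. The Python sketch below shows one plausible formula for each of five effects, operating on a plain list of amplitudes. These are generic textbook-style versions chosen for illustration, not the expressions used in Bruno's workspace, and all function names and default parameters are assumptions.

```python
import math


def normalize(samples, peak=1.0):
    """Normalizer: scale so the loudest sample has absolute value `peak`."""
    loudest = max((abs(s) for s in samples), default=0.0)
    if loudest == 0.0:
        return list(samples)
    return [s * peak / loudest for s in samples]


def delay(samples, offset, gain=0.5):
    """Delay: mix in a copy shifted by `offset` samples and scaled by `gain`."""
    out = list(samples) + [0.0] * offset
    for i, s in enumerate(samples):
        out[i + offset] += s * gain
    return out


def tremolo(samples, rate, freq=5.0, depth=0.5):
    """Tremolo: vary the volume with a slow sine wave of `freq` Hz."""
    return [
        s * (1.0 - depth * 0.5 * (1.0 + math.sin(2 * math.pi * freq * i / rate)))
        for i, s in enumerate(samples)
    ]


def distort(samples, level=1.0):
    """Distortion: push each non-zero sample to +level or -level (square-ish wave)."""
    return [math.copysign(level, s) if s else 0.0 for s in samples]


def compress(samples, threshold=0.5, ratio=4.0):
    """Compression: shrink the part of each sample above `threshold` by `ratio`."""
    out = []
    for s in samples:
        magnitude = abs(s)
        if magnitude > threshold:
            magnitude = threshold + (magnitude - threshold) / ratio
        out.append(math.copysign(magnitude, s))
    return out
```

A reverb in the sense described above could then be approximated by applying `delay` several times with different offsets and decreasing gains.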

Whatever the manipulation, FME was able to process the audio faster than it takes to play the file. This suggests that point cloud input could be processed in real time.

Converting .dat information back to .wav

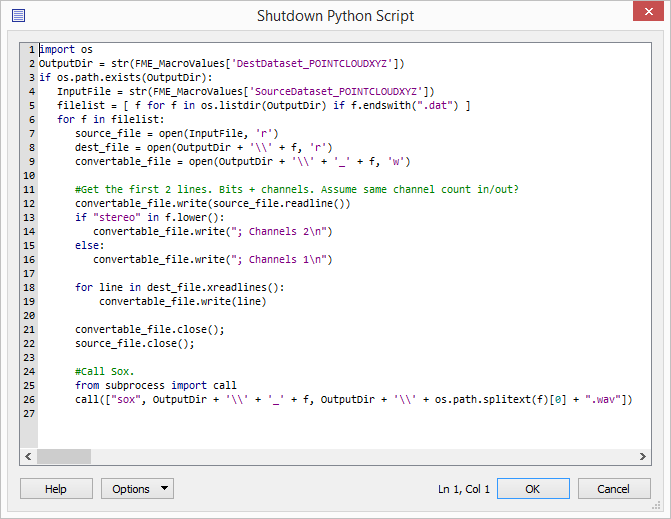

When writing the output, we want to export it as a playable file again, so we’ll use Python and the SoX library to convert the DAT information back to WAVE. A couple of Python calls will write the header information, and a call to the library will handle the data conversion. This is done in the Shutdown Python Script in the Workspace Parameters.
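As a rough illustration of that last step, the Python sketch below writes the two header lines followed by time and amplitude rows, then hands the file to the `sox` command-line tool if it is installed. The header text matches what SoX normally expects in a mono DAT file, but treat that layout, the function names, and the file paths as assumptions; this is not the actual shutdown script from the workspace.

```python
import shutil
import subprocess


def write_dat(path, amplitudes, sample_rate):
    """Write a mono DAT-style text file: two header lines, then time/amplitude rows."""
    with open(path, "w") as out:
        out.write("; Sample Rate %d\n" % sample_rate)
        out.write("; Channels 1\n")
        for i, amplitude in enumerate(amplitudes):
            out.write("%.8f %.8f\n" % (i / sample_rate, amplitude))


def dat_to_wav(dat_path, wav_path):
    """Convert DAT to WAVE with the sox command-line tool.

    Returns False without doing anything if sox is not on the PATH.
    """
    if shutil.which("sox") is None:
        return False
    # sox infers the input and output formats from the file extensions.
    subprocess.run(["sox", dat_path, wav_path], check=True)
    return True
```

A script run at workspace shutdown could call `write_dat` (or patch the header onto the file FME wrote) and then `dat_to_wav` to produce the playable file.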

Inspiration for the future

Thanks to a co-op’s creativity and FME’s point cloud transformers, this audio manipulation project unveiled exciting new territory and showed us just how efficient our point cloud technology is. In Bruno’s first project iteration, he treated every data point as a feature, so a sound clip of a few seconds took several minutes to process. By switching to a point cloud data structure, he was able to process the sound files at real-time speed. This is huge for performance. He was also able to leverage our point cloud transformers to manipulate the data in efficient and creative ways.

It certainly got us thinking. If FME can process audio in real time using point cloud technology, what other possibilities could there be?

As with any project that pushes the boundaries of FME, some ideas for improvement came out of it. For instance, it would be nice to keep track of what was done previously so we can make a calculation later on, such as manipulating the audio data based on a known frequency. Further, maybe the Point Cloud XYZ Writer would benefit from the ability to append to the output file rather than overwrite it.

We’re always excited about new ideas for future FME releases, so if you have ideas for FME 2016, we invite you to contribute to our Trello board.