Databricks is a cloud platform for big data analytics that unifies data warehouse and data lake concepts into one platform: the data lakehouse. But what if your data isn’t ready for analysis because it is spread across disparate systems and needs cleanup, validation, and transformation before it can be ingested? That’s where FME comes in. FME transforms, integrates, and delivers data, making it analytics-ready for Databricks.

For a deep dive into this topic and live demos, watch our webinar recording, How to Integrate FME with Databricks (and Why You’ll Want To).

“FME brings structure to chaos so Databricks can focus on computation.”

While Databricks excels at scalable computation and analytics, FME specializes in data preparation, transformation, and interoperability, especially for spatial and enterprise data. Together, they enable:

- End-to-end data integration with minimal coding

- Data quality improvements before ingestion into Databricks

- Workflow automation for repeatable, scalable processes

- Seamless integration with GIS tools, databases, web services, and more

In FME, users can connect to Databricks with a Reader or Writer.

- Databricks Reader: Connects to Databricks, allowing for SQL queries and data extraction from Delta Tables.

- Databricks Writer: Writes data into Delta Tables, supporting operations like appending, overwriting, or upserting data.
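To make the difference between the three write operations concrete, here is a minimal sketch in plain Python. It is not FME's or Databricks' implementation. It models a table as a list of rows, and the `write_rows` function, the `id` key column, and the sample rows are all hypothetical names chosen for illustration.

```python
from typing import Dict, List

Row = Dict[str, object]


def write_rows(table: List[Row], incoming: List[Row], mode: str, key: str = "id") -> List[Row]:
    """Return a new table after applying `incoming` rows in the given mode."""
    if mode == "append":
        # Keep every existing row and add the new ones, duplicates included.
        return table + incoming
    if mode == "overwrite":
        # Discard the existing contents and keep only the incoming rows.
        return list(incoming)
    if mode == "upsert":
        # Replace rows whose key already exists; insert the rest.
        merged = {row[key]: row for row in table}
        for row in incoming:
            merged[row[key]] = row
        return list(merged.values())
    raise ValueError(f"unknown mode: {mode}")


# Hypothetical sample data: id 2 exists in both sets, id 3 is new.
existing = [{"id": 1, "city": "Surrey"}, {"id": 2, "city": "Delta"}]
incoming = [{"id": 2, "city": "Richmond"}, {"id": 3, "city": "Burnaby"}]

for mode in ("append", "overwrite", "upsert"):
    print(mode, write_rows(existing, incoming, mode))
```

Appending keeps both versions of row 2, overwriting drops row 1 entirely, and upserting leaves three rows with row 2 updated. Which behavior is appropriate depends on whether the target table is a running log, a full snapshot, or a keyed record set.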

In FME Form, workflows can be built to transform, pre-process, and validate data. FME Flow can be used to run workflows on demand, on a schedule, or in response to a trigger.

Scenario 1: Moving Data In and Out of Databricks with FME Web Apps

With FME Flow, you can build Web Apps that let people of any technical skill level upload data, trigger workflows, and interact with Databricks pipelines securely, without manual processes. This democratizes data access and simplifies collaboration.

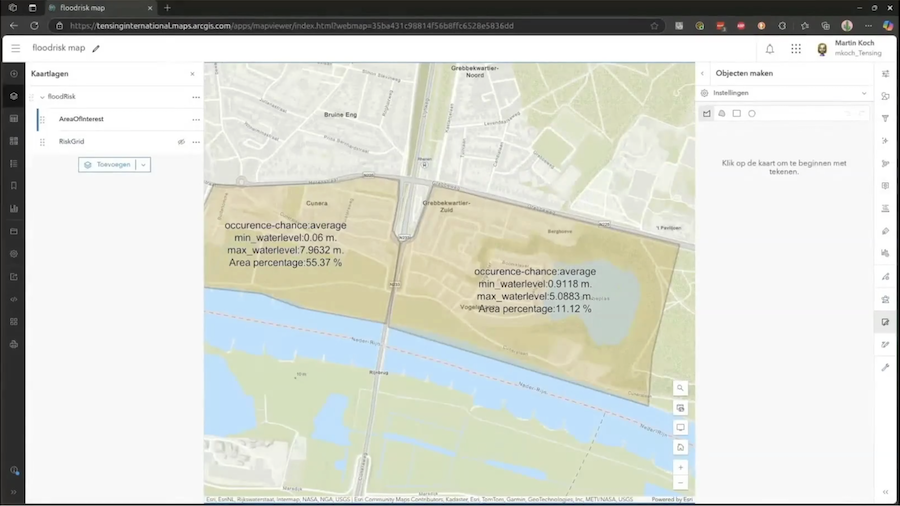

Scenario 2: Enabling Spatial Analysis with ArcGIS + Databricks

Databricks doesn’t natively support spatial formats, so FME acts as a bridge that converts and prepares geospatial data, enabling a smooth data exchange between Databricks and ArcGIS. This brings geospatial data within reach of Databricks’ scalable engine.
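As a rough illustration of what converting geospatial data can involve, the sketch below turns a GeoJSON geometry into Well-Known Text (WKT), a plain-text encoding that fits in an ordinary string column. This is an assumption about one possible approach, not a description of how FME performs the conversion. The `geojson_to_wkt` function is a hypothetical helper that handles only three geometry types.

```python
def _coords(points) -> str:
    # Join positions as "x y" pairs, ignoring any third (elevation) value.
    return ", ".join(f"{x} {y}" for x, y, *_ in points)


def geojson_to_wkt(geometry: dict) -> str:
    """Convert a GeoJSON Point, LineString, or Polygon to WKT."""
    kind = geometry["type"]
    coords = geometry["coordinates"]
    if kind == "Point":
        return f"POINT ({coords[0]} {coords[1]})"
    if kind == "LineString":
        return f"LINESTRING ({_coords(coords)})"
    if kind == "Polygon":
        # A polygon is a list of rings: the outer boundary, then any holes.
        rings = ", ".join(f"({_coords(ring)})" for ring in coords)
        return f"POLYGON ({rings})"
    raise ValueError(f"unsupported geometry type: {kind}")


# Hypothetical sample geometry (longitude, latitude order, as in GeoJSON).
print(geojson_to_wkt({"type": "Point", "coordinates": [-123.1, 49.25]}))
```

A production conversion also has to deal with coordinate systems, multi-part geometries, and attribute schemas, which is the kind of work the bridge described above takes on.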

Scenario 3: Creating a Data Virtualization Layer

FME can connect to multiple live data sources and present them as a unified virtual layer within Databricks. This eliminates duplication, simplifies data governance, and allows real-time data access, all without writing complex integration code.

Automation, Trust, and Interoperability

FME’s automation capabilities allow you to schedule jobs, respond to data events, and integrate with third-party systems, freeing teams from manual ETL tasks and reducing operational overhead.

It also supports trusted data pipelines, with built-in validation, QA/QC, and transformation logic that ensures data entering Databricks is clean, standardized, and fit for purpose.
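The idea of validating and standardizing records before they are loaded can be sketched in a few lines of Python. This is only an illustration of the concept, not FME's validation logic. The required fields, the range checks, and the `validate` and `split_records` functions are hypothetical.

```python
from typing import Dict, List, Tuple

Record = Dict[str, object]

# Hypothetical schema: fields every record must carry.
REQUIRED_FIELDS = ("id", "name", "latitude", "longitude")


def validate(record: Record) -> List[str]:
    """Return a list of problems found in one record (empty if it is clean)."""
    problems = []
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    lat, lon = record.get("latitude"), record.get("longitude")
    if isinstance(lat, (int, float)) and not -90 <= lat <= 90:
        problems.append("latitude out of range")
    if isinstance(lon, (int, float)) and not -180 <= lon <= 180:
        problems.append("longitude out of range")
    return problems


def split_records(records: List[Record]) -> Tuple[List[Record], List[Tuple[Record, List[str]]]]:
    """Separate clean records (with names standardized) from rejected ones."""
    passed, failed = [], []
    for record in records:
        problems = validate(record)
        if problems:
            failed.append((record, problems))
        else:
            # Standardize the name: trim whitespace and use title case.
            passed.append(dict(record, name=str(record["name"]).strip().title()))
    return passed, failed
```

Routing rejected records to a separate output, along with the reasons they failed, keeps bad rows out of the target table while leaving an audit trail for whoever fixes the source data.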

Finally, with support for hundreds of formats and systems, including Oracle, GeoJSON, Excel, cloud storage, APIs, and more, FME is a universal connector across your data ecosystem.

This integration will benefit anyone who is:

- Already using Databricks and struggling with upstream data issues, such as quality or complexity

- Working with spatial data in a Databricks environment

- Wanting to build automated, scalable data pipelines

- Looking to connect systems and deliver value faster without writing custom code

Learn More

Databricks provides the processing power. FME ensures your data is ready for it. Combined, they create a flexible, scalable, and future-proof analytics ecosystem.